Facebook Open Sources Its Servers and Data Centers

Facebook has shared many details of its new server and data center design in the Building Efficient Data Centers with the Open Compute Project article and project. The Open Compute Project effort aims to bring this kind of web-scale computing to the masses. The new data center is designed around AMD and Intel x86 processors.

You might ask why Facebook open-sourced its data centers. The answer is that Facebook has opened up a whole new front in its war with Google over top technical talent and ad dollars. “By releasing Open Compute Project technologies as open hardware,” Facebook writes, “our goal is to develop servers and data centers following the model traditionally associated with open source software projects. Our first step is releasing the specifications and mechanical drawings. The second step is working with the community to improve them.”

Incidentally, this data center approach has some similarities to Google’s data center designs, at least as far as the details Google has published. Despite Google’s professed love for all things open, details of its massive data centers have always been a closely guarded secret. Google usually talks about its servers only once they’re obsolete.

The Open Compute Project is not the first open source server hardware project. The How to build cheap cloud storage article shows another interesting project.

107 Comments

Tomi Engdahl says:

Can Open Hardware Transform the Data Center?

http://hardware.slashdot.org/story/11/10/30/0614234/can-open-hardware-transform-the-data-center

Is the data center industry on the verge of a revolution in which open source hardware designs transform the process of designing and building these facilities? This week the Open Compute Project gained momentum and structure, forming a foundation as it touted participation from IT heavyweights Intel, Dell, Amazon, Facebook, Red Hat and Rackspace.

Can Open Hardware Transform the Data Center?

http://www.datacenterknowledge.com/archives/2011/10/28/can-open-hardware-transform-the-data-center/

Is the data center industry on the verge of a revolution in which open source hardware designs transform the process of designing and building data centers? The Open Compute Project, an initiative begun in April by Facebook, is gaining partners, momentum and structure. Yesterday it unveiled a new foundation and board to shepherd the burgeoning movement.

If the project doesn’t succeed, it won’t be for lack of support. Yesterday’s second Open Compute Summit in New York featured appearances from executives for some of the sector’s leading names – Intel, Dell, Amazon, Facebook, Red Hat and Goldman Sachs. The audience was filled with data center thought leaders from Google, Microsoft, Rackspace and many other companies with large data center operations.

There are signs that the Open Compute designs could become more practical for a broader array of data center customers in the future.

Missing from the dais were companies specializing in power, cooling and mechanical design – areas where Open Compute designs are being shared.

Tomi Engdahl says:

Here is an interesting article that shows details of the “Open Compute” server and compares it to an HP server:

Facebook’s “Open Compute” Server tested

http://www.anandtech.com/show/4958/facebooks-open-compute-server-tested

The Facebook Open Compute server design is ambitious: “The result is a data center full of vanity free servers which is 38% more efficient and 24% less expensive to build and run than other state-of-the-art data centers.” Even better is that Facebook Engineering sent two of these Open Compute servers to our lab for testing, allowing us to see how these servers compare to other solutions in the market.

The Facebook Open Compute servers have made quite an impression on us. Remember, this is Facebook’s first attempt to build a cloud server!

Comments:

It seems to me that the HP server is doing as well as the Facebook ones, considering that it has more features (remote management, integrated graphics) and a “common” PSU.

tomi says:

HP: Hard drive shortages hitting Google, Facebook DIY servers

http://www.zdnet.com/blog/btl/hp-hard-drive-shortages-hitting-google-facebook-diy-servers/64026

The do-it-yourself server crowd is apparently having trouble procuring hard drives due to the floods in Thailand.

Google and Facebook may already be squeezed by hard drive shortages, says HP’s CEO.

Tomi Engdahl says:

Facebook’s new energy efficient data center

http://www.youtube.com/watch?v=a-xI4w0eR0Y&feature=related

Inside Facebook’s Server Room

http://www.youtube.com/watch?v=nhOo1ZtrH8c&feature=related

data center facebook

http://www.youtube.com/watch?v=-DRxqHrPrFw&feature=related

Tomi Engdahl says:

Facebook’s Oregon Data Center Uses As Much Power As Entire County

http://hardware.slashdot.org/story/12/01/31/0355228/facebooks-oregon-data-center-uses-as-much-power-as-entire-county

“The first phase of the Facebook data center in Oregon uses 28 megawatts of utility power, local officials said this week. That’s not extraordinary for a facility of that size in most data center hubs. But it stands out in Crook County, Oregon, where all the homes and businesses other than Facebook use 30 megawatts of power.”
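Put as arithmetic, the quoted figures mean this single facility draws almost half of the county’s total load. A quick sketch, assuming the two megawatt numbers describe the same kind of demand and, for the second line, that the 28 MW is drawn continuously all year:

```python
# Share of Crook County's electricity load, using the figures quoted above.
# Assumes both numbers describe the same kind of demand (e.g. average draw).
facebook_mw = 28.0        # Facebook data center, first phase
rest_of_county_mw = 30.0  # all other homes and businesses in the county

share = facebook_mw / (facebook_mw + rest_of_county_mw)
print(f"Facebook share of county load: {share:.0%}")

# Assumption: the 28 MW is drawn continuously, 24 hours a day, 365 days a year.
annual_mwh = facebook_mw * 24 * 365
print(f"Annual energy at constant draw: {annual_mwh:,.0f} MWh")
```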

How Clean is Your Cloud and Telecom? « Tomi Engdahl’s ePanorama blog says:

[...] of the facilities and the thousands of computers that go inside. However, despite significant improvements in efficiency, the exponential growth in cloud computing far outstrips these energy [...]

Tomi Engdahl says:

Facebook unfriends 19-inch data center racks

http://www.theregister.co.uk/2012/05/02/open_compute_summit_open_rack/

Social media giant Facebook had built precisely one data center in its short life, the one in Prineville, Oregon, before it had had enough of an industry standard that was part of the railroad infrastructure and then the telephone infrastructure build-outs and bubbles: the 19-inch rack for mounting electronic equipment.

At the Open Compute Summit in San Antonio, Texas, Frank Frankovsky, director of hardware design and supply chain at Facebook and one of the founders of the Open Compute Project, said it was time to get rid of the old 19-inch rack and give it a skosh more room – two inches to be precise – to do a better job of packing compute, storage, and networking gear in data centers and provide better airflow over components.

By sticking with the current 19-inch racks and their limitations, “we all end up with racks gone bad,” explained Frankovsky.

Given the tech industry’s experience with this sort of rack, and the fact that equipment rooms, tools, and techniques for bending metal to make electronics were all standardized on 19-inch racks, it made sense for the computer industry to adopt the same form factors when machines started getting racked up in volume in the late 1980s.

The rollout today of the Open Rack standard being proposed by the OCP is just one in what will no doubt be a lot of standards that are re-thought in the next several years by companies building hyperscale data centers.

The problem with 19-inch racks is that people try to cram too much gear into them, and they often end up poking out the back of the rack, which messes up the hot aisles in data centers. Maybe you can get a parts cart down the aisle now, and maybe you can’t. And racks are also packed with a bazillion network cables and heavy power cables, which makes maintenance of the machines in a rack difficult.

“Blades were a great promise,” said Frankovsky. “Companies needed help, and once blades came out, they said, ‘Please don’t help us anymore.’” By going back to the drawing board and coming up with the Open Rack design, Frankovsky says that the engineers at the social media company have come up with a scheme that is “blades done right.”

The OCP wants vendors to adopt the Open Rack standard, but they may never do so except for the hyperscale data center customers that might pick up the Facebook designs and use them in their own data centers (or adapt the ideas with tweaks).

“Let’s face it, guys. There’s only so many different ways to bend metal,” said Frankovsky, referring to the ways that vendors try to tweak their rack designs to make them a little bit different and how they did not standardize (as they could have) on blade server and chassis form factors a decade ago. “By completely standardizing the mechanicals and electricals, this is going to help us stay away from racks gone bad.”

By going with a 21-inch wide rack design, the Open Rack can put five 3.5-inch drives side by side inside a single server tray. It can also put three skinny two-socket server nodes across a tray and still have plenty of room for memory slots. That matters because the 3.5-inch disk is still the cheapest and densest storage device.
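A back-of-the-envelope check of the five-drive claim. It assumes the standard 3.5-inch drive width of 101.6 mm (4 in), the 537 mm chassis width from the Open Rack specification, and the nominal 450 mm opening of a 19-inch (EIA-310) rack. It ignores sheet metal, rails and airflow gaps, so the results are upper bounds, not a tray design:

```python
# Rough sketch: how many 3.5-inch drives fit side by side across a tray.
# Assumptions: 101.6 mm drive width, 537 mm Open Rack chassis width,
# 450 mm usable opening in a 19-inch rack. Real trays lose width to
# walls, rails and cabling, so treat these counts as upper bounds.

DRIVE_WIDTH_MM = 101.6


def drives_across(usable_width_mm: float, drive_width_mm: float = DRIVE_WIDTH_MM) -> int:
    """Return how many drives fit side by side in the given width."""
    return int(usable_width_mm // drive_width_mm)


open_rack = drives_across(537.0)  # Open Rack (21-inch class) tray
eia_19in = drives_across(450.0)   # traditional 19-inch rack

print(f"Open Rack: {open_rack} drives, 19-inch rack: {eia_19in} drives")
```

The extra two inches are just enough to go from four drives across to five.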

The Open Rack also has power trays that are separate from the servers, which allows servers to be even denser and gives more flexibility in what you can put in the rack in terms of servers, storage, and networking. The idea is that when a new processor from Intel or AMD comes out, you replace as few of the components as possible to get the CPU upgrade and leave everything else in place.

Chinese web powerhouses Tencent, Baidu, and Alibaba were already working on their own custom rack design, called “Project Scorpio,” which has some features similar to Open Rack, and Frankovsky said in a blog post that the two camps were working out how to converge their respective racks on a single standard by 2013.

Tomi Engdahl says:

Building Efficient Data Centers with the Open Compute Project

http://www.facebook.com/note.php?note_id=10150144039563920

This meant we could:

Use a 480-volt electrical distribution system to reduce energy loss.

Remove anything in our servers that didn’t contribute to efficiency.

Reuse hot aisle air in winter to both heat the offices and the outside air flowing into the data center.

Eliminate the need for a central uninterruptible power supply.
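One reason a higher distribution voltage cuts losses: for the same delivered power the current is I = P / V, and resistive loss in the wiring is I²R, so loss falls with the square of the voltage. The sketch below compares a hypothetical 10 kW load fed at 208 V and at 480 V through the same hypothetical 0.01-ohm conductor. It is a simplified single-phase model that ignores power factor, three-phase wiring and the transformer and conversion stages a 480 V design avoids, so it shows only part of the argument:

```python
# Simplified single-phase model of conduction loss in distribution wiring.
# The load, voltages and resistance are hypothetical illustration values.


def line_loss_watts(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """I^2 * R loss for a load drawing power_w at voltage_v."""
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


LOAD_W = 10_000.0      # hypothetical 10 kW rack
RESISTANCE_OHM = 0.01  # hypothetical conductor resistance

loss_208 = line_loss_watts(LOAD_W, 208.0, RESISTANCE_OHM)
loss_480 = line_loss_watts(LOAD_W, 480.0, RESISTANCE_OHM)

print(f"208 V: {loss_208:.1f} W lost, 480 V: {loss_480:.1f} W lost")
print(f"ratio: {loss_480 / loss_208:.3f}")  # equals (208 / 480) ** 2
```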

The result is that our Prineville data center uses 38 percent less energy to do the same work as Facebook’s existing facilities, while costing 24 percent less.

Inspired by the model of open source software, we want to share the innovations in our data center for the entire industry to use and improve upon. Today we’re also announcing the formation of the Open Compute Project, an industry-wide initiative to share specifications and best practices for creating the most energy efficient and economical data centers.

Tomi Engdahl says:

Open Compute Developing Wider Rack Standard

http://hardware.slashdot.org/story/12/05/03/1417232/open-compute-developing-wider-rack-standard

“Are you ready for wider servers? The Open Compute Project today shared details on Open Rack, a new standard for hyperscale data centers, which will feature 21-inch server slots, rather than the traditional 19 inches. “We are ditching the 19-inch rack standard,”

Tomi Engdahl says:

Why Open Compute Is a Win For Rackspace

http://hardware.slashdot.org/story/12/05/24/2328249/why-open-compute-is-a-win-for-rackspace

“Cloud provider Rackspace is looking to the emerging open source hardware ecosystem to transform its data centers. The cloud provider spends $200 million a year on servers and storage, and sees the Open Compute Project as the key to reducing its costs on hardware design and operations.”

‘I think the OEMs were not very interested (in Open Compute) initially,’ said Rackspace COO Mark Roenigk. ‘But in the last six months they have become really focused.’

Tomi Engdahl says:

How Open Compute is a Win for Rackspace

http://www.datacenterknowledge.com/archives/2012/05/23/how-open-compute-is-a-win-for-rackspace/

Rackspace President Lew Moorman discusses the company’s support for the Open Compute Project during the project’s recent summit in San Antonio.

In the battle for the hyper-scale data center, long-dominant server OEMs like Dell and HP are doing battle with a growing challenge from firms offering custom server designs. If you’re looking for the front lines in this battle, look no farther than companies like Rackspace Hosting.

Rackspace is one of the fastest-growing cloud computing providers. The San Antonio company spent $202 million on servers and storage for customers over the past year, adding more than 12,000 servers in its data centers.

Rackspace is also keen on increasing its use of technology based on the Open Compute Project (OCP), which is developing efficient open source designs for servers, storage and data centers. The company believes that the OCP designs can help it reduce costs on hardware design and operations.

But who will build that hardware for Rackspace? That’s a multi-million dollar question that demonstrates the potential value of the Open Compute initiative for service providers and hyper-scale users. Rackspace currently has its servers customized by HP and Dell, but is also having conversations with original design manufacturers (ODMs) like Quanta and Wistron about their capabilities.

This growing vendor ecosystem creates options for Rackspace, according to Mark Roenigk, Chief Operating Officer of Rackspace, who says it has prompted incumbent OEMs to step up their game when it comes to Open Compute hardware.

“I think the OEMs were not very interested (in Open Compute) initially,” said Roenigk. “But in the last six months they have become really focused. I think we have a lot to do with that. We have Dell and HP engineers on-site now.”

When Rackspace launched its cloud computing operation, it had no supply chain beyond its relationships with Dell, HP and Synnex, Roenigk said. But that’s changed.

“Thus far we’ve taken bits and pieces of the Open Compute designs and incorporated them into our solution,” said Roenigk. “Our hope and intention is that the Open Compute Project standardizes our design. We have no ambition to be in the hardware business. Open Compute can fill that gap.”

“Rackspace is totally committed to the Open Compute Project,” said Moorman. “We want efficiency and rapid innovation, and we can’t do it alone.”

And what about price savings on servers? While companies developing OCP designs like Quanta, Wistron and Hyve will be tough competitors, Roenigk says the key will be how HP and Dell deliver on their commitment to Open Compute. And price may not be the deciding factor.

“If HP and Dell can price something near where Quanta can, then I don’t have to call eight other guys to make it work,” said Roenigk. “Speed is important, because we’re growing so rapidly. In some ways, it’s more important than price. It’s a value proposition. There are times when I would rather get something now than get a better price later.”

“I was skeptical out of the gate,” he said. “I truly believe it’s real now. I think we’re seeing a fundamental shift I’ve not seen anything like in my 20 years in the business.”

Tomi Engdahl says:

Inside Facebook security: defending users from spammers, hackers, and ‘likejackers’

http://www.theverge.com/2012/5/25/2996321/inside-facebook-likejackers-spammers-hackers

If Facebook were a country, it would be the third largest in the world, just behind India and China. And like any country, Facebook has a police force to keep things under control. Some 300 people have been entrusted with the responsibility of protecting a 900-million-person virtual society from itself and from external forces.

Facebook’s deal with the world’s biggest anti-virus companies to include their blacklists in Facebook’s URL-scanning database got us thinking about other things the company does behind the scenes to keep its users safe, because a hacked, spammed, and depressed user isn’t coming back for more.

“Creating friction is the key to making users aware of what they’re actually doing,” Facebook Security and Safety team member Fred Wolens said, because the vast majority of “hacked” Facebook accounts don’t get hacked on Facebook.

Every week, Facebook starts by scanning the usual suspects, Pastebin-esque websites, for hackers dumping thousands of usernames and passwords. Facebook cross-references these credential dumps with its entire database of user credentials, then alerts any users who match to change their passwords.
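Cross-referencing a dump does not require storing plaintext passwords: each leaked username/password pair can be hashed with the salt stored for that account and compared with the stored hash. The sketch below shows that idea using PBKDF2 from Python’s standard library. The account store, the iteration count and the function names are hypothetical, and this is not Facebook’s published scheme:

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # hypothetical PBKDF2 work factor


def hash_password(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


# Hypothetical account store: username -> (salt, password hash).
accounts: dict[str, tuple[bytes, bytes]] = {}


def register(username: str, password: str) -> None:
    salt = os.urandom(16)
    accounts[username] = (salt, hash_password(password, salt))


def users_to_alert(dump: list[tuple[str, str]]) -> list[str]:
    """Return usernames whose leaked password matches their current one."""
    hits = []
    for username, leaked_password in dump:
        record = accounts.get(username)
        if record is None:
            continue  # leaked address is not a user of this site
        salt, stored = record
        # Constant-time comparison of the recomputed hash with the stored one.
        if hmac.compare_digest(stored, hash_password(leaked_password, salt)):
            hits.append(username)
    return hits


register("alice@example.com", "hunter2")
register("bob@example.com", "correct horse battery staple")

pastebin_dump = [
    ("alice@example.com", "hunter2"),     # reused password: alert
    ("bob@example.com", "old-password"),  # stale password: no alert
    ("carol@example.com", "letmein"),     # not a user
]
print(users_to_alert(pastebin_dump))
```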

Another measure Facebook takes is stripping the referral URL whenever a user clicks one of the two trillion links posted to Facebook every day.

A popular and nefarious way that spammers manipulate you is by putting invisible Like buttons on top of real buttons you can see, like “Download File.” For example, if you’re trying to pirate an album from a suspicious site, the Download link might actually be a Like button that subscribes you to content from that site. Without even knowing it, you are liking a page and thus polluting your friends’ News Feeds with a spam post.

Facebook responds to “likejacking” by sometimes showing a pop up that confirms whether or not you meant to Like that website.

The goal of many of these spammers is to generate impressions, just like banner ads do for content farm websites. Spammers get paid every time somebody clicks a link and sees an ad.

When somebody has accidentally liked a page or clicked a nefarious link, it’s unlikely that their Facebook account will be compromised. The real problem is that most people use the same username and password on most sites they sign up for. When a user’s credentials for another site are stolen, thieves simply try them on banking sites and social networks like Facebook.

When someone friends you on Facebook, that request doesn’t always get through to your inbox. Facebook employs a complex algorithm to decide the likelihood that you know somebody, and whether or not to push through a friend request or file it as spam inside your “See All Friend Requests” folder.

Facebook’s database of malicious links contains billions of bad URLs, and its spam filters are precise enough that just 0.5 percent of users see spam on a given day, by its estimates.
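Checking every shared link against billions of bad URLs calls for a compact membership test. One common technique, not necessarily what Facebook uses, is a Bloom filter: it never misses a URL that was added, at the cost of occasional false positives that a slower exact lookup can then confirm. A minimal sketch with hypothetical sizes and example URLs:

```python
import hashlib


class BloomFilter:
    """Fixed-size Bloom filter using k bit positions derived from SHA-256."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: str):
        data = item.encode("utf-8")
        for i in range(self.num_hashes):
            # Prefix the data with the hash index to get k different positions.
            digest = hashlib.sha256(i.to_bytes(4, "big") + data).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # False means "definitely not added"; True means "probably added".
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))


blacklist = BloomFilter(num_bits=1 << 20, num_hashes=7)  # hypothetical sizing
for url in ["http://spam.example/free-album", "http://likejack.example/download"]:
    blacklist.add(url)

print(blacklist.might_contain("http://spam.example/free-album"))  # True: added URLs are never missed
print(blacklist.might_contain("https://www.example.org/"))        # very likely False
```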

The difference for now is that we’re all choosing to use Facebook and explicitly accepting the company’s monitoring and control — they’re unfortunate preconditions of the virtual society. Without these rules, a site that entertains us for hours each day might descend into a spam and crap-filled cesspool, which isn’t very fun. And unlike the real world, if these rules change, it’s a lot easier to delete your Facebook profile than it is to relocate to another country.

Tomi Engdahl says:

Facebook smacks away hardness, sticks MySQL stash on flash

Replaces HDD with Fusion-io flashiness

http://www.theregister.co.uk/2012/05/31/facebook_fusion_io/

Facebook is using Fusion-io server flash cards in its datacentres to store MySQL data, not just to process it faster, because the cards perform better than disk drives.

Piper Jaffray analyst Andrew Nowinski tells us that Facebook is using Fusion-io ioDrive PCIe flash cards for capacity as well as performance acceleration through flash caching.

“The performance benefits of the Fusion-io solution greatly exceeded the HDD solution, making the price/performance much more attractive.”

Facebook is now “hosting [its] MySQL databases on Fusion-io cards, rather than using an HDD solution from LSI.” Nowinski tells us one reason is that MySQL has a journalling system wherein each row of data is written twice, once into the database table and again into the journal for redundancy and protection against a write failure. This uses up storage capacity and lowers performance.

He says: “By leveraging Fusion-io cards and the new SDK, customers can turn off this journalling system, since the Fusion-io cards also have a patented logging system that protects data in the event of power failure. By turning off journalling, management stated that customers can reduce the amount of data that is stored by 50 per cent, increase throughput by 33 per cent and decrease latency by 50 per cent versus the alternative HDD storage.”
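The 50 per cent storage figure follows from simple arithmetic if every row is written twice, once to the table and once to the journal: turning off the second write halves the bytes written. A small sketch with a hypothetical row size and write count. It models only the double write described above, not MySQL’s actual on-disk format, and does not attempt to reproduce the throughput or latency claims:

```python
# Hypothetical workload: bytes written with and without the journal copy.
ROW_BYTES = 200           # hypothetical average row size
ROWS_WRITTEN = 1_000_000  # hypothetical number of row writes


def bytes_written(rows: int, row_bytes: int, journalled: bool) -> int:
    copies = 2 if journalled else 1  # table write, plus journal write if enabled
    return rows * row_bytes * copies


with_journal = bytes_written(ROWS_WRITTEN, ROW_BYTES, journalled=True)
without_journal = bytes_written(ROWS_WRITTEN, ROW_BYTES, journalled=False)
reduction = 1 - without_journal / with_journal
print(f"{with_journal} vs {without_journal} bytes, {reduction:.0%} less")
```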

Tomi Engdahl says:

Facebook likes wimpy cores, CPU subscriptions

http://www.eetimes.com/electronics-news/4375880/Facebook-likes-wimpy-cores–CPU-subscriptions

Facebook could start running–at least in part–on so-called wimpy server CPU cores by the second half of 2013. Long term, the company wants to move to a systems architecture that lets it upgrade CPUs independent of memory and networking components, buying processors on a subscription model.

The social networking giant will not reveal whether it will use ARM, MIPS-like or Atom-based CPUs first. But it does plan to adopt so-called wimpy cores over time to replace some of the workloads currently run on more traditional brawny cores such as Intel Xeon server processors.

“It will be a journey [for the wimpy cores] starting with less intensive CPU jobs [such as storage and I/O processing] and migrating to more CPU-intensive jobs,” said Frank Frankovsky.

“We’re testing everything, and we don’t have any religion on processor architectures,” Frankovsky said.

Facebook published a white paper last year reporting on its tests that showed the MIPS-like Tilera multicore processors provided a 24 percent benefit in performance per watt per dollar when running memcached jobs. Tilera is “the furthest along” of all the offerings, and they are “production ready today” with 64-bit support, he said.

Frankovsky noted several ARM SoCs and alternatives from both Intel and AMD are also “in the hunt.”

Tomi Engdahl says:

A rare look inside Facebook’s Oregon data center [photos, video]

http://gigaom.com/cleantech/a-rare-look-inside-facebooks-oregon-data-center-photos-video/

During a rare visit to Facebook’s Prineville data center on Thursday, the outside temperature hit a high of 93 degrees Fahrenheit.

Building No. 1 of the data center was as noisy as an industrial factory, with air flowing through the cooling rooms and the humidifier room spraying purified water onto the incoming air at a rapid rate. It’s like peeking inside a brand new Porsche while it’s being driven at its fullest capacity.

Facebook’s data center here is one of the most energy efficient in the world. The social network invested $210 million for just the first phase of the data center, which GigaOM got a chance to check out during a two-hour tour.

Building No. 1 is where Facebook first started designing its ultra-efficient data centers and gear, and where it wanted the first round of servers that it open sourced under the Open Compute Project to live. Building No. 1 opened in the spring of 2011.

Facebook has slightly tweaked its designs for Building No. 2 at the Prineville site, as well as the designs for its data centers in North Carolina and Sweden. Building No. 2 will use a new type of cooling system.

Facebook is currently investing heavily in its infrastructure boom. It now has an estimated 180,000 servers for its 900 million plus users — that’s up from its estimated 30,000 in the winter of 2009, and 60,000 in the summer of 2010.

Facebook is learning how to be an infrastructure company.

Tomi Engdahl says:

Open Compute Project Publishes Final Open Rack Spec

http://hardware.slashdot.org/story/12/09/19/2123219/open-compute-project-publishes-final-open-rack-spec

The Open Compute Project has published the final specification of the Open Rack Specification, which widens the traditional server rack to more than 23 inches. Specifically, the rack is 600 mm wide (versus the 482.6 mm of a 19-inch rack), with the chassis guidelines calling for a width of 537 mm. All told, that’s slightly wider than the 580 mm used by the Western Electric or ETSI rack.
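The quoted widths convert to inches with the exact factor 1 in = 25.4 mm. This quick sketch reproduces the conversions, using the millimetre figures given above:

```python
MM_PER_INCH = 25.4  # exact by definition

# Widths as quoted in the Open Rack announcement above.
widths_mm = {
    "Open Rack overall": 600.0,
    "Open Rack chassis guideline": 537.0,
    "19-inch rack": 482.6,
    "Western Electric / ETSI": 580.0,
}

for name, mm in widths_mm.items():
    print(f"{name}: {mm} mm = {mm / MM_PER_INCH:.2f} in")
```

This is why the 600 mm rack can be described as “more than 23 inches” while the 537 mm chassis lands close to the 21-inch slot width mentioned in the earlier Open Rack announcement.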

Open Rack 1.0 Specification Available Now

http://opencompute.org/2012/09/18/open-rack-1-0-specification-available-now/

Tomi Engdahl says:

The Computer Science Behind Facebook’s 1 Billion Users

http://developers.slashdot.org/story/12/10/05/233254/the-computer-science-behind-facebooks-1-billion-users

“Much has been made about Facebook hitting 1 billion users. But Businessweek has the inside story detailing how the site actually copes with this many people and the software Facebook has invented that pushes the limits of computer science.”

Facebook: The Making of 1 Billion Users

http://www.businessweek.com/articles/2012-10-04/facebook-the-making-of-1-billion-users

Tomi Engdahl says:

Open Compute Project Hosts Hackathon

http://hackaday.com/2012/12/11/open-compute-project-hosts-hackathon/

The folks at Open Compute Project are running their annual summit in January, but this year they’ll be adding a hardware hackathon to the program. The hackathon’s goal is to build open source hardware that can be applied to data centers to increase efficiency and reduce costs.

Tomi Engdahl says:

Open Compute ‘Group Hug’ Board Allows Swappable CPUs In Servers

http://hardware.slashdot.org/story/13/01/16/2035207/open-compute-group-hug-board-allows-swappable-cpus-in-servers

“AMD, Intel, ARM: for years, their respective CPU architectures required separate sockets, separate motherboards, and in effect, separate servers. But no longer: Facebook and the Open Compute Summit have announced a common daughtercard specification that can link virtually any processor to the motherboard.”

Tomi Engdahl says:

“Group Hug” Board Allows Swappable CPUs in Servers

http://slashdot.org/topic/datacenter/group-hug-board-allows-swappable-cpus-in-servers/

It’s finally happened: a swappable daughtercard will allow AMD, Intel, and even ARM CPUs to be interchanged, thanks to a new Open Compute specification.

AMD, Intel, ARM: for years, their respective CPU architectures required separate sockets, separate motherboards, and in effect, separate servers. But no longer: Facebook and the Open Compute Summit have announced a common daughtercard specification that can link virtually any processor to the motherboard.

AMD, Applied Micro, Intel, and Calxeda have already embraced the new board, dubbed “Group Hug.” Hardware designs based on the technology will reportedly appear at the show. The Group Hug card will be connected via a simple x8 PCI Express connector to the main motherboard.

It’s hard to overstate the potential of the technology. Although many other components within a server—including power supplies, hard drives, memory and I/O cards—have long been replaceable, processors have not.

While a standard has been provided, it may be some time before servers built on the technology appear in the real world.

Customization of servers will increase exponentially as a result.

Tomi Engdahl says:

Intel, Facebook collaborate on new data center rack technologies

http://www.cablinginstall.com/articles/2013/january/intel-facebook-data-center-rack.html

Intel and Facebook announced that the companies are collaborating to define the next generation of data center rack technologies to enable the disaggregation of computing, network and storage resources. In a follow-on announcement, Quanta Computer unveiled a mechanical prototype of the companies’ new silicon photonics rack architecture, the better to demonstrate the potential for total cost, design and reliability improvements via disaggregation.

Rack disaggregation refers to the separation of those resources that currently exist in a rack, including computing, storage, networking, and power distribution into discrete modules.

The mechanical prototype from Quanta Computer is a demonstration of Intel’s photonic rack architecture for interconnecting the various resources, showing one of the ways computing, network and storage resources can be disaggregated within a rack. Further, Intel says it will contribute a design for enabling a photonic receptacle to the Open Compute Project (OCP), and will work with Facebook, Corning and others over time to standardize the design.

Tomi Engdahl says:

Who needs HP and Dell? Facebook now designs all its own servers

Facebook’s newest data center won’t have any OEM servers.

http://arstechnica.com/information-technology/2013/02/who-needs-hp-and-dell-facebook-now-designs-all-its-own-servers/

Nearly two years ago, Facebook unveiled what it called the Open Compute Project. The idea was to share designs for data center hardware like servers, storage, and racks so that companies could build their own equipment instead of relying on the narrow options provided by hardware vendors.

While anyone could benefit, Facebook led the way in deploying the custom-made hardware in its own data centers. The project has now advanced to the point where all new servers deployed by Facebook have been designed by Facebook itself or designed by others to Facebook’s demanding specifications. Custom gear today takes up more than half of the equipment in Facebook data centers. Next up, Facebook will open a 290,000-square-foot data center in Sweden stocked entirely with servers of its own design, a first for the company.

“It’s the first one where we’re going to have 100 percent Open Compute servers inside,” Frank Frankovsky, VP of hardware design and supply chain operations at Facebook, told Ars in a phone interview this week.

At Facebook’s scale, it’s cheaper to maintain its own data centers than to rely on cloud service providers, he noted. Moreover, it’s also cheaper for Facebook to avoid traditional server vendors.

Like Google, Facebook designs its own servers and has them built by ODMs (original design manufacturers) in Taiwan and China, rather than OEMs (original equipment manufacturers) like HP or Dell. By rolling its own, Facebook eliminates what Frankovsky calls “gratuitous differentiation,” hardware features that make servers unique but do not benefit Facebook.

It could be as simple as the plastic bezel on a server with a brand logo, because that extra bit of material forces the fans to work harder. Frankovsky said a study showed a standard 1U-sized OEM server “used 28 watts of fan power to pull air through the impedance caused by that plastic bezel,” whereas the equivalent Open Compute server used just three watts for that purpose.
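To put the bezel numbers in perspective, the sketch below turns the quoted 28 W versus 3 W of fan power into energy per server per year. It assumes the fans run at that level around the clock (8,760 hours a year), and the fleet size is a hypothetical round number, not a Facebook figure:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h, assuming continuous operation

oem_fan_w = 28.0  # quoted fan power for the OEM 1U server with bezel
ocp_fan_w = 3.0   # quoted fan power for the Open Compute server
fleet = 10_000    # hypothetical number of servers

saving_w = oem_fan_w - ocp_fan_w
kwh_per_server = saving_w * HOURS_PER_YEAR / 1000
print(f"{saving_w:.0f} W saved per server = {kwh_per_server:.0f} kWh per year")
print(f"Across {fleet} servers: {kwh_per_server * fleet / 1000:.0f} MWh per year")
```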

A path for HP and Dell: Adapt to Open Compute

That doesn’t mean Facebook is swearing off HP and Dell forever. “Most of our new gear is built by ODMs like Quanta,” the company said in an e-mail response to one of our follow-up questions. “We do multi-source all our gear, and if an OEM can build to our standards and bring it in within 5 percent, then they are usually in those multi-source discussions.”
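The multi-sourcing rule in that e-mail can be written as a one-line check. This sketch assumes that “within 5 percent” means an OEM quote no more than 5 percent above the best ODM quote for gear built to Facebook’s standards; the function name and prices are hypothetical:

```python
def oem_in_multisource(oem_price: float, best_odm_price: float,
                       meets_spec: bool, tolerance: float = 0.05) -> bool:
    """Hypothetical rule: OEM builds to spec and prices within tolerance of the ODM."""
    return meets_spec and oem_price <= best_odm_price * (1 + tolerance)


print(oem_in_multisource(1040.0, 1000.0, meets_spec=True))   # 4% above the ODM quote
print(oem_in_multisource(1080.0, 1000.0, meets_spec=True))   # 8% above the ODM quote
print(oem_in_multisource(1000.0, 1000.0, meets_spec=False))  # not built to spec
```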

HP and Dell have begun making designs that conform to Open Compute specifications, and Facebook said it is testing one from HP to see if it can make the cut. The company confirmed, though, that its new data center in Sweden will not include any OEM servers when it opens.

Tomi Engdahl says:

Rackspace: Why we’re designing our own cloud servers

Just what will it take to compete with Amazon and Google?

http://www.theregister.co.uk/2013/03/18/rackspace_server_fleet_open_compute/

Any cloud computing provider that wants to operate at scale and compete against its peers is under pressure to build some kind of custom hardware. It may, in fact, be necessary to compete at all.

That is what Rackspace, which is making the transition from website hosting to cloud systems, believes.

And that’s why the San Antonio, Texas-based company started up OpenStack – the open-source cloud controller software project – with NASA nearly three years ago, and accepted an invitation from Facebook to join the Open Compute Project, an effort by the social network to design open-source servers and storage and the data centres in which they run.

“We took the model of putting servers up on racks very quickly and turning them on in 24 hours and we called it managed hosting. At the time, all of our founders at Rackspace were Linux geeks and they were all do-it-yourselfers, and they were literally building white-box servers. They were buying motherboards, processors, and everything piecemeal, and we assembled these tower-chassis form-factors on metal bread racks and it was really not very sexy.”

“We mimicked what the enterprise would do in their data centre to go win business from those enterprises,” said Engates. “Enterprises didn’t want to think they were being put on a white-box, homemade server. They wanted a real server with redundant power supplies and all that fancy stuff.”

Rack servers evolved and matured

“Now,” said Engates, “we are basically back to our own designs because it really doesn’t make a lot of sense to put cloud customers on enterprise gear. Clouds are different animals – they are architected and built differently, customers have different expectations, and the competition is doing different things.”

What is good for Facebook is not perfect for Rackspace, as the latter explained at the Open Compute Summit back in January, but the basic rack and server designs can be tweaked to fit the needs of a managed hosting and public cloud provider.

Rackspace has been pretty quiet about what it has been doing with Open Compute until earlier this year, partly because of its decision to radically change its business with both OpenStack and Open Compute.

The custom Open Compute machines cost anywhere from 18 to 22 per cent less to build than the bespoke boxes from HP and Dell that make up about 18 per cent of the Rackspace server fleet, which is about 16,300 of the 90,525 boxes that were running at the end of December across the cloud company’s data centres.
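The fleet figures are consistent with each other: 16,300 out of 90,525 machines is about 18 per cent. A small sketch of that check, plus what an 18 to 22 per cent saving means against a hypothetical $10,000 reference server (the price is illustrative only; the article gives no unit costs):

```python
def share(part: int, whole: int) -> float:
    """Fraction of the fleet represented by `part`."""
    return part / whole


def cost_after_saving(reference_cost: float, saving: float) -> float:
    """Build cost when the custom design is `saving` (a fraction) cheaper."""
    return reference_cost * (1.0 - saving)


fleet_share = share(16_300, 90_525)
print(f"{fleet_share:.1%}")  # 18.0%

reference = 10_000.0  # hypothetical per-server price, for illustration only
low = cost_after_saving(reference, 0.22)
high = cost_after_saving(reference, 0.18)
print(f"${low:,.0f} to ${high:,.0f}")  # $7,800 to $8,200
```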

“We have all kinds of horses in the barn, but for the past 18 months, we have been only dealing in high-density compute with boxes completely maxed out. We will run almost 20 kilowatts per cabinet, so it is very dense compute,” said Roenigk.

Tomi Engdahl says:

Facebook revealed as company behind $1.5 billion Altoona project

http://www.desmoinesregister.com/article/20130419/BUSINESS/130419046/Facebook-revealed-company-behind-1-5-billion-Altoona-project?gcheck=1&nclick_check=1

Facebook is the company behind a $1.5 billion data center that’s expected to land in Altoona, a project that’s being touted as “the most technologically advanced data center in the world,” legislative sources told the Des Moines Register today.

The project is expected to be completed in two $500 million phases near Altoona, where leaders have provided a green light for a 1.4 million square foot facility. When completely built out, experts expect the facility will cost $1.5 billion.

The Iowa Economic Development Authority Board and Altoona’s City Council are expected to consider incentives for the project on Tuesday.

Tomi Engdahl says:

Penguin Computing to make Open Compute servers

And apparently a lot more money, thanks to Zuck & Co.

http://www.theregister.co.uk/2013/05/10/penguin_computing_open_compute_servers/

Linux server and cluster maker Penguin Computing is a member of the Open Compute Project started by Facebook to create open source data center gear, and now it is an official “solution provider”.

This means that Penguin now has OCP’s official blessing to make and sell integrated systems based on the motherboard and system designs that other OCP members cook up, and that it has met the manufacturing criteria to be able to put the OCP label on the machines.

Not everyone can use the stock Facebook designs, which come with their own custom Open Rack and power distribution and battery units, and which are tied very tightly to the custom data centers that Facebook has built. Some companies cannot easily ditch 19-inch racks and want some of the benefits of the “vanity free” OCP designs without having to gut their data center or build a new one. And that’s where companies like Penguin will come in, helping tweak OCP designs to fit into existing data centers.

“The world is changing, and coupled with some of the work we have done with ARM-based servers, we just want to be at the front end of these changes,” says Wuischpard – and the official designation by OCP means that Penguin can sell machines into Facebook itself.

“Our estimation is that OCP could be 40 per cent of our business within the next twelve months,” Wuischpard says, “if not more.”

That assumes that HP and Dell don’t get all gung-ho about Open Compute machinery. And if they see Penguin making money, they just might have no choice but to embrace open hardware, no matter how much they want to sell their own designs.

Tomi Engdahl says:

Facebook’s first data center DRENCHED by ACTUAL CLOUD

Revealed: Cloud downed by … cloud!

http://www.theregister.co.uk/2013/06/08/facebook_cloud_versus_cloud/

Facebook’s first data center ran into problems of a distinctly ironic nature when a literal cloud formed in the IT room and started to rain on servers.

Though Facebook has previously hinted at this via references to a “humidity event” within its first data center in Prineville, Oregon, the social network’s infrastructure king Jay Parikh told The Reg on Thursday that, for a few minutes in summer 2011, Facebook’s data center contained two clouds: one powered the social network, the other poured water on it.

Tomi Engdahl says:

Facebook turns on frigid Swedish ice-maidens in new data centre

And by maidens, we mean slim, ‘vanity-free’, low-maintenance models

http://www.theregister.co.uk/2013/06/13/facebook_swedish_datacenter/

If you Like this post, some of the processing that spams it all over your Facebook wall could now take place in Europe, thanks to the opening of Facebook’s new data center near the Arctic circle.

The social networking giant announced on Wednesday that its 900,000 square foot facility in Lulea, Sweden, is now “handling live traffic from around the world” – giving users lower latency when accessing the social network, and Swedish spooks the opportunity to hoover up all the data passing through Zuck & Co’s bit barn.

The Lulea, Sweden facility is expected to be one of the most efficient public data centers in the world thanks to its use of fresh air cooling and renewable power generated by hydroelectric dams.

Its opening also blows a chill wind for traditional IT suppliers, as it is a poster child for Facebook’s radical new approach to hardware design.

“Nearly all the technology in the facility, from the servers to the power distribution systems, is based on Open Compute Project designs,” the company beamed in a blog post on Wednesday.

Facebook says the power usage effectiveness (PUE) of the facility should be 1.07, which means that for every watt spent on powering IT gear, 0.07 watts are spent on the supporting equipment that keeps the social network humming.
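PUE is total facility power divided by the power delivered to IT equipment, so the overhead per IT watt is simply PUE minus one. A minimal sketch of that arithmetic; the 10,700 kW and 10,000 kW loads are hypothetical numbers chosen to reproduce the quoted ratio, not Luleå measurements:

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_per_it_watt(pue_value: float) -> float:
    """Watts spent on cooling, power conversion, lighting etc. per IT watt."""
    return pue_value - 1.0


# Hypothetical loads that reproduce the quoted 1.07 figure.
print(round(pue(10_700, 10_000), 2))  # 1.07

for p in (1.07, 1.5, 1.8):
    print(p, round(overhead_per_it_watt(p), 2))
```

On the industry range quoted below, the overhead is 0.5 to 0.8 W per IT watt, roughly 7 to 11 times Luleå's 0.07 W.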

This compares well with the rest of the IT industry, whose trailing PUE average tends to fall between 1.5 and 1.8, depending on who you ask.

Tomi Engdahl says:

Luleå goes live

https://www.facebook.com/notes/lule%C3%A5-data-center/lule%C3%A5-goes-live/474321655969861

On the edge of the Arctic Circle, where the River Lule meets the Gulf of Bothnia, lies a very important building. Facebook’s newest data center – in Luleå, Sweden – is now handling live traffic from around the world.

All the equipment inside is powered by locally generated hydro-electric energy. Not only is it 100% renewable, but the supply is also so reliable that we have been able to reduce the number of backup generators required at the site by more than 70 percent. In addition to harnessing the power of water, we are using the chilly Nordic air to cool the thousands of servers that store your photos, videos, comments, and Likes. Any excess heat that is produced is used to keep our office warm.

All this adds up to a pretty impressive power usage effectiveness (PUE) number. In early tests, Facebook’s Luleå data centre is averaging a PUE in the region of 1.07. As with our other data centres, we will soon be adding a real-time PUE monitor so everyone can see how we are performing on a minute-by-minute basis.

Tomi Engdahl says:

A Storm of Servers: How the Leap Second Led Facebook to Build DCIM Tools

http://www.datacenterknowledge.com/archives/2013/08/06/server-storm/

The company is developing its own DCIM software, based on insights gained during last year’s Leap Second bug.

For data centers filled with thousands of servers, it’s a nightmare scenario: a huge, sudden power spike as CPU usage soars on every server.

Last July 1, that scenario became real as the “Leap Second” bug caused many Linux servers to get stuck in a loop, endlessly checking the date and time. At the Internet’s busiest data centers, power usage almost instantly spiked by megawatts, stress-testing both the facilities’ power infrastructure and their users’ capacity planning.

The Leap Second “server storm” has prompted Facebook to develop new software for data center infrastructure management (DCIM) to provide a complete view of its infrastructure, spanning everything from the servers to the generators.

For Facebook, the incident also offered insights into the value of flexible power design in its data centers, which kept the status updates flowing as the company nearly maxed out its power capacity.

The Leap Second: What Happened

The leap second bug is a time-handling problem that is a distant relative of the Y2K date issue. A leap second is a one-second adjustment that is occasionally applied to Coordinated Universal Time (UTC) to account for variations in the speed of Earth’s rotation. The 2012 Leap Second was observed at midnight on July 1.
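Leap seconds are awkward for software largely because common time representations have no slot for them. The 2012 leap second was inserted as 23:59:60 UTC on June 30. This short Python illustration demonstrates the representation problem only; it does not reproduce the Linux kernel behaviour described below. POSIX timestamps give 23:59:60 the same value as the following midnight, so systems that insert a leap second typically do it by repeating a second, and Python's `datetime` rejects the value outright:

```python
import calendar
from datetime import datetime, timezone

# POSIX time assumes every day has exactly 86,400 seconds, so the inserted
# second 2012-06-30 23:59:60 UTC has no timestamp of its own.
before = calendar.timegm((2012, 6, 30, 23, 59, 59, 0, 0, 0))
leap = calendar.timegm((2012, 6, 30, 23, 59, 60, 0, 0, 0))
midnight = calendar.timegm((2012, 7, 1, 0, 0, 0, 0, 0, 0))

print(leap - before, leap == midnight)  # 1 True


def datetime_accepts_leap_second() -> bool:
    """Python's datetime type also has no representation for second 60."""
    try:
        datetime(2012, 6, 30, 23, 59, 60, tzinfo=timezone.utc)
    except ValueError:
        return False
    return True


print(datetime_accepts_leap_second())  # False
```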

A number of web sites immediately experienced problems, including Reddit, Gawker, Stumbleupon and LinkedIn. More significantly, air travel in Australia was disrupted as the IT systems for Qantas and Virgin Australia experienced difficulty handling the time adjustment.

What was happening? The additional second caused particular problems for Linux systems that use the Network Time Protocol (NTP) to synchronize their systems with atomic clocks. The leap second caused these systems to believe that time had “expired,” triggering a loop condition in which the system endlessly sought to check the date, spiking CPU usage and power draw.

As midnight arrived in the Eastern time zone, power usage spiked dramatically in Facebook’s data centers in Virginia, as tens of thousands of servers spun up, frantically trying to sort out the time and date. The Facebook web site stayed online, but the bug created some challenges for the data center team.

“We did lose some cabinets when row-level breakers tripped due to high load.”

Facebook wasn’t the only one seeing a huge power surge. German web host Hetzner AG said its power usage spiked by 1 megawatt.

DuPont Fabros builds and manages data centers that house many of the Internet’s premier brands, including Apple, Microsoft, Yahoo and Facebook. As the leap second bug triggered, the power usage surged within one of DFT’s huge data centers in Ashburn, Virginia.

“The building can take it, and that tenant knew it,” said Fateh. “We encourage tenants to go to 99 and 100 percent (of their power capacity). Some have gone over.”

Multi-tenant data centers like DFT’s contain multiple data halls, which provide customers with dedicated space for their IT gear. The ISO-parallel design employs a common bus to conduct electricity for the building, but also has a choke that can isolate a data hall experiencing electrical faults, protecting other users from power problems. But the system can also “borrow” spare capacity from other data halls.

Facebook’s New Focus: DCIM

One outcome of the Leap Second is that Facebook is focused on making the best possible use of both its servers and its power – known in IT as “capacity utilization.” When Furlong looked at the spike in Facebook’s data center power usage, he saw opportunity as well as risk.

“Most of our machines aren’t at 100 percent of capacity, which is why the power usage went up dramatically,” said Furlong. “It meant we had done good, solid capacity planning. But it left us wondering if we were leaving something on the table in terms of utilization.”
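Furlong's point, that low average utilization is what left room for the spike, can be made concrete with a simple linear server power model (a common first-order approximation, not a Facebook model). A sketch with hypothetical numbers: 20,000 servers drawing 100 W idle and 250 W at full load; none of these are Facebook figures.

```python
from dataclasses import dataclass


@dataclass
class ServerPowerModel:
    """Linear power model: draw rises from idle_w to peak_w with CPU load.
    Real servers are not exactly linear; this is an approximation."""
    idle_w: float
    peak_w: float

    def draw(self, utilization: float) -> float:
        if not 0.0 <= utilization <= 1.0:
            raise ValueError("utilization must be between 0 and 1")
        return self.idle_w + (self.peak_w - self.idle_w) * utilization


def fleet_spike_mw(model: ServerPowerModel, servers: int,
                   normal_util: float, spike_util: float = 1.0) -> float:
    """Extra power, in MW, if every server jumps from normal_util to spike_util."""
    per_server_w = model.draw(spike_util) - model.draw(normal_util)
    return per_server_w * servers / 1e6


# Hypothetical fleet: 20,000 servers idling at 100 W, peaking at 250 W.
model = ServerPowerModel(idle_w=100.0, peak_w=250.0)
for util in (0.4, 0.7, 0.9):
    print(util, round(fleet_spike_mw(model, 20_000, util), 2))
```

Under these assumptions the loop prints spikes of 1.8, 0.9 and 0.3 MW: a fleet normally at 40 percent utilization jumps by about 1.8 MW when every CPU pegs at 100 percent, while one at 90 percent adds only 0.3 MW. The headroom that absorbed the spike is the same headroom Furlong suspects was leaving something on the table.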

The goal is to provide a seamless, real-time view of all elements of data center operations, allowing data center managers to quickly assess a range of factors that impact efficiency and capacity.

Tomi Engdahl says:

Facebook’s trillion-edge, Hadoop-based and open source graph-processing engine

http://gigaom.com/2013/08/14/facebooks-trillion-edge-hadoop-based-graph-processing-engine/

Facebook has detailed its extensive improvements to the open source Apache Giraph graph-processing platform. The project, which is built on top of Hadoop, can now process trillions of connections between people, places and things in minutes.
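Apache Giraph is an open source implementation of the vertex-centric, bulk-synchronous model from Google's Pregel. Computation proceeds in supersteps: each vertex reads the messages sent to it in the previous superstep, updates its own value and messages its neighbors, and the job ends when no vertex has anything left to send. The sketch below shows that model on a single machine in Python, using connected components as the example (each vertex ends up labelled with the smallest id in its component). It illustrates the programming model only. It is not Giraph's Java API, and it ignores the partitioning of vertices across Hadoop workers that lets Giraph reach trillions of edges.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def connected_components(edges: Iterable[Tuple[int, int]]) -> Tuple[Dict[int, int], int]:
    """Pregel-style connected components by minimum-label propagation.

    Returns (label per vertex, number of supersteps run)."""
    neighbors = defaultdict(set)
    for a, b in edges:  # treat the graph as undirected
        neighbors[a].add(b)
        neighbors[b].add(a)

    label = {v: v for v in neighbors}   # each vertex starts with its own id
    active = set(neighbors)             # in the first superstep every vertex is active
    supersteps = 0
    while active:
        # Message phase: each active vertex sends its label to its neighbors.
        inbox = defaultdict(list)
        for v in active:
            for n in neighbors[v]:
                inbox[n].append(label[v])
        # Compute phase: adopt the smallest incoming label; vertices that
        # change stay active, the others effectively vote to halt.
        active = set()
        for v, messages in inbox.items():
            smallest = min(messages)
            if smallest < label[v]:
                label[v] = smallest
                active.add(v)
        supersteps += 1  # barrier between supersteps
    return label, supersteps


labels, steps = connected_components([(1, 2), (2, 3), (4, 5)])
print(labels, steps)  # {1: 1, 2: 1, 3: 1, 4: 4, 5: 4} 3
```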

Internet.org « Tomi Engdahl’s ePanorama blog says:

[...] Internet.org is a global partnership between technology leaders, nonprofits, local communities and experts who are working together to bring the internet to the two thirds of the world’s population that doesn’t have it. Internet.org partners will explore solutions in three major opportunity areas: affordability, efficiency, and business models. The plans is to share tools, resources and best practices. The founding members of internet.org — Facebook, Ericsson, MediaTek, Nokia, Opera, Qualcomm and Samsung — will develop joint projects, share knowledge, and mobilize industry and governments to bring the world online. Internet.org is influenced by the successful Open Compute Project. [...]

Tomi Engdahl says:

Facebook Frankenphoto morgue will store cold, dead selfies FOREVER

Thought you’d escaped that hilarious hairdo from six years ago? Fat chance

http://www.theregister.co.uk/2013/09/24/facebook_on_the_rue_morgue/

The term “cold storage” has something of the morgue about it. Dead bodies in refrigerated cabinets, what an image. Facebook is backing the use of a photo morgue, the use of cold storage for old pix.

Old photos are rarely accessed individually, but when one is requested it must be retrieved faster than a tape library could manage: tape cartridges have to be robotically collected from their slots and transported to a drive, then streamed to find the right photo.

The Facebook Open Compute Project has produced a cold storage specification (pdf) which states: “a cold storage server [is] a high capacity, low cost system for storing data that is accessed very infrequently … is designed as a bulk load fast archive.”

What’s the best technology for it? According to the Open Compute Project (OCP):

The typical use case is a series of sequential writes, but random reads. A Shingled Magnetic Recording (SMR) HDD with spin-down capability is the most suitable and cost-effective technology for cold storage.

There are 16 Open Vault storage units per rack. Each unit is a 2U chassis with 30 x 3.5-inch SATA 3Gbit/s hot-plug disk drives inside it.

That’s 1.38 exabytes of raw capacity. Wow!

From the OCP, we learn: “To achieve low cost and high capacity, Shingled Magnetic Recording (SMR) hard disk drives are used in the cold storage system. This kind of HDD is extremely sensitive to vibration; so only 1 drive of the 15 on an Open Vault tray is able to spin at a given time.”
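The drive counts quoted here imply how few disks are ever spinning at once. A sketch of the arithmetic; the two-trays-per-unit figure is inferred from the spec wording about powering on "the two HDDs in the same slot on both trays" (30 drives = 2 trays of 15), and raw capacity is left out because the excerpt does not give a per-drive size:

```python
UNITS_PER_RACK = 16    # Open Vault units per rack, as quoted
DRIVES_PER_UNIT = 30   # 3.5-inch SATA drives per 2U unit, as quoted
TRAYS_PER_UNIT = 2     # inferred: 30 drives = 2 trays x 15 drives
ACTIVE_PER_TRAY = 1    # "only 1 drive of the 15 on an Open Vault tray" spins

drives_per_tray = DRIVES_PER_UNIT // TRAYS_PER_UNIT                     # 15
drives_per_rack = UNITS_PER_RACK * DRIVES_PER_UNIT                      # 480
spinning_per_rack = UNITS_PER_RACK * TRAYS_PER_UNIT * ACTIVE_PER_TRAY   # 32

print(drives_per_tray, drives_per_rack, spinning_per_rack,
      f"{spinning_per_rack / drives_per_rack:.1%}")  # 15 480 32 6.7%
```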

The cold storage spec states:

A HDD spin controller ensures that there is only one active disk in a tray.

• Power-on a specific HDD before accessing it. It may need to spin up if it has recently been spun down.

• Power-off a specific HDD after it finishes access.

• Within an Open Vault system, power-on the two HDDs in the same slot on both trays.
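The spin rules above amount to a small state machine. A toy Python model of them follows; the class and method names are hypothetical, not taken from the OCP cold storage specification, and the sketch assumes a single Open Vault unit with two trays of 15 slots. It refuses to spin up a second slot while one is active, spins the chosen slot on both trays, and wraps each access in power-on/power-off.

```python
from typing import Callable, List, Optional, Tuple, TypeVar

T = TypeVar("T")
Drive = Tuple[int, int]  # (tray index, slot index)


class SpinController:
    """Toy model of the quoted spin rules (hypothetical names):
    - at most one powered-on slot per Open Vault unit,
    - powering on a slot spins up that slot on both trays,
    - drives are powered on before an access and off afterwards."""

    TRAYS = 2
    SLOTS_PER_TRAY = 15

    def __init__(self) -> None:
        self.active_slot: Optional[int] = None

    def power_on(self, slot: int) -> None:
        if not 0 <= slot < self.SLOTS_PER_TRAY:
            raise ValueError(f"slot must be in 0..{self.SLOTS_PER_TRAY - 1}")
        if self.active_slot not in (None, slot):
            # Enforce "only one active disk in a tray".
            raise RuntimeError(f"slot {self.active_slot} is still spinning")
        self.active_slot = slot

    def power_off(self, slot: int) -> None:
        if self.active_slot == slot:
            self.active_slot = None

    def spinning_drives(self) -> List[Drive]:
        if self.active_slot is None:
            return []
        return [(tray, self.active_slot) for tray in range(self.TRAYS)]

    def access(self, slot: int, io: Callable[[List[Drive]], T]) -> T:
        """Power on, run the I/O callback on the spinning drives, power off."""
        self.power_on(slot)
        try:
            return io(self.spinning_drives())
        finally:
            self.power_off(slot)


controller = SpinController()
print(controller.access(7, lambda drives: drives))  # [(0, 7), (1, 7)]
print(controller.spinning_drives())                 # []
```

Raising on a conflicting power-on (rather than silently spinning down the other slot) is a design choice of this sketch; a real controller might queue requests instead.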

This cold storage vault design is specific for an archive of data (photos) with relatively fast access needed.

Facebook’s picture morgue could be the death of mainstream array vendors’ cold storage supply hopes.

Tomi Engdahl says:

Inside the Arctic Circle, Where Your Facebook Data Lives

http://www.businessweek.com/articles/2013-10-03/facebooks-new-data-center-in-sweden-puts-the-heat-on-hardware-makers

Every year, computing giants including Hewlett-Packard (HPQ), Dell (DELL), and Cisco Systems (CSCO) sell north of $100 billion in hardware. That’s the total for the basic iron—servers, storage, and networking products. Add in specialized security, data analytics systems, and related software, and the figure gets much, much larger. So you can understand the concern these companies must feel as they watch Facebook (FB) publish more efficient equipment designs that directly threaten their business. For free.

The Dells and HPs of the world exist to sell and configure data-management gear to companies, or rent it out through cloud services. Facebook’s decision to publish its data center designs for anyone to copy could embolden others to bypass U.S. tech players and use low-cost vendors in Asia to supply and bolt together the systems they need.

Instead of buying server racks from the usual suspects, Facebook designs its own systems and outsources the manufacturing work. In April 2011, the social networking company began publishing its hardware blueprints as part of its so-called Open Compute Project, which lets other companies piggyback on the work of its engineers. The project now sits at the heart of the data center industry’s biggest shift in more than a decade. “There is this massive transition taking place toward what the new data center of tomorrow will look like,” says Peter Levine, a partner at venture capital firm Andreessen Horowitz. “We’re talking about hundreds of billions if not trillions of dollars being shifted from the incumbents to new players coming in with Facebook-like technology.”

The heart of Facebook’s experiment lies just south of the Arctic Circle, in the Swedish town of Luleå. In the middle of a forest at the edge of town, the company in June opened its latest megasized data center, a giant building that comprises thousands of rectangular metal panels and looks like a wayward spaceship. By all public measures, it’s the most energy-efficient computing facility ever built.

The location has a lot to do with the system’s efficiency. Sweden has a vast supply of cheap, reliable power produced by its network of hydroelectric dams. Just as important, Facebook has engineered its data center to turn the frigid Swedish climate to its advantage. Instead of relying on enormous air-conditioning units and power systems to cool its tens of thousands of computers, Facebook allows the outside air to enter the building and wash over its servers, after the building’s filters clean it and misters adjust its humidity. Unlike a conventional, warehouse-style server farm, the whole structure functions as one big device.

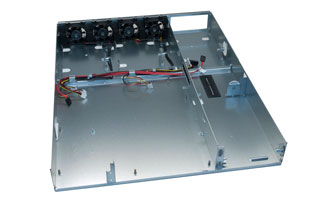

To simplify its servers, which are used mostly to create Web pages, Facebook’s engineers stripped away typical components such as extra memory slots and cables and protective plastic cases. The servers are basically slimmed-down, exposed motherboards that slide into a fridge-size rack. The engineers say this design means better airflow over each server. The systems also require less cooling, because with fewer components they can function at temperatures as high as 85F. (Most servers are expected to keel over at 75F.)

When Facebook started to outline its ideas, traditional data center experts were skeptical, especially of hotter-running servers. “People run their data centers at 60 or 65 degrees with 35-mile-per-hour wind gusts going through them.”

HP has no problem saying Facebook’s designs were a major impetus. “I think Open Compute made us get better,” says HP Vice President Paul Santeler. “It’s amazing to see what Facebook has done, but I think we’ve reacted pretty quickly.”

By contrast, Cisco downplays the threat posed by the project’s designs. Few companies will want to deal with buying such specialized systems that were designed primarily to fit the needs of consumer Web companies, says Cisco spokesman David McCulloch: “Big picture, this is not a trend we view as detrimental to Cisco.”

Dell created a special team six years ago to sell no-frills systems to consumer Web companies, and its revenue has grown by double digits every year since. “It sure doesn’t feel like we’re getting driven out of business.”

The custom hardware designed by Web giants such as Google (GOOG) and Amazon.com (AMZN) has remained closely guarded, but Facebook’s openness has raised interest in its data center models beyond Internet companies. Facebook has provided a road map for any company with enough time and money to build its own state-of-the-art data megafactory.

Wall Street has tried to push mainstream hardware makers toward simpler, cheaper systems for years, but its firms didn’t have enough purchasing clout.

“We have tens of thousands of servers, while Google and Facebook have hundreds of thousands or millions of servers.”

“Facebook is getting us to these common components.”

Tomi Engdahl says:

Facebook hitches skirt, flashes ‘Cisco-slaying’ open network blade

OCP project heralds death of closed, bespoke gear in mega bit barns

http://www.theregister.co.uk/2013/11/12/facebook_cisco_killer_ocp_networking/

Facebook is leading a charge to displace traditional proprietary networking hardware and software in all of its data centers – potentially threatening the livelihood of large incumbents such as Cisco, Juniper, and Brocade in the wider market.

The Open Compute Project Networking scheme was announced by the social network at Interop in May. It involves Facebook banding together various industry participants to create a set of open specifications for networking gear that can be built, sold, and serviced by any company that likes the designs.

“When you go and buy a [networking] appliance you get speeds and feeds and ports that run some protocols and [a command interface] to manage protocols – the best you can do is system integration,” said Najam Ahmad, Facebook’s director of technical operations. “You couldn’t really effect change of the protocol.”

Asking a vendor to adjust networking equipment to suit an application is a lengthy process, he explained, and so Facebook’s scheme is trying to change that by opening up the underlying hardware.

The key way to do this is to sunder the links between networking hardware and software, and eliminate some of the gratuitous differentiation that makes gear from incumbents such as Brocade, Juniper, and Cisco hard to migrate between.

“These closed platforms don’t provide you that visibility or control to do that… that model is breaking, that model needs to break, networking is yet another distributed system,” Ahmad said.

“Any company whose business is solely networking, they typically don’t make money on huge sexy hard problems, they make it on meat of the market problems,” Rivers said. “They do it by creating a real or perceived lock-in to their technology. It might be a certification program. They do that because more often than not they have shareholders they are beholden to.”

Facebook is also skeptical about Cisco’s arguments that networking software needs to be tightly coupled to a Cisco-designed ASIC on a Cisco-designed circuit board to get greater performance.

“I don’t buy that,” Ahmad said.

The modularity and choice of suppliers afforded by the OCP scheme should make it easier for people to rightsize their switch, while still having control over it, he said. “Custom is really hard for an established company,” Frankovsky said.

Tomi Engdahl says:

Facebook Likes Broadcom, Intel, Mellanox Switches

http://www.eetimes.com/document.asp?doc_id=1320052&

Broadcom, Intel, and Mellanox have developed competing specifications for datacenter switches, responding to a call from the Facebook-led Open Compute Project.

OCP called earlier this year for open specs for software-agnostic leaf and spine switches to complement its existing specs for streamlined servers. The specs aim to speed innovation in networking hardware, “help software-defined networking continue to evolve and flourish,” and give big datacenter operators more flexibility in how they create cloud computing systems, said Frank Frankovsky, a Facebook datacenter executive and chair of the OCP Foundation in a blog posted Monday.

The project’s goal is to deliver a switch that can be rebooted to handle different jobs as needed. So far the OCP group has received more than 30 proposals for systems or components.

Intel posted online a full reference design for its proposal for a 48×4 10/40G switch.

Mellanox proposed a switch based on its SwitchX-2 switch and an x86 processor running the Open Network Install Environment (ONIE) software from Cumulus Networks.

Cumulus submitted to OCP its ONIE software.
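For a leaf switch, port counts translate directly into an oversubscription ratio. A sketch of that arithmetic, reading "48×4 10/40G" in the usual way as 48 ports at 10 Gbit/s plus 4 ports at 40 Gbit/s, and assuming the 10G ports face servers while the 40G ports face the spine; both are interpretations, since the excerpt does not spell out the port split or its use:

```python
def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int):
    """Return (downlink Gbit/s, uplink Gbit/s, downlink:uplink ratio)."""
    downlink = down_ports * down_gbps
    uplink = up_ports * up_gbps
    return downlink, uplink, downlink / uplink


# Assumed port split: 48 x 10G toward servers, 4 x 40G toward the spine.
down, up, ratio = oversubscription(48, 10, 4, 40)
print(f"{down} Gbit/s down, {up} Gbit/s up, {ratio:.0f}:1")  # 480 Gbit/s down, 160 Gbit/s up, 3:1
```

A 3:1 ratio means that if every server port ran flat out toward the spine, uplink capacity would cover only a third of the demand; operators pick such ratios based on how much traffic actually leaves the rack.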

Tomi Engdahl says:

Facebook Says Its New Data Center Will Run Entirely on Wind

http://www.wired.com/wiredenterprise/2013/11/facebook-iowa-wind/

Facebook passed another milestone in the green data center arms race today with the announcement that its Altoona, Iowa data center will be powered entirely by wind when it goes online in 2015.

This will be Facebook’s second data center, after its Lulea, Sweden location, to run on all renewable power.

The electricity for the new data center will come from a nearby wind project in Wellsburg, Iowa, according to a blog post from Facebook. Both the wind project, which will be owned and operated by MidAmerican Energy, and the data center are currently under construction.

Green data centers have come a long way since environmental advocacy organization Greenpeace began railing against Facebook in 2010. Following criticism of their energy use patterns, companies like Facebook and Apple vowed to clean up their acts.

But that’s not to say that these data centers are actually environmentally friendly as of yet. Reaching 100 percent renewable energy is tough to pull off. Apple claims that its data centers are powered by 100 percent renewable sources, but it’s using renewable energy credits to “offset” its use of coal and nuclear power.

But one of the biggest impacts of these sorts of projects is a boost in overall availability of renewable energy, says Greenpeace IT analyst Gary Cook.

Tomi Engdahl says:

Ultra-green Europeans scorn Facebook’s data centre blueprint

Efficiency isn’t a dirty word! Crevice is a dirty word, but efficiency isn’t…

http://www.theregister.co.uk/2013/11/21/europeans_scorn_open_compute_facebook/

Facebook’s Open Compute Project has found little overt support in Europe to date, the firm’s data centre boss said today, in part because of those crazy continentals’ obsession with carbon neutrality over efficiency.

Since the project was launched in 2011, OCP groups had started spontaneously in Japan and Korea, he said, and the firm was looking to support them: “When it started it was just us… it has grown beyond our wildest dreams.”

However, he added, “We still don’t see anything in Europe that’s coalescing around OCP.”

Asked by The Register whether this was a problem or a puzzle for Facebook he said: “We would love to see more people support it.”

As to whether there was something intrinsic to the European mindset that stopped data centre operators and/or vendors jumping into bed with Facebook, he said, “I don’t think so.”

But, he added, Europe (as much as you can talk about a single Europe) was very focused on carbon neutrality. Facebook, and by extension OCP, is “more into the efficiency side of the house”.

He continued, “one of the best ways” to move towards carbon neutrality was to increase the efficiency of the data centre.

Typically its front-end servers run at 60 to 70 per cent utilisation.

To date, most announcements from OCP have been about its server and networking blueprints.

Data Center Energy Retrofits « Tomi Engdahl’s ePanorama blog says:

[...] According to Pervilä’s study, large data centers such as Google in Hamina, Yahoo in Lockport and Facebook in Prineville resemble each other: they try to minimize the extra energy consumption, using the [...]

Tomi Engdahl says:

Microsoft contributes cloud server designs to the Open Compute Project

27 Jan 2014 3:20 PM

http://blogs.technet.com/b/microsoft_blog/archive/2014/01/27/microsoft-contributes-cloud-server-designs-to-the-open-compute-project.aspx

On Tuesday, I will deliver a keynote address to 3,000 attendees at the Open Compute Project (OCP) Summit in San Jose, Calif., where I will announce that Microsoft is joining the OCP, a community focused on engineering the most efficient hardware for cloud and high-scale computing via open collaboration. I will also announce that we are contributing to the OCP what we call the Microsoft cloud server specification: the designs for the most advanced server hardware in Microsoft datacenters delivering global cloud services like Windows Azure, Office 365, Bing and others. We are excited to participate in the OCP community and share our cloud innovation with the industry in order to foster more efficient datacenters and the adoption of cloud computing.

The Microsoft cloud server specification essentially provides the blueprints for the datacenter servers we have designed to deliver the world’s most diverse portfolio of cloud services. These servers are optimized for Windows Server software and built to handle the enormous availability, scalability and efficiency requirements of Windows Azure, our global cloud platform. They offer dramatic improvements over traditional enterprise server designs: up to 40 percent server cost savings, 15 percent power efficiency gains and 50 percent reduction in deployment and service times.

Microsoft and Facebook (the founder of OCP) are the only cloud service providers to publicly release these server specifications, and the depth of information Microsoft is sharing with OCP is unprecedented. As part of this effort, Microsoft Open Technologies Inc. is open sourcing the software code we created for the management of hardware operations, such as server diagnostics, power supply and fan control. We would like to help build an open source software community within OCP as well.

Tomi Engdahl says:

Microsoft Joins Open Compute Project, Will Share Server Designs

http://hardware.slashdot.org/story/14/01/28/1335224/microsoft-joins-open-compute-project-will-share-server-designs

Microsoft has joined the Open Compute Project and will be contributing specs and designs for the cloud servers that power Bing, Windows Azure and Office 365. “We came to the conclusion that sharing these hardware innovations will help us accelerate the growth of cloud computing,” said Kushagra Vaid, Microsoft’s General Manager of Cloud Server Engineering.

Tomi Engdahl says:

Facebook: Open Compute Has Saved Us $1.2 Billion

http://www.datacenterknowledge.com/archives/2014/01/28/facebook-open-compute-saved-us-1-2-billion/

Over the last three years, Facebook has saved more than $1.2 billion by using Open Compute designs to streamline its data centers and servers, the company said today. Those massive savings are the result of hundreds of small improvements in design, architecture and process, writ large across hundreds of thousands of servers.

The savings go beyond the cost of hardware and data centers, Facebook CEO Mark Zuckerberg said.

The $1.2 billion in savings serves as a validation of Facebook’s decision to customize its own data center infrastructure and build its own servers and storage. These refinements were first implemented in the company’s data center in Prineville, Oregon and have since been used in subsequent server farms Facebook has built in North Carolina, Sweden and Iowa.

“As you make these marginal gains, they compound dramatically over time.”

“You really should be looking at OCP,” said Parikh. “You could be saving a lot of money.”

Tomi Engdahl says:

Facebook puts 10,000 Blu-ray discs in low-power storage system

http://www.pcworld.com/article/2092420/facebook-puts-10000-bluray-discs-in-lowpower-storage-system.html

If you thought Netflix and iTunes would make optical discs a thing of the past, think again. Facebook has built a storage system from 10,000 Blu-ray discs that holds a petabyte of data and is highly energy-efficient, the company said Tuesday.

It designed the system to store data that hardly ever needs to be accessed, or for so-called “cold storage.” That includes duplicates of its users’ photos and videos that Facebook keeps for backup purposes, and which it essentially wants to file away and forget.

The Blu-ray system reduces costs by 50 percent and energy use by 80 percent compared with its current cold-storage system, which uses hard disk drives.

Facebook cold storage efforts lead to petabyte Blu-ray system

http://www.slashgear.com/facebook-cold-storage-efforts-lead-to-petabyte-blu-ray-system-28314787/

The storage solution is a Blu-ray system, currently a prototype, that uses up to 10,000 Blu-ray discs holding up to one petabyte of data in a single cabinet. This is just the start for the social network, however, which is working toward boosting per-cabinet storage capacity to five petabytes.
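A quick back-of-the-envelope check of what those figures imply per disc. This is a sketch that assumes decimal units (1 PB = 1,000,000 GB); the per-disc numbers are derived here, not stated in the articles, and the five-petabyte line assumes the disc count stays at 10,000, which the source does not say.

```python
# Derived per-disc capacity for the Blu-ray cold-storage cabinet.
# Assumption: decimal units, 1 PB = 1,000,000 GB.
GB_PER_PB = 1_000_000
DISCS_PER_CABINET = 10_000


def per_disc_gb(total_pb: float, discs: int) -> float:
    """Average capacity each disc must hold for the cabinet to reach total_pb."""
    return total_pb * GB_PER_PB / discs


# Prototype: 1 PB spread across 10,000 discs.
current = per_disc_gb(1, DISCS_PER_CABINET)
# Goal: 5 PB per cabinet, assuming (hypothetically) the same 10,000 discs.
target = per_disc_gb(5, DISCS_PER_CABINET)

print(f"1 PB over {DISCS_PER_CABINET:,} discs: {current:.0f} GB per disc")
print(f"5 PB over {DISCS_PER_CABINET:,} discs: {target:.0f} GB per disc")
```

The first figure, 100 GB per disc, matches the capacity of a triple-layer BDXL disc; reaching five petabytes would need either denser media or more discs per cabinet.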

Tomi Engdahl says:

Microsoft Open Sources Its Internet Servers, Steps Into the Future

http://www.wired.com/wiredenterprise/2014/01/microsoft-open-compute-servers/

For nearly two years, tech insiders whispered that Microsoft was designing its own computer servers. Much like Google and Facebook and Amazon, the voices said, Microsoft was fashioning a cheaper and more efficient breed of server for use inside the massive data centers that drive its increasingly popular web services, including Bing, Windows Azure, and Office 365.

Microsoft will not only lift the veil from its secret server designs. It will ‘open source’ these designs, sharing them with the world at large.

As it released its server designs, the company also open sourced the software it built to manage the operation of these servers.

A company like Quanta is the main beneficiary of the Open Compute movement.

Thus, Dell and HP will sell machines based on Microsoft’s designs, just as they’ve backed similar designs from Facebook.

This morning, Dell revealed it has also embraced the Project’s effort to overhaul the world’s network gear. Web giants such as Facebook are moving toward “bare metal” networking switches.

The Googles and the Facebooks and the Amazons started this movement. But when Microsoft and Dell get involved, it’s proof the rest of the world is following.

Tomi Engdahl says:

Facebook Attracts Server Startups

http://www.eetimes.com/document.asp?doc_id=1320785&

Servergy Inc. and Rex Computing debuted low power server architectures using Power and Adapteva processors, respectively, at the Facebook-led Open Compute Summit here. The two startups are the latest vendors leveraging the push for low power in big datacenters to disrupt Intel’s dominance in servers.

Servergy showed its 32-bit CT-1000 server using the eight-core Freescale P4080, and said it will roll a 64-bit system later this year using the Freescale P4240. Rex showed a prototype server using 32 ARM Cortex A9 cores embedded in Xilinx Zynq FPGAs working beside Adapteva Epiphany III chips as co-processors. It plans a 64-bit version using ARM Cortex A53 cores and Epiphany IV co-processors.

As many as a dozen companies are working on ARM server SoCs in hopes of gaining an edge over the Atom-based SoCs Intel is now shipping. Servergy and Rex both come at the market from high-tech left field with their offerings.

Tomi Engdahl says:

Data centers today are increasingly filled with custom servers from small manufacturers, and this has begun to show in the sales of traditional server makers such as Dell and HP.

According to research firm IDC, global server market revenue fell 4.4 percent year over year to 14.2 billion dollars, or about 10.4 billion euros. Over the same period, sales by smaller server manufacturers rose 47.2 percent.
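To put the IDC decline in absolute terms, the year-earlier figure can be backed out from the quoted revenue and percentage change. This is a minimal sketch; the implied prior-year number is derived arithmetic, not a figure IDC is quoted as reporting here.

```python
# Back out the year-earlier revenue implied by a year-over-year percentage change.
def prior_year(current: float, pct_change: float) -> float:
    """Invert current = prior * (1 + pct_change / 100) to recover prior."""
    return current / (1 + pct_change / 100)


revenue_now_bn_usd = 14.2   # quoted IDC figure, billions of dollars
change_pct = -4.4           # quoted year-over-year change, percent

implied = prior_year(revenue_now_bn_usd, change_pct)
print(f"Implied year-earlier revenue: {implied:.2f} billion dollars")
```

With these inputs the implied year-earlier market is roughly 14.85 billion dollars, so the 4.4 percent drop amounts to about 0.65 billion dollars.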

Low-volume manufacturers such as Quanta and Inventec build servers that online giants such as Google and Facebook design for their own use. Custom servers make it easier to build out massive data center capacity, and they are also cheaper.

The custom server market seems to hurt mainly the sales of the three largest server manufacturers: IBM, HP and Dell.

Source: Tietoviikko

http://www.tietoviikko.fi/kaikki_uutiset/mittatilauspalvelimet+syovat+isojen+markkinoita/a971130

Tomi Engdahl says:

Masters of Their Own Destiny

Why today’s giants build the tech they need to stay on top.

http://www.fastcompany.com/3025636/technovore/masters-of-their-own-destiny

When Facebook was in its megagrowth phase a few years ago, it realized that the big server companies couldn’t make what it needed to serve up all those cute baby pictures and endless event requests. So Facebook set up a skunk-works project and designed its own servers specifically to make Facebook services zoom at the lowest possible cost. It sent the plans to an Asian server maker named Quanta to build these streamlined boxes cheaply.

Facebook didn’t have designs on the $55 billion server market. It took matters in its own hands and ended up creating a competitor that Dell, HP, and IBM didn’t see coming. Facebook, thanks to its sheer size and complexity, is the standard-bearer for the data-rich, highly networked future of information. When it designs machines to handle its workloads, it’s creating the next-generation server. The big-hardware makers let the tail wag the dog.

Tomi Engdahl says:

Inside Facebook’s engineering labs: Hardware heaven, HP hell – PICTURES

Better duck, Amazon… Hardware drone incoming

http://www.theregister.co.uk/2014/03/05/facebook_lab_tour/

Facebook’s hardware development lab is either a paradise, a business opportunity, or a hell, depending on your viewpoint.

If you’re a hardware nerd who loves fiddling with data centre gear, ripping out extraneous fluff, and generally cutting the cost of your infrastructure, then the lab is a wonderful place where your dreams are manufactured.

If you’re an executive from HP, Dell, Lenovo, Cisco, Brocade, Juniper, EMC or NetApp, the lab is likely to instill a sense of cold, clammy fear, for in this lab a small team of diligent Facebook employees is working to make servers and storage arrays (and now networking switches) which undercut your own products.

Tomi Engdahl says:

Facebook’s Open Compute guru Frank Frankovsky leaves to build optical storage startup

http://gigaom.com/2014/03/25/facebooks-open-compute-guru-frank-frankovsky-leaves-to-build-optical-storage-startup/

Frank Frankovsky, one of the men responsible for Facebook’s foray into building hardware, has left the social networking giant to form his own startup.

Tomi Engdahl says:

Hot naked Asian racks in Cali: El Reg snaps Open Compute servers for all

Facebook’s system designs roll off assembly lines for world+dog

29 Jan 2014

http://www.theregister.co.uk/2014/01/29/facebook_ocp_gallery/

Manufacturers in Asia are taking Facebook’s Open Compute server blueprints, tweaking the designs, and selling the manufactured kit to the masses, The Register has learned.

Here at the Open Compute Project (OCP) Summit in San Jose, California, Facebook has spent two days talking up the benefits of its customizable, low-cost, liberally licensed data-center computers.

Though the Open Compute Project is nearly three years old, there are not yet many companies using the designs at scale, aside from a few favored reference firms like Rackspace, Bloomberg, and Goldman Sachs. But judging from the show-floor, canny companies are gearing up to sell the kit on the expectation of greater demand in the future.