Venerable Ethernet provides the backbone of the internet. Over its forty years of existence, Ethernet has risen to complete dominance in local area networks. The first experimental versions of Ethernet appeared in 1972 (patented 1978), and commercialization started in the 1980s.

At first Ethernet technology remained primarily focused on connecting up systems within a single facility, rather than being called upon for the links between facilities, or the wider Internet. Ethernet at short distances has primarily used copper wiring. Fiber optic connections have also been available for the transmission of Ethernet over considerable distances for nearly two decades.

Today, Ethernet is everywhere. It’s evolved from a 2.93-Mb/s and then 10-Mb/s coax-based technology to one that offers multiple standards using unshielded twisted pair (UTP) and fiber-optic cable with speeds over 100 Gb/s. Ethernet, standardized by the IEEE as “802.3,” now dominates the networking world. And the quest for more variations continues onward.

Ethernet is on the way to total networking domination. Ethernet is all over the place, and most of us use it every day. The first coax-based Ethernet LAN was conceived in 1973 by Bob Metcalfe and David Boggs. Since then Ethernet has grown to the point where pretty much all local area networking goes through it (even most WiFi hot spots are wired with Ethernet). It’s hard to overestimate the importance of Ethernet to networking over the past 25 years, during which we have seen Ethernet come to dominate the networking industry. Ethernet’s future could be even more golden than its past.

Ethernet has pretty much covered office networking and the backbones of industrial networks. In industrial settings there are still applications where Ethernet is only now arriving. The article “Ethernet is on the Way to Total Networking Domination” says that single-pair Ethernet makes possible cloud-to-sensor connections that enable full TCP/IP, and that it’s revolutionizing factory automation. This technology will expand the use of Ethernet in industrial applications. Several different single-pair Ethernet (SPE) standards seek to wrap up full networking coverage by addressing the Internet of Things (IoT), and specifically the industrial Internet of Things (IIoT) and Industry 4.0 application space.

Traditional copper Ethernet

Ethernet started with 50-ohm coax in 1973. In the 1990s, twisted pair Ethernet with RJ-45 connectors and fiber became the dominant media choices. Modern Ethernet versions use a variety of cable types.

Mainstream use of twisted pair Ethernet started with Cat5 cable, which has long been used for 100 Mbps or slower links, while Cat5e and newer cables suit faster speeds. Both Cat5e and Cat6 can handle speeds of up to 1000 Mbps, or one gigabit per second.

Today, the most common copper cables are Cat5e, Cat6, and Cat6a. Twisted pair is now the main medium, and with mainstream devices the current cable categories (Cat6A, Cat7, Cat8, etc.) can handle speeds from 10 Mb/s to 10 Gb/s, typically up to 100 meters. All those physical layers require a balanced twisted pair with an impedance of 100 Ω. Standard category cable has four wire pairs, and different Ethernet standards use two of them (10BASE-T, 100BASE-TX) or all four pairs (1000BASE-T and faster).

Many different modes of operation (10BASE-T half-duplex, 10BASE-T full-duplex, 100BASE-TX half-duplex, etc.) exist for Ethernet over twisted pair, and most network adapters support several of them. Autonegotiation is required in order to make a working 1000BASE-T connection. Ethernet over twisted-pair standards up through Gigabit Ethernet define both full-duplex and half-duplex communication. However, half-duplex operation at gigabit speed is not supported by any existing hardware.

In the past, enterprise networks used 1000BASE-T Ethernet at the access layer for 1 Gb/s connectivity over typically Cat5e or Cat6 cables. But the advent of Wi-Fi 6 (IEEE 802.11ax) wireless access points has triggered a dire need for faster uplink rates between those access points and wiring closet switches preferably using existing Cat5e or Cat6 cables. As a result, the IEEE specified a new transceiver technology under the auspices of the 802.3bz standard, which addresses these needs. The industry adopted the nickname “mGig,” or multi-Gigabit, to designate those physical-layer (PHY) devices that conform to 802.3bz (capable of 2.5 Gb/s and 5 Gb/s) and 802.3an (10 Gb/s). mGig transceivers fill a growing requirement for higher-speed networking using incumbent unshielded twisted-pair copper cabling. The proliferation of mGig transceivers, which provide Ethernet connectivity with data rates beyond 1 Gb/s over unshielded copper wires, has brought with it a new danger: interference from radio-frequency emitters that can distort and degrade data-transmission fidelity.

Cat8 goes faster still: up to 40 Gb/s over distances of up to 30 meters. Category 8, or just Cat8, is the latest standardized category of copper Ethernet cable and the fastest yet. It supports bandwidth up to 2 GHz (four times the bandwidth of standard Cat6a) and data rates of up to 40 Gbps. Cat8 cable uses shielded twisted pair (STP) construction in which each of the wire pairs is separately shielded. Shielded foil twisted pair (S/FTP) construction includes shielding around each pair of wires within the cable to reduce near-end crosstalk (NEXT) and braiding around the group of pairs to minimize EMI/RFI line noise in crowded network installations. Cat8 is ideal for switch-to-switch links in data centers and server rooms, where 25GBASE-T and 40GBASE-T networks are common. Its RJ45 ends connect to standard network equipment such as switches and routers, and the cable supports Power over Ethernet (PoE). Cat8 is designed for a maximum range of 98 ft (30 m). If you want faster speeds or longer distances, there are various fiber interfaces.

Power over Ethernet

Power over Ethernet (PoE) offers convenience, flexibility, and enhanced management capabilities by enabling power to be delivered over the same CAT5 or higher capacity cabling as data. PoE technology is especially useful for powering IP telephones, wireless LAN access points, cameras with pan tilt and zoom (PTZ), remote Ethernet switches, embedded computers, thin clients and LCDs.

The original IEEE 802.3af-2003 PoE standard provides up to 15.4 W of DC power (minimum 44 V DC and 350 mA) to each device. The IEEE standard for PoE requires Category 5 cable or higher (it can operate with Category 3 cable at low power levels). The updated IEEE 802.3at-2009 PoE standard, also known as PoE+ or PoE plus, provides up to 25.5 W at the powered device (30 W at the power source).
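The 15.4 W figure follows directly from the quoted voltage and current, and the same arithmetic shows why less power reaches the device than leaves the source. The sketch below is only an illustration: the function names are made up for this example, and the 20 ohm loop resistance is an assumed worst-case value for a long cable run, not a number taken from the text above.

```python
# Sketch: PoE power arithmetic with the 802.3af figures quoted above.

def dc_power_watts(voltage_v: float, current_a: float) -> float:
    """DC power in watts: P = V * I."""
    return voltage_v * current_a


def cable_loss_watts(current_a: float, loop_resistance_ohm: float) -> float:
    """Resistive loss in the cable in watts: P_loss = I^2 * R."""
    return current_a ** 2 * loop_resistance_ohm


# Figures from the text: minimum 44 V DC and 350 mA.
source_power = dc_power_watts(44.0, 0.350)

# ASSUMPTION: 20 ohm loop resistance, an illustrative worst case for a long run.
loss = cable_loss_watts(0.350, 20.0)

print(f"Power at the source: {source_power:.2f} W")
print(f"Lost in the cable:   {loss:.2f} W")
print(f"Left for the device: {source_power - loss:.2f} W")
```

Under these assumptions about 2.45 W is dissipated in the cable, which is why the power actually available to a device is lower than the headline number of each PoE standard.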

IEEE 802.3bt is the 100W Power over Ethernet (PoE) standard. IEEE 802.3bt calls for two power variants: Type 3 (60W) and Type 4 (100W). This means that you can now carry close to 100W of electricity over a single cable to power devices. IEEE 802.3bt takes advantage of all four pairs in a 4-pair cable, spreading current flow out among them. Power is transmitted along with data, and is compatible with data rates of up to 10GBASE-T.

Advocates of PoE expect PoE to become a global long term DC power cabling standard and replace a multiplicity of individual AC adapters, which cannot be easily centrally managed. Critics of this approach argue that PoE is inherently less efficient than AC power due to the lower voltage, and this is made worse by the thin conductors of Ethernet.

Cat5e cables usually run between 24 and 26 AWG, while Cat6, and Cat6A usually run between 22 and 26 AWG. When shopping for Cat5e, Cat6, or Cat6a network cables, you might notice an AWG description printed on the cable jacket such as: 28AWG, 26 AWG, or 24AWG. AWG stands for American wire gauge, a system for defining the diameter of the conductors of a wire which makes up a cable. The larger the wire gauge number, the thinner the wire and the smaller the diameter.
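To make the gauge numbers concrete, here is a small sketch that converts AWG numbers to conductor diameter using the standard AWG definition (36 AWG is 0.127 mm, and diameter grows by a factor of 92^(1/39) per gauge step). The helper names are invented for this example, and the resistance comparison only assumes that resistance per length is inversely proportional to the conductor cross-section.

```python
# Sketch: AWG number to conductor diameter, plus relative resistance.

def awg_diameter_mm(gauge: int) -> float:
    """Conductor diameter in millimeters for an AWG number (standard AWG formula)."""
    return 0.127 * 92 ** ((36 - gauge) / 39)


def relative_resistance(gauge: int, reference_gauge: int) -> float:
    """Resistance per unit length relative to a reference gauge.

    Resistance is inversely proportional to cross-sectional area,
    which scales with the square of the diameter.
    """
    return (awg_diameter_mm(reference_gauge) / awg_diameter_mm(gauge)) ** 2


for gauge in (22, 24, 26, 28):
    print(
        f"{gauge} AWG: {awg_diameter_mm(gauge):.3f} mm, "
        f"{relative_resistance(gauge, 24):.2f}x the resistance of 24 AWG"
    )
```

By this calculation a 28 AWG conductor has roughly 2.5 times the resistance of a 24 AWG conductor of the same length, which is worth keeping in mind for PoE.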

One of the newest types of Ethernet cable on the market, slim run patch cables, uses 28 AWG wire. This allows these patch cords to be at least 25% smaller in diameter than standard Cat5e, Cat6, and Cat6a Ethernet cables. Smaller cable diameter is beneficial for high-density networks and data centers.

The downside of 28 AWG cable is higher resistance and power loss in PoE applications. Before February 2019, the short answer to “Can 28 AWG patch cords be used in PoE applications?” was “no.” Today, however, the answer is “yes”! 28 AWG patch cords can now be used to support power delivery.

28 AWG cables have been approved for power delivery and higher PoE levels, provided there is enough airflow around the cable. According to TSB-184-A-1, an addendum to TSB-184-A, 28 AWG patch cabling can support today’s higher PoE levels, up to 60W.

To stay within the recommended temperature rise, 28 AWG cables must be grouped into small bundles. Keeping 28 AWG PoE patch cords in bundles of 12 or fewer limits cable heating, so that you stay within the suggested maximum temperature rise of 15 degrees Celsius. Per TSB-184-A-1, 28 AWG cables in bundles of up to 12 can be used for PoE applications up to 30W. In PoE applications using between 30W and 60W of power, spacing of 1.5 inches between bundles of 12 cables is recommended. Anything above 60W over 28 AWG cable requires authorization from the relevant authority in the USA.
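The bundling rules above can be written down as a small lookup, which can be handy in a cabling checklist script. This is only a sketch of the guidance as summarized in this article; the function name and the wording of the returned strings are my own, and it is no substitute for reading TSB-184-A-1 itself.

```python
# Sketch: 28 AWG PoE bundling guidance as summarized in the text above.

def bundling_guidance_28awg(poe_watts: float) -> str:
    """Return the bundling recommendation for 28 AWG patch cords at a PoE level."""
    if poe_watts <= 0:
        raise ValueError("PoE power must be positive")
    if poe_watts <= 30:
        return "bundles of up to 12 cables"
    if poe_watts <= 60:
        return "bundles of up to 12 cables, 1.5 inches between bundles"
    return "above 60 W: authorization required"


for watts in (15.4, 30, 60, 90):
    print(f"{watts} W -> {bundling_guidance_28awg(watts)}")
```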

Single pair Ethernet

Several different single-pair Ethernet (SPE) standards seek to wrap up full networking coverage by addressing the Internet of Things (IoT), and specifically the industrial Internet of Things (IIoT) and Industry 4.0 application space.

Single Pair Ethernet, or SPE, uses a single pair of copper wires to transmit data at speeds of up to 1 Gb/s over short distances. In addition to data transfer, SPE can optionally deliver power at the same time using Power over Data Line (PoDL). This could be a major step forward in factory automation, building automation, smart cars, and railways. SPE allows legacy industrial networks to migrate to Ethernet network technology whilst delivering power and data to and from edge devices.

Traditional computer-oriented Ethernet normally comes in two-pair and four-pair variants. Different variants of Ethernet are the most common industrial link protocols. Until now they have required 4 or 8 wires, but an SPE link needs only two wires (one pair). Using a single pair simplifies the wiring and can allow some old industrial wiring to be reused for Ethernet. SPE offers additional benefits such as lighter and more flexible cables, and its space requirements and assembly costs are lower than with traditional Ethernet wiring. Those are the reasons why the technology is of interest to many.

The 10BASE-T1, 100BASE-T1 and 1000BASE-T1 single-pair Ethernet physical layers are intended for industrial and automotive applications or as optional data channels in other interconnect applications.

Automotive Ethernet, standardized as 802.3bw or 100BASE-T1, adapts Ethernet to the hostile automotive environment using a single pair.

There is also a long-distance 10BASE-T1L standard that supports distances of up to one kilometer at 10 Mb/s. In building automation, long reach is often needed for HVAC, fire safety, and equipment like elevators. The 10BASE-T1 standard has two parts. The main offering is 10BASE-T1L, the long-reach option covering up to 1 km; the connection is point-to-point (p2p) with full-duplex capability. The other is 10BASE-T1S, the short-reach option, which provides p2p half-duplex coverage to 25 meters and includes multidrop possibilities.

All those physical layers require a balanced twisted pair with an impedance of 100 Ω. The cable must be capable of transmitting 600 MHz for 1000BASE-T1 and 66 MHz for 100BASE-T1.

Single-pair Ethernet defines its own connectors:

- IEC 63171-1 “LC”: This is a 2-pin connector with a similar locking tab to the modular connector, if thicker.

- IEC 63171-6 “industrial”: This standard defines 5 2-pin connectors that differ in their locking mechanisms and one 4-pin connector with dedicated pins for power. The locking mechanisms range from a metal locking tab to M8 and M12 connectors with screw or push-pull locking. The 4-pin connector is only defined with M8 screw locking.

Fiber Ethernet

When most people think of an Ethernet cable, they probably imagine a copper cable, because copper has been around the longest. A more modern take on the Ethernet cable is fiber optic. Instead of electrical currents, fiber optic cables carry signals as pulses of light, which allows much higher data rates. In fact, fiber optic cables support modern 10 Gbps networks with ease. Fiber has been an option for Ethernet for decades: the first standard was 10 Mbit/s FOIRL in 1987. The fastest current PHYs run at 400 Gbit/s, and development of 800 Gbit/s and 1.6 Tbit/s started in 2021.

The advantage most often cited for fiber optic cabling, and for very good reason, is bandwidth. Fiber optic Ethernet can easily handle the demands of today’s 10 Gbps or 100 Gbps networks and has the capacity for much more. Fiber optic cables can run without significant signal loss over distances from kilometers up to tens of kilometers, depending on the fiber type and equipment used.

The cost of fiber has come down drastically in recent years. Fiber optic cable is usually still a little more expensive than copper, but when you factor in everything else involved in installing a network the prices are roughly comparable.

Fiber is immune to electrical interference problems because fiber optic cable doesn’t carry electricity; it carries light. Interference from other devices that would disturb a copper link has little effect on it.

Fiber optic cables are sometimes advertised as more secure than copper cables because light signals are more difficult to tap. It is true that light signals are slightly more difficult to tap than copper cable signals, but with the right tools fiber can nowadays be tapped as well.

Fiber has won the battle in backbone networks and on long connections, but there is still a place for copper at shorter distances. Nearly every computer and laptop sold today has a network interface with a built-in port ready to accept a UTP copper cable, while a potentially expensive converter or fiber card is required to make a fiber optic connection.

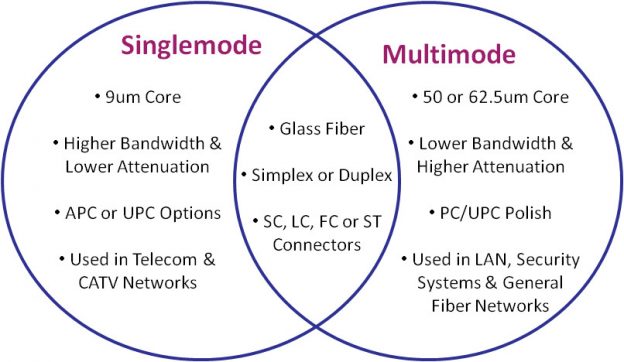

Standard fiber-optic cables have a quartz glass core and cladding. Two fiber optic cable types are widely adopted for data transfer today: single-mode fiber and multimode fiber.

A single-mode optical fiber has a small core and allows only one mode of light to propagate at a time, which makes it well suited to high-speed, long-distance applications. Single-mode fiber has a core diameter between 8 and 10.5 micrometers and a cladding diameter of 125 micrometers. OS1 and OS2 are the standard single-mode fiber types (size 9/125 µm), used at wavelengths of 1310 nm and 1550 nm, with a maximum attenuation of 1 dB/km (OS1) and 0.4 dB/km (OS2). SMF is used in long-haul applications with transmission distances of up to 100 km without a repeater. Typical transmission distances are 10 to 40 kilometers. Single-mode hardware used to be very expensive, but prices have come down quickly, and single mode is now very commonly used.
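The attenuation figures above translate directly into a simple loss estimate for a planned fiber run. The following is a minimal sketch under stated assumptions: the function names are invented for this example, and the 6 dB power budget and 1 dB of connector loss are hypothetical values chosen only to show the arithmetic. Real numbers come from the transceiver data sheet.

```python
# Sketch: worst-case fiber loss from the per-kilometer attenuation quoted above.

# Maximum attenuation in dB per kilometer, as given in the text.
MAX_ATTENUATION_DB_PER_KM = {"OS1": 1.0, "OS2": 0.4}


def fiber_loss_db(fiber_type: str, length_km: float, extra_loss_db: float = 0.0) -> float:
    """Worst-case loss: attenuation * length, plus connector and splice losses."""
    return MAX_ATTENUATION_DB_PER_KM[fiber_type] * length_km + extra_loss_db


def link_is_feasible(
    fiber_type: str, length_km: float, power_budget_db: float, extra_loss_db: float = 0.0
) -> bool:
    """True if the estimated loss fits within the transceiver power budget."""
    return fiber_loss_db(fiber_type, length_km, extra_loss_db) <= power_budget_db


# ASSUMPTION: hypothetical 6 dB power budget and 1 dB of connector loss.
for fiber in ("OS1", "OS2"):
    loss = fiber_loss_db(fiber, 10.0, extra_loss_db=1.0)
    feasible = link_is_feasible(fiber, 10.0, power_budget_db=6.0, extra_loss_db=1.0)
    print(f"{fiber}, 10 km: {loss:.1f} dB loss, feasible: {feasible}")
```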

Multimode optical fiber has a larger core diameter (62.5 or 50 micrometers) designed to carry multiple light rays, or modes, at the same time. It is mostly used for communication over short distances. Multimode fiber (MMF) typically uses a core/cladding diameter of 50/125 micrometers and offers less reach, approximately 2 km or less, because the larger core increases dispersion.

Depending on your requirements, when going to use fiber, you’re probably first looking for one of these commonly used interfaces: 1000BASE-SX (1 Gbit/s over up to 550 m of OM2 multi-mode fiber), 1000BASE-LX (1 Gbit/s over up to 10 km of single-mode fiber), 10GBASE-SR (10 Gbit/s over up to 400 m of OM4 MMF), 10GBASE-LR (10 Gbit/s over up to 10 km of SMF).
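The four interfaces listed above can be turned into a tiny selection helper. This is just a sketch built from the reach figures quoted in this article; the data structure and function name are invented for illustration, and a real design should check the transceiver and fiber specifications.

```python
# Sketch: pick a fiber interface from the four options listed in the text.
from typing import NamedTuple, Optional


class FiberInterface(NamedTuple):
    name: str
    speed_gbps: int
    max_reach_m: int
    fiber: str


# Reach figures as quoted in the text above.
INTERFACES = [
    FiberInterface("1000BASE-SX", 1, 550, "OM2 multi-mode"),
    FiberInterface("1000BASE-LX", 1, 10_000, "single-mode"),
    FiberInterface("10GBASE-SR", 10, 400, "OM4 multi-mode"),
    FiberInterface("10GBASE-LR", 10, 10_000, "single-mode"),
]


def pick_interface(speed_gbps: int, distance_m: float) -> Optional[FiberInterface]:
    """Return the shortest-reach interface that covers the distance, or None."""
    candidates = [
        i for i in INTERFACES
        if i.speed_gbps == speed_gbps and i.max_reach_m >= distance_m
    ]
    return min(candidates, key=lambda i: i.max_reach_m, default=None)


for speed, distance in ((1, 300), (10, 300), (10, 5_000), (10, 20_000)):
    choice = pick_interface(speed, distance)
    label = f"{choice.name} over {choice.fiber}" if choice else "nothing in this list"
    print(f"{speed} Gbit/s over {distance} m: {label}")
```

Preferring the shortest reach that still covers the distance is a design choice made here on the assumption that short-reach multimode optics are usually the cheaper option.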

There are many other PHY standards for various data rates and distances, as well as many common non-standard variants for even longer distances. The required optical transceivers are usually SFP (1G) or SFP+ (10G) modules plugged into your network hardware. Switches and network adapters with SFP slots let you build custom high-speed fiber optic Ethernet networks by plugging in a suitable type of SFP module. External media converters for devices without an SFP slot are also available. A fiber media converter, also known as a fiber to Ethernet converter, allows you to convert typical copper Ethernet cable (e.g., Cat 6a) to fiber and back again.

100G (100 Gb/s) Ethernet has had a good run as the backbone technology behind the cloud, but the industry is moving on. Demand for bandwidth is expected to explode in the 5G/mmWave era that’s now upon us. Modern 400G and 800G test platforms validate the cloud’s Ethernet backbone, ensuring support for the massive capacity demands of today and tomorrow. Many service providers and data centers, for various reasons, skipped over 400G Ethernet implementations and are looking toward 800G Ethernet for their next network transport overhaul. Adoption of 400G is still happening, but the growth of 800G will eclipse it before long.

In the future, the speeds of Ethernet networks will increase. Even after the 25, 40, 50 and 100 gigabit versions, more speed is needed. For example, growing traffic in data centers requires 100G or even faster connections over long distances. Whatever the long-term speed needs turn out to be, 200G and 400G are best suited for near-term needs. The large cloud data centers on the Internet have moved to 100GbE, 200GbE and 400GbE for trunk connections. They also require strong encryption, and the backbone networks of 5G networks additionally need accurate time synchronization. Media Access Control security (MACsec), defined by IEEE standard 802.1AE, provides point-to-point security on Ethernet links. IEEE 802.1X is an IEEE standard for port-based Network Access Control (PNAC).

Terabit rates do not yet make sense to implement. The standardization organization OIF (Optical Internetworking Forum) has worked on connection speeds of up to 800 gigabits. The Ethernet Technology Consortium proposed an 800 Gbit/s Ethernet PCS variant based on tightly bundled 400GBASE-R in April 2020. In December 2021, IEEE started the P802.3df Task Force to define variants for 800 and 1600 Gbit/s over twinaxial copper, electrical backplanes, single-mode and multi-mode optical fiber along with new 200 and 400 Gbit/s variants using 100 and 200 Gbit/s lanes. Lightwave magazine expects 800G Ethernet transceivers to become the most popular module in mega data centers by 2025. There are already test instruments designed to validate 1.6T designs.

There is also one fiber type I have not mentioned yet: plastic optical fiber (POF). It has emerged as a low-cost alternative to traditional glass optical fiber as well as to twisted pair copper and coaxial cables in industrial, office, home and automotive networks. POF typically utilizes a polymethylmethacrylate (PMMA) core and a fluoropolymer cladding. Glass fiber-optic cable offers lower attenuation than its plastic counterpart, but POF provides a more rugged cable, capable of withstanding a tighter bend radius.

POF has generally been used in niche applications where its advantages outweigh its limited bandwidth and relatively short maximum distance (only tens of meters). Several manufacturers currently offer fiber optic transceivers for 100 Mbps Ethernet over plastic optical fiber, and 1 Gbit/s versions also exist. Advances in LED technology and Vertical Cavity Surface Emitting Laser (VCSEL) technology are enabling POF to support data rates of 3 Gbps and above.

POF offers many benefits to the user: it is lightweight, robust, cheap and easy to install, and the use of 650 nm red LED light makes it completely safe and easier to diagnose, as red light is visible to the human eye. Several different connectors are used for POF. There is also a connector-less option called Optolock, where you simply cut the plastic fiber with a knife, separate the fibers, insert them into the housing and lock them in place.

Special demands of industrial networks for Ethernet

Industrial networks need to be durable. Industrial applications in the field often need more durable connectors than the traditional RJ-45 connector of office networks.

Here is a list of some commonly used industrial Ethernet connector types:

- 8P8C modular connector: For stationary uses in controlled environments, from homes to datacenters, this is the dominant connector. Its fragile locking tab otherwise limits its suitability and durability. Bandwidths supporting up to Cat 8 cabling are defined for this connector format.

- M12X: This is the M12 connector designated for Ethernet, standardized as IEC 61076-2-109. It is a 12mm metal screw that houses 4 shielded pairs of pins. Nominal bandwidth is 500MHz (Cat 6A). The connector family is used in chemically and mechanically harsh environments such as factory automation and transportation. Its size is similar to the modular connector.

- ix Industrial: This connector is designed to be small yet strong. It has 10 pins and a different locking mechanism than the modular connector. Standardized as IEC 61076-3-124, its nominal bandwidth is 500MHz (Cat 6A).

In addition to those there are applications that use other versions of M12 and smaller M8 connectors.

Current industrial trends like Industrie 4.0 and the Industrial Internet of Things lead to an increase in network traffic in ever-growing converged networks. Many industrial applications need reliable and low latency communications. Many industries require deterministic Ethernet, and Industrial Automation is one of them. The automation industry has continuously sought solutions to achieve fast, deterministic, and robust communication. Currently, several specialized solutions are available for this purpose, such as PROFINET IRT, Sercos III, and Varan. TSN can help standardize real-time Ethernet across the industry.

TSN refers to a set of IEEE 802 standards that make Ethernet deterministic by default. TSN is an upcoming technology that sits on Layer 2 of the ISO/OSI model. It adds definitions to guarantee determinism and throughput in Ethernet networks, and it will provide standardized mechanisms for the concurrent use of deterministic and non-deterministic communication. AVB/TSN can handle rate-constrained traffic, where each stream has a bandwidth limit defined by a minimum inter-frame interval and a maximum frame size, and time-triggered traffic, where each frame is sent at an exactly defined time. Low-priority traffic is passed on a best-effort basis, with no timing or delivery guarantees. Time-sensitive traffic has several priority classes.
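The bandwidth limit of a rate-constrained stream follows from the two parameters named above: at most one frame of the maximum size per minimum interval. The sketch below illustrates that arithmetic only. The function names and example stream parameters are hypothetical, and per-frame overhead such as preamble and inter-frame gap is deliberately ignored.

```python
# Sketch: bandwidth limit of rate-constrained streams (frame overhead ignored).
from typing import Iterable, Tuple


def stream_bandwidth_limit_bps(max_frame_bytes: int, min_interval_s: float) -> float:
    """Upper bound in bit/s: one maximum-size frame per minimum interval."""
    if max_frame_bytes <= 0 or min_interval_s <= 0:
        raise ValueError("frame size and interval must be positive")
    return max_frame_bytes * 8 / min_interval_s


def streams_fit(streams: Iterable[Tuple[int, float]], link_rate_bps: float) -> bool:
    """True if the summed stream limits stay within the link rate."""
    total = sum(stream_bandwidth_limit_bps(size, interval) for size, interval in streams)
    return total <= link_rate_bps


# ASSUMPTION: two hypothetical streams given as (max frame bytes, min interval in seconds).
example_streams = [(1500, 125e-6), (200, 1e-3)]

for size, interval in example_streams:
    limit_mbps = stream_bandwidth_limit_bps(size, interval) / 1e6
    print(f"{size} bytes every {interval * 1e6:.0f} us -> {limit_mbps:.1f} Mbit/s")

print("Fits on a 100 Mbit/s link:", streams_fit(example_streams, 100e6))
```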

Time-Sensitive Networking (TSN) is a set of standards under development by the Time-Sensitive Networking task group of the IEEE 802.1 working group. The majority of projects define extensions to IEEE 802.1Q (Bridges and Bridged Networks), which describes Virtual LANs and network switches. These extensions in particular address transmission with very low latency and high availability. Applications include converged networks with real-time audio/video streaming and real-time control streams, which are used in automotive or industrial control facilities.

In contrast to standard Ethernet according to IEEE 802.3 and Ethernet bridging according to IEEE 802.1Q, time is very important in TSN networks. For real-time communication with hard, non-negotiable time boundaries for end-to-end transmission latencies, all devices in the network need to have a common time reference and therefore need to synchronize their clocks with each other. This is true not only for the end devices of a communication stream, such as an industrial controller and a manufacturing robot, but also for network components, such as Ethernet switches. Only with synchronized clocks is it possible for all network devices to operate in unison and execute the required operation at exactly the required point in time. Scheduling and traffic shaping allow different traffic classes with different priorities to coexist on the same network.

The following are some of the IEEE standards that make up TSN:

- Enhanced synchronization behavior (IEEE 802.1AS)

- Suspending (preemption) of long frames (IEEE 802.1Q-2018)

- Enhancements for scheduled traffic (IEEE 802.1Q-2018)

- Path control and bandwidth reservation (IEEE 802.1Q-2018)

- Seamless redundancy (IEEE 802.1CB)

- Stream reservation (IEEE 802.1Q-2018)

Synchronization of clocks across the network is standardized in Time-Sensitive Networking (TSN). Time in TSN networks is usually distributed from one central time source directly through the network itself using the IEEE 1588 Precision Time Protocol, which utilizes Ethernet frames to distribute time synchronization information.
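The core arithmetic behind this kind of time distribution is compact. The sketch below shows the delay request-response calculation used by the Precision Time Protocol: four timestamps yield the clock offset and the one-way path delay. It assumes a symmetric path (same delay in both directions), and the function name and example timestamps are invented for illustration. It is not taken from any particular TSN or PTP implementation.

```python
# Sketch: PTP delay request-response arithmetic, assuming a symmetric path.
from typing import Tuple


def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Return (offset of slave clock from master, one-way path delay).

    t1: master sends Sync            (master clock)
    t2: slave receives Sync          (slave clock)
    t3: slave sends Delay_Req        (slave clock)
    t4: master receives Delay_Req    (master clock)
    """
    delay = ((t2 - t1) + (t4 - t3)) / 2
    offset = ((t2 - t1) - (t4 - t3)) / 2
    return offset, delay


# ASSUMPTION: made-up timestamps in microseconds. The slave clock runs
# 5 us ahead of the master and the path delay is 2 us in each direction.
offset, delay = ptp_offset_and_delay(t1=100, t2=107, t3=120, t4=117)
print(f"offset: {offset} us, path delay: {delay} us")

# The slave corrects its clock by subtracting the measured offset.
corrected_t3 = 120 - offset
print(f"slave time 120 corresponds to master time {corrected_t3}")
```

If the path is not symmetric, half of the asymmetry shows up as an error in the computed offset, which is one reason hardware timestamping and delay-aware switches matter in practice.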

259 Comments

Tomi Engdahl says:

Dedicated Network Partners

https://www.dnwpartners.com/

Tomi Engdahl says:

https://www.howtogeek.com/813419/how-long-can-an-ethernet-cable-be/

Tomi Engdahl says:

Answering Open Questions in the Race to 800G

July 14, 2022

Operators of large-scale data centers are clamoring for 800G optics, but is the technology ready for prime time? Learn how vendors are navigating uncertainty in the drive to meet market demand.

https://www.mwrf.com/technologies/systems/article/21246566/spirent-communications-answering-open-questions-in-the-race-to-800g?utm_source=RF+MWRF+Today&utm_medium=email&utm_campaign=CPS220715065&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

What you’ll learn:

- Why the shift to 800G presents unique challenges compared to previous Ethernet evolutions.

- The biggest hurdles vendors must clear to bring 800G solutions to customers.

- How different stakeholders are testing and validating 800G components to meet market demand.

When building a house, any of a thousand decisions could lead to budget overruns, blown timelines, and big problems down the road. Fortunately, it’s easy to get answers to most questions that arise: check the blueprints. But what if the blueprints are still being drafted even as construction gets underway?

Thankfully, no one builds houses that way. But such a scenario isn’t far off from what vendors must contend with right now in the race to deliver 800-Gigabit Ethernet (800G).

The last big shift in data-center optics, moving from 100G to 400G, progressed over many years, leaving plenty of time for standards bodies to formalize the technology and for vendors to implement it.

This time around, we don’t have that luxury. With exploding demand for cloud services, operators of the world’s biggest data centers need higher-speed transmission technologies now, not in two or three years.

Vendors in this space—chipset makers, network equipment manufacturers (NEMs), and cable and transceiver vendors—are racing to meet the need as quickly as possible. But with standards still incomplete and open to interpretation, delivering production-ready 800G solutions is far from simple.

Let’s review the biggest outstanding questions facing vendors bringing new 800G components to market and the steps they’re taking to find answers.

As vendors push forward with 800G solutions, they’re working through such open questions as:

Where are 800G standards going, and what will they ultimately look like?

The most immediate issue facing vendors today is the lack of a mature standard—and, in fact, they’re staring at a scenario with two competing standards in different stages of development. In past technology evolutions, IEEE has served as a kind of lodestar for the industry.

However, while IEEE specifies the 112G electrical lanes referenced above in 802.3CK, they haven’t yet completed their 800G standard. In the meantime, vendors seeking to deliver early solutions are using the only 800G standard that exists today, the Ethernet Technology Consortium’s (ETC) 800GBASE-R.

How will these competing standards resolve? Should vendors expect a repeat of the Betamax/VHS wars of the 1980s, where one standard ultimately dominated the market? Should they invest in supporting both? What kind of market momentum will they lose if they wait for clear answers? Whichever way vendors go represents a significant bet, with major long-term implications.

How will different vendor devices interact?

Working with multiple standards in different stages of development also means that early components may not necessarily support the same electrical transmission capabilities, even for core functions like establishing a link. For example, some 800GBASE-R ASICs that support both Auto-Negotiation (AN) and Link Training (LT) are currently incompatible with ASICs that support only LT.

Therefore, even when all components comply with the ETC standard, customers can’t assume that links will automatically be established when using different devices and cables. They may need to manually tune transmit settings. This increases the potential for link flaps, a condition in which links alternate between up and down states, which can dramatically affect throughput.

Which issues that had negligible impact in 400G will now become big problems?

Jumping from 400G to 800G optics means more than a massive speed increase. We’re also doubling the spectrum to use higher frequencies, as well as doubling the sample speed and symbol rate. Suddenly, issues and inefficiencies we didn’t have to worry about in 400G can seriously diminish electrical performance.

One big problem we already know we need to solve: the huge amount of heat generated by 800G optics. Navigating this issue affects everything from the materials used for ASICs to pin spacing, and vendors are still working through all of the implications.

Unleashing Tomorrow’s High-Performance Ethernet

Those are just some of the questions vendors have to wrestle with as they race to get 800G components into the hands of customers. The only way to get answers is to perform exhaustive testing at all layers of the protocol stack.

Right now, vendors and their cloud-provider customers are hard at work testing and validating emerging 800G technologies. Given the unsettled standards space and the many aspects of the technology still in flux, these efforts run the gamut—even when focusing exclusively on products using the ETC standard.

Network equipment manufacturers (NEMs) are working to assure link and application performance under demanding live network conditions. Chipset makers are employing the latest techniques—Layer-1 silicon emulation, software-based traffic emulation, and automated testing workflows—for pre-silicon validation and post-silicon testing. Cable and transceiver vendors are performing exhaustive interoperability testing to validate link establishment and line-rate transmission in multivendor environments. And, as quickly as they can get 800G components, hyperscalers are launching their own extensive testing efforts to baseline network and application performance.

Tomi Engdahl says:

Real-time Ethernet comes to microcontrollers

https://etn.fi/index.php/13-news/13859-reaaliaikainen-ethernet-tulee-mikro-ohjaimille

Many industrial control applications require real-time control. This is often done over Ethernet and the various protocols that run on top of it. Renesas has now introduced a family of controller chips that supports the most widely used industrial network protocols.

With the RZ/N2L microprocessors, adding network functionality to industrial equipment is easy, Renesas claims. The RZ/N2L controllers support many industry-standard specifications and protocols to ease the development of industrial automation equipment that requires real-time capabilities.

Under the Ethernet umbrella, real-time means the increasingly popular TSN standard, Time-Sensitive Networking. Equipped with an integrated TSN-compliant 3-port Gigabit Ethernet switch and an EtherCAT controller, Renesas’s new controllers also support all the major industrial network communication protocols, such as EtherCAT, PROFINET RT, EtherNet/IP, and OPC UA, as well as the new PROFINET IRT.

Tomi Engdahl says:

TIACC: A Collaboration to Accelerate Coexistence

Aug. 10, 2022

Time-sensitive networking enables industrial and non-industrial protocols to run on the same physical wire, enabling IT and OT systems to work together. TIACC brings competitors together to validate these TSN solutions for better interoperability.

https://www.electronicdesign.com/industrial-automation/article/21248445/cclink-partner-association-tiacc-a-collaboration-to-accelerate-coexistence?utm_source=EG+ED+Connected+Solutions&utm_medium=email&utm_campaign=CPS220809161&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

What you’ll learn:

The TIACC partnership’s role in collaboration for industrial networking.

The four primary benefits of time-sensitive networking.

The value of having different networks and devices coexisting on the same physical wire managed with simple system-management tools.

TIACC (TSN Industrial Automation Conformance Collaboration) is a unique and important initiative that clearly demonstrates the value proposition of Ethernet time-sensitive networking (TSN) technology through the participation of competing organizations.

The leading industrial Ethernet organizations—CC-Link Partner Association, ODVA, OPC Foundation, PROFINET International, and AVNU—are working together to make sure that the solutions from their respective organizations are validated and tested together so that end customers may make use of a blended TSN architecture. The organization is focused on developing the necessary test specifications to ensure the coexistence of industry protocols.

https://www.tiacc.net/

Tomi Engdahl says:

https://hackaday.com/2022/08/18/bufferbloat-the-internet-and-how-to-fix-it/

Tomi Engdahl says:

LIKE A DRUMBEAT, BROADCOM DOUBLES ETHERNET BANDWIDTH WITH “TOMAHAWK 5”

https://www.nextplatform.com/2022/08/16/like-a-drumbeat-broadcom-doubles-ethernet-bandwidth-with-tomahawk-5/

In a way, the hyperscalers and cloud builders created the merchant switch and router chip market, and did so by encouraging upstarts like Broadcom, Marvell, Mellanox, Barefoot Networks, and Innovium to create chips that could run their own custom network operating systems and network telemetry and management tools. These same massive datacenter operators encouraged upstart switch makers such as Arista Networks, Accton, Delta, H3C, Inventec, Wistron, and Quanta to adopt the merchant silicon in their switches, which put pressure on the networking incumbents such as Cisco Systems and Juniper Networks on several fronts. All of this has compelled the disaggregation of hardware and software in switching and the opening up of network platforms through the support of the Switch Abstraction Interface (SAI) created by Microsoft and the opening up of proprietary SDKs (mostly through APIs) of the major network ASIC makers.

Tomi Engdahl says:

And sometimes “solving” traffic problems in computer networks can just make things worse. This is now a proven fact.

The Math Proves It: Network Congestion Is Inevitable

And sometimes “solving” traffic problems can just make things worse

https://spectrum.ieee.org/internet-congestion-control?share_id=7191736&socialux=facebook&utm_campaign=RebelMouse&utm_content=IEEE+Spectrum&utm_medium=social&utm_source=facebook#toggle-gdpr

MIT researchers discovered that algorithms designed to ensure greater fairness in network communications are unable to prevent situations where some users are hogging all the bandwidth.

Just as highway networks may suffer from snarls of traffic, so too may computer networks face congestion. Now a new study finds that many key algorithms designed to control these delays on computer networks may prove deeply unfair, letting some users hog all the bandwidth while others get essentially nothing.

These congestion-control algorithms aim to discover and exploit all the available network capacity while sharing it with other users on the same network.

Over the past decade, researchers have developed several congestion-control algorithms that seek to achieve high rates of data transmission while minimizing the delays resulting from data waiting in queues in the network. Some of these, such as Google’s BBR algorithm, are now widely used by many websites and applications.

“Extreme unfairness happens even when everybody cooperates, and it is nobody’s fault.”

—Venkat Arun, MIT

However, although hundreds of congestion-control algorithms have been proposed in the last roughly 40 years, “there is no clear winner,” says study lead author Venkat Arun, a computer scientist at MIT. “I was frustrated by how little we knew about where these algorithms would and would not work. This motivated me to create a mathematical model that could make more systematic predictions.”

Unexpectedly, Arun and his colleagues now find many congestion-control algorithms may prove highly unfair. Their new study finds that given the real-world complexity of network paths, there will always be a scenario where a problem known as “starvation” cannot be avoided—where at least one sender on a network receives almost no bandwidth compared to that of other users.

A user’s computer does not know how fast to send data packets because it lacks knowledge about the network, such as how many other senders are on it or the quality of the connection. Sending packets too slowly makes poor use of the available bandwidth. However, sending packets too quickly may overwhelm a network, resulting in packets getting dropped. These packets then need to be sent again, resulting in delays. Delays may also result from packets waiting in queues for a long time.

Congestion-control algorithms rely on packet losses and delays as signals to infer congestion and decide how fast to send data. However, packets can get lost and delayed for reasons other than network congestion.

Congestion-control algorithms cannot distinguish between delays caused by congestion and delays caused by jitter. This can lead to problems, as delays caused by jitter are unpredictable. This ambiguity confuses senders, which can make each of them estimate delay differently and send packets at unequal rates. The researchers found this eventually leads to situations where starvation occurs and some users get shut out completely.
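The ambiguity described above can be illustrated with a toy model. The sketch below is not the MIT team's mathematical model; it is a minimal, assumed delay-based sender in Python (the function name `update_rate`, the 5 ms delay target, the step sizes, and the rate floor are all hypothetical) that raises its rate while the delay it measures looks low and backs off when it looks high.

```python
def update_rate(rate, rtt, base_rtt, target_delay=0.005,
                step=1.0, backoff=0.5, floor=1.0):
    """One control step of a toy delay-based sender (illustrative only).

    The sender cannot observe the queue directly, so it treats all delay
    above base_rtt as queueing delay, even if part of it is jitter.
    """
    apparent_queueing = rtt - base_rtt
    if apparent_queueing > target_delay:
        return max(rate * backoff, floor)   # looks congested: back off
    return rate + step                      # looks clear: probe for more


def simulate(rounds=10):
    """Two senders share one queue; only sender B sees extra jitter."""
    base_rtt = 0.020        # 20 ms propagation delay for both senders
    true_queueing = 0.002   # 2 ms of real queueing delay, shared by both
    jitter_b = 0.005        # non-congestive delay seen only by sender B
    rate_a = rate_b = 10.0  # arbitrary rate units
    for _ in range(rounds):
        rate_a = update_rate(rate_a, base_rtt + true_queueing, base_rtt)
        rate_b = update_rate(rate_b, base_rtt + true_queueing + jitter_b, base_rtt)
    return rate_a, rate_b


if __name__ == "__main__":
    print(simulate())
```

With these assumed numbers, sender A keeps increasing its rate while sender B is pinned at the floor, even though both face exactly the same queue. Real algorithms are far more sophisticated, but the sketch shows why a sender that misreads jitter as congestion can end up with an unequal share.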

The scientists were surprised to find there were always scenarios with each algorithm where some people got all the bandwidth, and at least one person got basically nothing.

“Some users could be experiencing very poor performance, and we didn’t know about it sooner,” Arun says. “Extreme unfairness happens even when everybody cooperates, and it is nobody’s fault.”

The researchers found that all existing congestion-control algorithms that seek to curb delays are what they call “delay-convergent algorithms” that will always suffer from starvation. The fact that this weakness in these widely used algorithms remained unknown for so long is likely due to how empirical testing alone “could attribute poor performance to insufficient network capacity rather than poor algorithmic decision-making,” Arun says.

Although existing approaches toward congestion control may not be able to avoid starvation, the aim now is to develop a new strategy that does, Arun says. “Better algorithms can enable predictable performance at a reduced cost,” he says.

“We are currently using our method of modeling computer systems to reason about other algorithms that allocate resources in computer systems,” Arun says.

Tomi Engdahl says:

Broadcom’s First Switch with Co-Packaged Optics is Cloud-Bound

Aug. 24, 2022

Tencent will be the first to deploy the co-packaged optical switch in its cloud data centers.

https://www.electronicdesign.com/technologies/embedded-revolution/article/21248744/electronic-design-broadcoms-first-switch-with-copackaged-optics-is-cloudbound

Broadcom is partnering with Tencent to accelerate the adoption of high-bandwidth co-packaged optics switches. The company said it will deploy its first switch with optics inside, called Humboldt, in the cloud giant’s data centers next year.

The switches are based on a new architecture announced in early 2021 that brings co-packaged optical interconnects inside a traditional switch. The technology—also called CPO, for short—effectively shifts the optics and digital signal processors (DSPs) out of the pluggable modules placed at the very front of the switch, uses silicon photonics to turn them into chiplets, and then puts them in the same package as the ASIC.

Broadcom said the ability to connect fiber-optic cables directly to the switch brings big advantages. The company revealed that Humboldt offers 30% power savings and costs 40% less per bit compared to systems relying on traditional switches.

Tomi Engdahl says:

EPB Launches America’s First Community-wide 25 Gig Internet Service

https://epb.com/newsroom/press-releases/epb-launches-americas-first-community-wide-25-gig-internet-service/

CHATTANOOGA, Tenn. (Aug. 24, 2022)—Continuing the focus on delivering the world’s fastest internet speeds that led Chattanooga’s municipal utility to launch America’s first comprehensively available Gig-speed internet service (2010) and the first 10-Gig internet service (2015), EPB has launched the nation’s first community-wide 25 gigabits per second (25,000 Mbps) internet service to be available to all residential and commercial customers over a 100% fiber optic network with symmetrical upload and download speeds.

Tomi Engdahl says:

Keysight, Nokia Demo First 800GE Readiness and Interoperability Public Test

Aug. 9, 2022

The test solution enables network operators to validate the reliability of high-bandwidth, large-scale 800-Gigabit Ethernet (GE) in service-provider and data-center environments.

https://www.mwrf.com/technologies/test-measurement/article/21248412/microwaves-rf-keysight-nokia-demo-first-800ge-readiness-and-interoperability-public-test

Keysight Technologies collaborated with Nokia to successfully demonstrate the first public 800GE test, validating the readiness of next-generation optics for service providers and network operators.

With the move to the 800GE pluggable optics on front-panel ports, interconnect and link reliability requires a new round of validation cycles to support carrier-class environments. These high-speed interfaces create a unique challenge as new 800GE-capable silicon devices, optical transceivers, and high-bandwidth Ethernet speeds must be accurately tested.

The readiness testing, conducted at Nokia’s private SReXperts customer event in Madrid in June 2022, included Keysight’s AresONE 800GE Layer 1-3 line-rate test platform and the Nokia 7750 Service Routing platform. The AresONE was used to test and qualify Nokia’s FP5 network processor silicon along with 800GE pluggable optics. Specific Nokia platforms used in the validation were the FP5-based 7750 SR-1x-48D supporting 48 ports of 800GE and the 7750 SR-1se supporting 36 ports of 800GE.

Nokia’s FP5 silicon delivers 112G SerDes, which enables 800GE support in hardware.

AresONE 800GE

2/4-port QSFP-DD 800GE test solution

https://www.keysight.com/us/en/products/network-test/network-test-hardware/aresone-800ge.html

Multi-rate Ethernet with each port supporting line-rate 2x400GE, 4x200GE, and 8x100GE

Option to add 1x800GE support per port speed

Leverages Keysight’s IxNetwork to validate high-scale Layer 2/3 multi-protocol networking devices for performance and scalability over 800/400/200/100GE speeds
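The breakout arithmetic in the list above can be sketched in a few lines of Python. This assumes a port built from eight electrical lanes of nominally 100 Gb/s each; the constants and the function name `breakout_modes` are illustrative and are not taken from Keysight's documentation.

```python
LANES_PER_PORT = 8      # assumption: eight electrical lanes per port
LANE_RATE_GBPS = 100    # assumption: nominal 100 Gb/s payload per lane


def breakout_modes(lanes=LANES_PER_PORT, lane_rate=LANE_RATE_GBPS):
    """Return (channel_count, channel_speed_gbps) pairs for one port.

    The lanes are split evenly into 1, 2, 4, ... channels, so every
    mode adds up to the same aggregate port bandwidth.
    """
    modes = []
    channels = 1
    while channels <= lanes:
        lanes_per_channel = lanes // channels
        modes.append((channels, lanes_per_channel * lane_rate))
        channels *= 2
    return modes


if __name__ == "__main__":
    for count, speed in breakout_modes():
        print(f"{count}x{speed}GE")
```

Each mode multiplies out to the same 800 Gb/s per port; only the way the lanes are grouped changes.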

Tomi Engdahl says:

Next-Gen Data Center Interconnects: The Race to 800G Webcast with Inphi and Microsoft

https://www.youtube.com/watch?v=w1J9SW62ZnI

This one-hour webcast explores the path to 800G Coherent and the race to push lane speeds to 224G, enabling 800G PAM4 solutions. We will hear from two experts at Inphi, a global leader in optical interconnect technologies: Dr. Radha Nagarajan, CTO and SVP, and Dr. Ilya Lyubomirsky, Senior Technical Director leading the Systems/DSP Architecture team.

Tomi Engdahl says:

Answering Open Questions in the Race to 800G

July 14, 2022

Operators of large-scale data centers are clamoring for 800G optics, but is the technology ready for prime time? Learn how vendors are navigating uncertainty in the drive to meet market demand.

https://www.mwrf.com/technologies/systems/article/21246566/spirent-communications-answering-open-questions-in-the-race-to-800g

What you’ll learn:

Why the shift to 800G presents unique challenges compared to previous Ethernet evolutions.

The biggest hurdles vendors must clear to bring 800G solutions to customers.

How different stakeholders are testing and validating 800G components to meet market demand.

When building a house, any of a thousand decisions could lead to budget overruns, blown timelines, and big problems down the road. Fortunately, it’s easy to get answers to most questions that arise: check the blueprints. But what if the blueprints are still being drafted even as construction gets underway?

Thankfully, no one builds houses that way. But such a scenario isn’t far off from what vendors must contend with right now in the race to deliver 800-Gigabit Ethernet (800G).

The last big shift in data-center optics, moving from 100G to 400G, progressed over many years, leaving plenty of time for standards bodies to formalize the technology and for vendors to implement it.

The Growing Data-Center Challenge

If it seems like you’re having déjà vu, don’t worry. Yes, the industry did just work through similar questions in the development of 400G optics, and many large-scale networks and data centers only recently adopted them. In the world’s biggest hyperscale data centers, though, skyrocketing bandwidth and performance demands have already exceeded what 400G can deliver.

These trends present a double-edged sword for vendors of data-center network and transmission technologies. On one hand, they find a market positively clamoring for 800G components for lower layers of the protocol stack, even in their earliest incarnations. On the other, big questions remain unanswered about the technology. These gray areas have the potential to create significant problems for customers in interoperability, performance, and even in the ability to reliably establish links.

The good news is that 800G is based on well-understood 400G technology. Vendors can apply familiar techniques like analyzing forward-error-correction (FEC) statistics to accurately assess physical-layer health. At the same time, jumping from 400G to 800G isn’t as simple as just tweaking some configurations.
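As a rough illustration of what analyzing FEC statistics can mean in practice, the Python sketch below turns counter values into a pre-FEC bit error ratio and a coarse health label. The counter names, the `link_health` function, and the default BER limit are assumptions made for this example; real equipment exposes its own counters and thresholds.

```python
def pre_fec_ber(corrected_bits, total_bits):
    """Estimate the pre-FEC bit error ratio from FEC counters.

    Every bit the decoder had to correct was an error on the wire,
    so corrected_bits / total_bits approximates the raw channel BER.
    """
    if total_bits <= 0:
        raise ValueError("total_bits must be positive")
    return corrected_bits / total_bits


def link_health(corrected_bits, uncorrectable_codewords, total_bits,
                ber_limit=2.4e-4):
    """Classify a link from a hypothetical counter snapshot.

    ber_limit is an illustrative default, not a value from the article.
    """
    if uncorrectable_codewords > 0:
        return "failing"      # FEC could not repair some codewords
    ber = pre_fec_ber(corrected_bits, total_bits)
    if ber > ber_limit * 0.1:
        return "marginal"     # within one decade of the assumed limit
    return "healthy"


if __name__ == "__main__":
    print(link_health(corrected_bits=1_000, uncorrectable_codewords=0,
                      total_bits=10**12))
```

The point of the sketch is that a link can pass traffic without loss while its corrected-error rate shows how much margin is left, which is why FEC counters are useful for judging physical-layer health.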

Shifting to 112G electrical lanes alone—double the spectral content per lane of 400G technology—represents a huge challenge for the entire industry. Manufacturers in every part of the ecosystem, from circuit boards to connectors to cables to testing equipment, need electrical channel technology that operates well out to twice the symbol rate.

Answering Outstanding Questions

As vendors push forward with 800G solutions, they’re working through such open questions as:

Where are 800G standards going, and what will they ultimately look like?

The most immediate issue facing vendors today is the lack of a mature standard—and, in fact, they’re staring at a scenario with two competing standards in different stages of development. In past technology evolutions, IEEE has served as a kind of lodestar for the industry.

However, while IEEE specifies the 112G electrical lanes referenced above in 802.3ck, it hasn’t yet completed its 800G standard. In the meantime, vendors seeking to deliver early solutions are using the only 800G standard that exists today, the Ethernet Technology Consortium’s (ETC) 800GBASE-R.

How will different vendor devices interact?

Working with multiple standards in different stages of development also means that early components may not necessarily support the same electrical transmission capabilities, even for core functions like establishing a link. For example, some 800GBASE-R ASICs that support both Auto-Negotiation (AN) and Link Training (LT) are currently incompatible with ASICs that support only LT.

Which issues that had negligible impact in 400G will now become big problems?

Jumping from 400G to 800G optics means more than a massive speed increase. We’re also doubling the spectrum to use higher frequencies, as well as doubling the sample speed and symbol rate. Suddenly, issues and inefficiencies we didn’t have to worry about in 400G can seriously diminish electrical performance.

One big problem we already know we need to solve: the huge amount of heat generated by 800G optics. Navigating this issue affects everything from the materials used for ASICs to pin spacing, and vendors are still working through all of the implications.

Network equipment manufacturers (NEMs) are working to assure link and application performance under demanding live network conditions. Chipset makers are employing the latest techniques—Layer-1 silicon emulation, software-based traffic emulation, and automated testing workflows—for pre-silicon validation and post-silicon testing. Cable and transceiver vendors are performing exhaustive interoperability testing to validate link establishment and line-rate transmission in multivendor environments. And, as quickly as they can get 800G components, hyperscalers are launching their own extensive testing efforts to baseline network and application performance.

It’s a huge effort—and a testament to the industry’s ability to push new technologies forward, even in the absence of settled standards.

Tomi Engdahl says:

Next-Gen Data Center Interconnects: The Race to 800G Webcast with Inphi and Microsoft

https://www.juniper.net/us/en/the-feed/topics/trending/the-race-to-800g-webcast-with-inphi-and-microsoft.html

Tomi Engdahl says:

The Race to Deliver 800G Ethernet

https://www.everythingrf.com/community/even-as-standards-come-into-focus-the-race-to-deliver-800g-has-already-begun

If you follow the world’s biggest cloud companies, you’ve probably seen momentum building around the next big thing in hyperscale datacenters: 800 Gigabit Ethernet (800G). “Big” is the right adjective. Emerging 800G optics will unleash huge performance gains in these networks, providing much-needed capacity to satisfy customers’ insatiable demand for bandwidth. While the industry can broadly agree on the need for 800G interfaces, however, the path to actually implementing them remains less clear.

Today, those with the biggest stake in delivering early 800G technologies—chipset makers, network equipment manufacturers (NEMs), transceiver and cable vendors—find themselves in a bind. With hyperscale cloud providers clamoring for 800G solutions now, vendors need to start delivering—or stand aside while competitors do. Yet just because customers want 800G, that doesn’t mean the technology is ready for primetime. The industry continues to work through several complicated issues—not least of which, competing standards that remain immature and open to interpretation. Vendors can’t wait for the dust to settle on these questions. They need to move products forward now. So early, comprehensive testing has become crucially important.

Tomi Engdahl says:

NeoPhotonics Charges Forward in 800G Transceiver Race: Week in Brief: 03/04/22

https://www.photonics.com/Articles/Related_NeoPhotonics_Charges_Forward_in_800G/ar67836

Tomi Engdahl says:

New Design Considerations for a CPO or OBO Switch

https://www.onboardoptics.org/cobo-presentations

On July 13th, the Consortium for On-board Optics (COBO) published a detailed 46-page industry guidance document addressing the design options ahead for a Co-Packaged or On-Board Optics Switch.

Tomi Engdahl says:

https://techcrunch.com/2022/08/31/firewalla-launches-the-gold-plus-its-new-2-5-gigabit-firewall/

Tomi Engdahl says:

Dell’Oro: Multi-gigabit to comprise only 10% of ports shipped through 2026

July 21, 2022

Meanwhile, the ICT industry analyst projects more than $95 billion to be spent on campus switches over the next five years.

https://www.cablinginstall.com/connectivity/article/14280066/delloro-multigigabit-to-comprise-only-10-of-ports-shipped-through-2026?utm_source=CIM+Cabling+News&utm_medium=email&utm_campaign=CPS220722096&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

According to a new report by Dell’Oro Group, an analyst of the telecommunications, networks, and data center industries, more than $95 billion will be spent on campus switches over the next five years — whereas multi-gigabit switches (2.5/5/10 Gbps) are expected to comprise only about 10 percent of port shipments by 2026.

Additional highlights from the analyst’s Ethernet Switch – Campus 5-Year July 2022 Forecast Report include the following data points:

Power-Over-Ethernet (PoE) ports are forecast to comprise nearly half of the total campus port shipments by 2026.

China is expected to recover very quickly from its recent pandemic lockdown and comprise 25 percent of campus switch sales by 2026.

The introduction of new software features and Artificial Intelligence capabilities is expected to increase over the forecast’s timeframe.

Interest in Network-As-a-Service (NaaS) offerings is expected to rise — but the analyst warns that adoption may take time.

“Due to a number of unforeseen headwinds including lingering supply chain challenges, increased macro-economic uncertainties, higher inflation, and regional political conflict, we have lowered our forecast for 2022 and 2023 compared to our prior January report,” explained Sameh Boujelbene, Senior Director at Dell’Oro Group.

Tomi Engdahl says:

Copper in the data center network: Is it time to move on?

July 22, 2022

When it comes to data center cabling, the protracted battle between fiber and copper for supremacy in the access layer is always top of mind. Regardless of which side you’re on, there is no denying the accelerating changes affecting your cabling decision.

https://www.cablinginstall.com/blogs/article/14280135/commscope-copper-in-the-data-center-network-is-it-time-to-move-on?utm_source=CIM+Cabling+News&utm_medium=email&utm_campaign=CPS220722096&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

When it comes to data center (DC) cabling, much has been written about the protracted battle between fiber and copper for supremacy in the access layer. Regardless of which side of that debate you’re on, there is no denying the accelerating changes affecting your cabling decision.

Data center lane speeds have quickly increased from 40 Gbps to 100 Gbps, and now easily reach 400 Gbps in larger enterprise and cloud-based data centers.

Driven by more powerful ASICs, switches have become the workhorses of the data center, such that network managers must decide how to break out and deliver higher data capacities, from the switches to the servers, in the most effective way possible.

About the only thing that hasn’t changed all that much is the relentless focus on reducing power consumption (the horse has got to eat).

Copper’s future in the data center network?

Today, optical fiber is used throughout the DC network—with the exception being the in-cabinet switch-to-server connections in the equipment distribution areas (EDAs). Within a cabinet, copper continues to thrive, for the moment.

Often considered inexpensive and reliable, copper is a good fit for short top-of-rack switch connections and applications less than about 50 Gbps.

It may be time to move on, however.

Copper’s demise in the data center has been long projected. Its useful distances continue to shrink, and increased complexity makes it difficult for copper cables to compete against a continuous improvement in fiber optic costs.

Still, the old medium has managed to hang on. However, data center trends—and, more importantly, the demand for faster throughput and design flexibility—may finally signal the end of twisted-pair copper in the data center.

Two of the biggest threats to copper’s survival are its distance limitation and rapidly increasing power requirements—in a world where power budgets are very critical.

As speeds increase, sending electrical signals over copper becomes much more complicated. The electrical transfer speeds are limited by ASIC capabilities, and much more power is required to reach even short distances.

These issues impact the utility of even short-reach direct attached cables (DACs). The alternative fiber optic technologies become compelling for lower cost, power consumption and operational ease.

As switch capacity grows, copper’s distance issue becomes an obvious challenge.

A single 1U network switch now supports multiple racks of servers—and, at the higher speeds required for today’s applications, copper is unable to span even these shorter distances.

As a result, data centers are moving away from traditional top-of-rack designs and deploying more efficient middle-of-row or end-of-row switch deployments and structured cabling designs.

At speeds above 10G, twisted-pair copper (e.g., UTP/STP) deployments have virtually ceased due to design limitations.

A copper link gets power from each end of the link to support the electrical signaling. Today’s 10G copper transceivers have a maximum power consumption of 3-5 watts[i]. While that’s about 0.5-1.0 W less than transceivers used for DACs (see below), it’s nearly 10X more power than multimode fiber transceivers.

Factor in the cost offset of the additional heat generated, and copper operating costs can easily be double that of fiber.
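To put those figures side by side, here is a back-of-the-envelope Python sketch. It assumes a 4 W copper transceiver (the midpoint of the 3-5 W range quoted above), a multimode fiber transceiver at one tenth of that, and a hypothetical group of 48 links; apart from the quoted range and the roughly 10X ratio, none of these values come from the article.

```python
def link_power_watts(per_transceiver_w, links, ends_per_link=2):
    """Total transceiver power for a group of links, counting both ends."""
    return per_transceiver_w * links * ends_per_link


COPPER_W = 4.0            # assumed midpoint of the quoted 3-5 W range
FIBER_W = COPPER_W / 10   # assumed from the "nearly 10X" comparison
LINKS = 48                # hypothetical number of server links

copper_total = link_power_watts(COPPER_W, LINKS)
fiber_total = link_power_watts(FIBER_W, LINKS)

if __name__ == "__main__":
    print(f"copper: {copper_total:.1f} W, fiber: {fiber_total:.1f} W, "
          f"difference: {copper_total - fiber_total:.1f} W")
```

The cost of removing the extra heat, which the article says can double copper's operating cost, is not modeled here.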

While there are some in the data center who still advocate for copper, justifying its continued use is becoming an uphill battle that is increasingly hard to fight. With this in mind, let’s look at some alternatives.

Direct attached copper (DAC)

Copper DACs, both active and passive, have stepped in to link servers to switches. DAC cabling is a specialized form of twisted pair. The technology consists of shielded copper cable with plug-in, transceiver-like connectors on either end.

Passive DACs make use of the host-supplied signals, whereas active DACs use internal electronics to boost and condition the electrical signals. This enables the cable to support higher speeds over longer distances, but it also consumes more power.

Active and passive DACs are considered inexpensive.

Active optical cable (AOC) is a step up from both active and passive DAC, with bandwidth performance up to 400 Gbps. Plus, as a fiber medium, AOC is lighter and easier to handle than copper.

However, the technology comes with some serious limitations.

AOC cables must be ordered to length, then replaced each time the DC increases speeds or changes switching platforms. Each AOC comes as a complete assembly (as do DACs), so if any component fails, the entire assembly must be replaced. This is a drawback when compared to optical transceivers and structured cabling, which provide the most flexibility and operational ease.

Perhaps more importantly, both AOC and DAC cabling are point-to-point solutions.

Pluggable optics

Meanwhile, pluggable optical transceivers continue to improve to leverage the increased capabilities of switch technologies.

In the past, a single transceiver that carried four lanes (a.k.a. quad small form factor pluggable [QSFP]), enabled DC managers to connect four servers to a single transceiver.

This resulted in an estimated 30 percent reduction in network cost (and, arguably, 30 percent lower power consumption) compared with duplex port-based switches.

Newer switches connecting eight servers per transceiver double down on cost and power savings. Better yet, when the flexibility of structured cabling is added, the overall design becomes scalable.

To that end, the IEEE has introduced the 802.3cm standard and is working on P802.3db, which seeks to define transceivers built for attaching servers to switches.

Co-packaged optics

Still in the early stages, co-packaged optics (CPOs) move the electrical-to-optical conversion engine closer to the ASIC—eliminating the copper trace in the switch.

The idea is to eliminate the electrical losses associated with the short copper links within the switch to achieve higher bandwidth and lower power consumption.

It is an ambitious objective that will require industry-wide collaboration and new standards to ensure interoperability.

Where we’re headed (sooner than we think)

The march toward 800G and 1.6T will continue; it must if the industry is to have any chance of delivering on customer expectations regarding bandwidth, latency, AI, IoT, virtualization and more.

At the same time, data centers must have the flexibility to break out and distribute traffic from higher-capacity switches in ways that make sense—operationally and financially.

This suggests more cabling and connectivity solutions that can support diverse fiber-to-the-server applications, such as all-fiber structured cabling.

Right now, based on the IEEE Ethernet roadmap, 16-fiber infrastructures can provide a clean path to those higher speeds while making efficient use of the available bandwidth. Structured cabling using the 16-fiber design also enables us to break out the capacity so a single switch can support 192 servers.

In terms of latency and cost savings, the gains are significant.

The $64,000 question

The $64,000 question is: Has copper finally outlived its usefulness?

The short answer is no. There will continue to be certain low-bandwidth, short-reach applications for smaller data centers where copper’s low price point outweighs its performance limitations.

So too, there will likely be a role for CPOs and front-panel-pluggable transceivers. There simply is not a one-size-fits-all solution.

Tomi Engdahl says:

Terabit BiDi MSA forms to develop 800G, 1.6T data center MMF interface specs

March 3, 2022

New consortium of leading optical companies to facilitate adoption of interoperable 800 Gb/s and 1.6 Tb/s BiDi optical interfaces for data centers, leveraging 100 Gb/s VCSEL technology.

https://www.cablinginstall.com/data-center/article/14234913/terabit-bidi-msa-forms-to-develop-800g-16t-data-center-mmf-interface-specs

The Terabit Bidirectional (BiDi) Multi-Source Agreement (MSA) group on Feb. 28 announced its formation as an industry consortium to develop interoperable 800 Gb/s and 1.6 Tb/s optical interface specifications for parallel multimode fiber (MMF).

Founding members of the Terabit BiDi MSA include II-VI, Alibaba, Arista Networks, Broadcom, Cisco, CommScope, Dell Technologies, HGGenuine, Lumentum, MACOM and Marvell Technology.

Leveraging a large installed base of 4-pair parallel MMF links, the new MSA will enable an upgrade path for the parallel MMF based 400 Gb/s BiDi to 800 Gb/s and 1.6 Tb/s.

The MSA notes that BiDi technology has already proven successful as a way of providing an upgrade path for installed duplex MMF links from 40 Gb/s to 100 Gb/s.

Tomi Engdahl says:

Viavi selected by Italy’s Open Fiber as FTTH testing partner

July 26, 2022

Viavi was selected as testing partner for the construction, activation and assurance of the Italian wholesale fiber provider’s ambitious network rollout, which is centered on a push for 100% Gigabit penetration.

https://www.cablinginstall.com/testing/article/14280278/viavi-solutions-viavi-selected-by-italys-open-fiber-as-ftth-testing-partner?utm_source=CIM+Cabling+News&utm_medium=email&utm_campaign=CPS220729030&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

Tomi Engdahl says:

Nokia unveils Lightspan SF-8M sealed remote OLT for maximum OSP flexibility

July 25, 2022

The Lightspan SF-8M supports both GPON and XGS-PON connectivity, and is geared for 25G PON. An IP-67-rated sealed enclosure enables OLT installation anywhere in the outside plant, on a strand, inside or outside a cabinet, or on a pole or wall.

https://www.cablinginstall.com/connectivity/article/14280182/nokia-solutions-networks-nokia-unveils-lightspan-sf8m-sealed-remote-olt-for-maximum-osp-flexibility?utm_source=CIM+Cabling+News&utm_medium=email&utm_campaign=CPS220729030&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

Tomi Engdahl says:

Passive optical modules maximize existing GPON fiber networks

Aug. 29, 2022

CommScope has launched its Coexistence product line to maximize GPON fiber networks. The company says its CEx passive optical modules enable operators to add next generation PON services over existing fiber infrastructure.

https://www.cablinginstall.com/connectivity/article/14281949/commscope-passive-optical-modules-maximize-existing-gpon-fiber-networks?utm_source=CIM+Cabling+News&utm_medium=email&utm_campaign=CPS220902046&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

The new modules integrate into the network near the optical line terminals (OLTs), enabling existing PON services to coexist with XGS-PON, NG-PON2, RF video, and optical time domain reflectometer (OTDR) taps, as well as other current and future technologies.

Tomi Engdahl says:

A market view on 400G flexible networking

https://www.smartoptics.com/article/a-market-view-on-400g-flexible-networking/?utm_medium=email&_hsmi=225518282&_hsenc=p2ANqtz-8uiX65Fs8JV8y-oOT-ybGJEXdi09E8QLfH8iE2EwK-eLuSW4_ZWv2nNba0Cgt9-YW-HUpFN90IRTW7ocELdLgYioQDTfi-7HYBENm6UsWEvrNTOU8&utm_content=225520908&utm_source=hs_email

Kent Lidström, CTO of Smartoptics, talks about the trends, challenges and opportunities in open optical networking, or IP over DWDM. What are the main drivers in the market, and what is the future of flexible networking?

Four questions to ask when choosing an optical networking provider

https://www.smartoptics.com/article/four-questions-to-ask-when-choosing-an-optical-networking-provider/?utm_medium=email&_hsmi=225518282&_hsenc=p2ANqtz–BsjuggAfp9SHJpBuYATvmgDSmnHkYkPHEd4xAKW-jCkgmEDcYcgrFI-KFm9XRnzDrsODaB_1yGh4OWs42-smAuvslw3aG99agUyYQ-7Xxbr9fpAE&utm_content=225520908&utm_source=hs_email

1. Who can be a strategic optical networking partner?

2. Who doesn’t have a vested interest in locking you in?

3. Who can you count on for support for your network elements?

4. Who proves they aren’t all talk with satisfied customers?

Tomi Engdahl says:

Real-time monitoring for Ethernet data cables

https://www.uusiteknologia.fi/2022/09/12/ethernet-datakaapelit-reaaliaikaiseen-valvontaan/

In industry, predictive maintenance is an important tool for avoiding unplanned equipment downtime. For this purpose, Germany's Lapp offers a new kind of unit, the Etherline Guard, which can monitor in real time the data cables at risk of failure in Ethernet-based automation networks.

Etherline Guard is a permanently installed monitoring device that evaluates a data cable's current performance and reports it as a percentage. The assessment is based on data about the cable's physical properties and the associated data transmission, gathered through sensors.

The German company recommends the solution particularly for data cables conforming to 100BASE-TX (up to 100 Mbit/s) under the IEEE 802.3 standard, but also for EtherCAT, EtherNET/IP and 2-pair Profinet installations, for example the company's Etherline Torsion Cat. 5 or PN Cat. 5 FD cables. The new device mounts on top-hat rails and, thanks to its IP 20 protection rating, can be installed in control cabinets.

The device runs on a 24-volt supply and is designed for operating temperatures of -40 to +75 °C. It withstands vibration and shock in accordance with DIN EN 60529.

Two versions are available. Maintenance data can be sent to a higher-level controller either by connecting a third data cable to the LAN port (PM03T version) or over a WiFi connection through an antenna (PM02TWA version). Both versions can be connected to a cloud service over MQTT.

https://www.lappkabel.com/industries/industrial-communication/ethernet/etherline-guard.html
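As a rough illustration of how a maintenance system might consume the percentage readings described above, here is a minimal Python sketch. The JSON field names (`cable_id`, `performance_pct`), the sample cable names and the 80 percent threshold are all hypothetical. They are not taken from Lapp's documentation, and the real device's message format may look quite different. The MQTT transport itself is left out, so the sketch only covers what happens once a payload has arrived.

```python
import json

# Hypothetical maintenance threshold, not a vendor recommendation.
ALERT_THRESHOLD_PCT = 80


def cables_needing_attention(payloads, threshold=ALERT_THRESHOLD_PCT):
    """Return the IDs of cables whose reported performance is below threshold.

    Each payload is assumed to be a JSON string with the hypothetical
    fields "cable_id" and "performance_pct".
    """
    flagged = []
    for raw in payloads:
        message = json.loads(raw)
        if message["performance_pct"] < threshold:
            flagged.append(message["cable_id"])
    return flagged


# Made-up sample messages standing in for data received over MQTT.
sample = [
    '{"cable_id": "robot-arm-1", "performance_pct": 97}',
    '{"cable_id": "drag-chain-4", "performance_pct": 62}',
]
print(cables_needing_attention(sample))  # ['drag-chain-4']
```

In practice the threshold would be tuned per installation, since a cable in a constantly moving drag chain may degrade much faster than one in a fixed run.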

Tomi Engdahl says:

Is This the Future of the SmartNIC?

Sept. 7, 2022

At Hot Chips, AMD outlined a 400-Gb/s SmartNIC that combines ASICs, FPGAs, and Arm CPU cores.

https://www.electronicdesign.com/technologies/embedded-revolution/article/21250090/electronic-design-is-this-the-future-of-the-smartnic?utm_source=EG+ED+Connected+Solutions&utm_medium=email&utm_campaign=CPS220913165&o_eid=7211D2691390C9R&rdx.identpull=omeda|7211D2691390C9R&oly_enc_id=7211D2691390C9R

AMD has swiped serious market share in server CPUs from Intel in recent years. But it has bold ambitions to become a premier supplier in the data center by offering a host of accelerators to complement them.

The Santa Clara, California-based company has spent about $50 billion to buy programmable chip giant Xilinx and startup Pensando, expanding its presence in the market for a relatively new type of networking chip called a SmartNIC. These chips, sometimes called data processing units (DPUs), are protocol accelerators that plug into servers to offload from the CPU the infrastructure operations vital to running data centers and telco networks.

Intelligent Offload

Today, the CPUs at the heart of a server function as a traffic cop for the data center, managing networking, storage, security, and a wide range of other behind-the-scenes workloads that primarily run inside software.

But relying on a CPU to coordinate the traffic in a data center is expensive and inefficient. More than one-third of the CPU’s capacity can be wasted on infrastructure workloads. AMD’s Jaideep Dastidar said in a keynote at Hot Chips that host CPUs in servers are also becoming bogged down by virtual machines and containers. This is all complicated by the rise in demand for high-bandwidth networking and new security requirements.

“All of this resulted in a situation where you had an overburdened CPU,” Dastidar pointed out. “So, to the rescue came SmartNICs and DPUs, because they help offload those workloads from the host CPU.”

These devices have been deployed in the public cloud for years. AWS’s Nitro silicon, classified as a SmartNIC, was designed in-house to offload networking, storage, and other tasks from the servers it rents to cloud users.

Dastidar said the processor at the heart of the 400-Gb/s SmartNIC is playing the same sort of role, isolating many of the I/O heavy workloads in the data center and freeing up valuable CPU cycles in the process.

Hard- or Soft-Boiled

These server networking cards generally combine high-speed networking and a combination of ASIC-like logic or programmable FPGA-like logic, plus general-purpose embedded CPU cores. But tradeoffs abound.

Hardened logic is best when you want to use the SmartNIC to carry out focused, data-heavy workloads because it delivers the best performance per watt. However, this comes at the expense of flexibility.

These ASICs are custom-made for a very specific workload—such as artificial intelligence (AI) in the case of Google’s TPU or YouTube’s Argos—as opposed to regular CPUs that are intended for general-purpose use.

Embedded CPU cores, he said, represent a more flexible alternative for offloading networking and other workloads from the host CPU in the server. But these components suffer from many of the same limitations.

The relatively rudimentary NICs used in servers today potentially have to handle more than a hundred million network packets per second. General-purpose Arm CPU cores lack the processing capacity to devour it all.
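To put the "hundred million packets per second" figure in perspective, the following Python sketch works out the worst-case packet rate of an Ethernet link at line rate. It assumes minimum-size 64-byte frames plus the standard 20 bytes of per-frame wire overhead (preamble, start-of-frame delimiter and inter-frame gap). The 3 GHz core clock used for the cycle budget is purely an illustrative assumption, not a figure from the article.

```python
# Per-frame overhead on the wire: 7-byte preamble + 1-byte start-of-frame
# delimiter + 12-byte minimum inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def max_packets_per_second(link_bps, frame_bytes=64):
    """Upper bound on frames per second at line rate for a given frame size."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


def cycles_per_packet(link_bps, core_hz, frame_bytes=64):
    """Clock cycles one core could spend per packet if it handled every packet."""
    return core_hz / max_packets_per_second(link_bps, frame_bytes)


for gbps in (100, 400):
    pps = max_packets_per_second(gbps * 1e9)
    print(f"{gbps} Gb/s: {pps / 1e6:.1f} Mpps, {1e9 / pps:.2f} ns per packet")

# Illustrative assumption: a single 3 GHz core at 100 Gb/s.
print(f"{cycles_per_packet(100e9, 3e9):.2f} cycles per packet")
```

At 100 Gb/s this gives roughly 148.8 million packets per second, or about 6.7 ns per packet, which is around 20 clock cycles on the assumed 3 GHz core. Real traffic uses larger frames on average, so actual rates are lower, but the worst case shows why general-purpose cores alone struggle to keep up.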

“But you can’t overdo it,” said Dastidar. “When you throw a whole lot of embedded cores at the problem, what will end up happening is you recreating the problem in the host CPU in the first place.”

Because of this, many of the most widely used chips in the SmartNIC and DPU category, including Marvell’s Octeon DPU and NVIDIA’s Bluefield, use special-purpose ASICs to speed up specific workloads that rarely change. Then the hardened logic is paired with highly programmable Arm CPU cores that are responsible for the control-plane management.

Other chips from the likes of Xilinx and Intel use the programmable logic at the heart of FPGAs, which bring much faster performance for networking chores such as packet processing. They are also reconfigurable, giving you the ability to fine-tune them for specific functions and add (or subtract) different features at will.

There are tradeoffs: Server FPGAs are very expensive compared with ASICs and CPUs. “Here, too, you don’t want to overdo it because then the FPGA will start comparing unfavorably [to ASICs],” said Dastidar.

Three-in-One SoC

Instead of playing tug-of-war with different architectures, AMD said that it plans to combine all three building blocks in a single chip to strike the best possible balance of performance, power efficiency, and flexibility.

According to AMD, this allows you to run workloads on the ASIC, FPGA, or Arm CPU subsystem that is most appropriate. Alternatively, you can divide the workload and distribute the functions to different subsystems.

“It doesn’t matter if one particular heterogeneous component is solving one function or a combination of heterogeneous functions. From a software perspective, it should look like one system-on-a-chip,” said Dastidar.

The architecture of the upcoming high-performance SmartNIC is comparable to the insides of Xilinx’s Versal accelerator family, which is based on what Xilinx calls its Adaptive Compute Acceleration Platform (ACAP).

AMD said the SmartNIC, based on 7-nm technology from TSMC, will leverage the hardened logic where it works best: Offloading cryptography, storage, and networking data-plane workloads that require power-efficient acceleration.

Tomi Engdahl says:

SmartNIC Accelerating the Smart Data Center

Network interface cards are a lot smarter than they used to be.

https://www.electronicdesign.com/magazine/51282

Tomi Engdahl says:

HYPERSCALERS AND CLOUDS SWITCH UP TO HIGH BANDWIDTH ETHERNET

https://www.nextplatform.com/2022/09/13/hyperscalers-and-clouds-switch-up-to-high-bandwidth-ethernet/

Nothing makes this more clear than the recent market research coming out of Dell’Oro Group and IDC, which both just finished casing the Ethernet datacenter switch market for the second quarter of 2022.

According to Dell’Oro, datacenter switching set a new record for revenues for any second quarter in history and also for any first six months of any year since it has been keeping records. Sameh Boujelbene, senior research director at Dell’Oro, was kind enough to share some insights on the quarter and forecast trends so we can share them with you. (But if you want to get all of the actual figures, you need to subscribe to Dell’Oro’s services, as is the case with IDC data, too.)

In the second quarter, according to Boujelbene, sales of switches into the datacenter grew by more than 20 percent. (Exactly how much, the company is not saying publicly.) That strong double-digit growth is despite some pretty big supply chain issues that the major switch makers are having, notably Cisco Systems and Arista Networks, the two biggest suppliers in this part of the market. While the growth was broad across a bunch of vendors – meaning that most of them grew – only Arista Networks and the white box collective were able to grow their revenue share in the second quarter. Everyone else grew slower than the market and therefore lost market share.

At the high end of the datacenter switching market, with 200 Gb/sec and 400 Gb/sec devices, the number of ports running at these two speeds combined to reach almost 2 million, which was more than 10 percent of all Ethernet ports shipped in Q2 and nearly 20 percent of all Ethernet switch port revenue in Q2. (The revenue split between 200 Gb/sec and 400 Gb/sec was about even, which means somewhat less than two-thirds of those 2 million ports were for 200 Gb/sec devices and slightly more than one-third were for 400 Gb/sec devices.) The hyperscalers and cloud builders drove a lot of this demand.
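The step from an even revenue split to a roughly two-thirds/one-third port split only works if a 400 Gb/sec port costs about twice as much as a 200 Gb/sec port. The Python sketch below makes that arithmetic explicit. The price ratios used here (2.0 and 1.8) are illustrative assumptions, since the article gives no per-port prices, and the function name is invented for this example.

```python
def port_split(total_ports, revenue_share_400g, price_ratio):
    """Split a port total between 200G and 400G from their revenue shares.

    price_ratio is the assumed price of one 400G port divided by the price
    of one 200G port. Port counts are proportional to revenue / price.
    """
    weight_200g = 1.0 - revenue_share_400g
    weight_400g = revenue_share_400g / price_ratio
    ports_200g = total_ports * weight_200g / (weight_200g + weight_400g)
    return ports_200g, total_ports - ports_200g


# Even revenue split over about 2 million ports, under two assumed price ratios.
for ratio in (2.0, 1.8):
    p200, p400 = port_split(2_000_000, 0.5, ratio)
    print(f"ratio {ratio}: 200G {p200 / 2_000_000:.1%}, 400G {p400 / 2_000_000:.1%}")
```

With a ratio of exactly 2.0 the split is two-thirds to one-third. A ratio slightly below 2, such as the assumed 1.8, gives somewhat less than two-thirds for 200 Gb/sec, which matches the direction of the article's estimate.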

“The forecast for 200 Gb/sec and 400 Gb/sec Ethernet is for these to more than double revenue in 2022,” says Boujelbene. “This growth is propelled by an accelerated adoption from Microsoft and Meta Platforms in addition to Google and Amazon. These latter two cloud service providers started adoption of these higher-speed Ethernet options way back in 2018 and 2019. Both 200 GE and 400 GE are forecast to more than double in revenue in 2022. The 200 Gb/sec and 400 Gb/sec Ethernet switch revenues comprised about 10 percent of the total Ethernet datacenter switch market in 2021 and is forecast to more than double in 2022, and in 2022 and 2023, we expect the 200 Gb/sec and 400 Gb/sec to continue to be driven mostly by the large Tier 1 as well as some select Tier 2 cloud service providers, while the rest of the market will remain predominantly on 100 Gb/sec Ethernet.”