Innovation is about finding a better way of doing something. Like many of the new development buzzwords (many of which are overused in business documents), the concept of innovation originates from the world of business. It refers to the generation of new products through creative entrepreneurship, putting them into production, and diffusing them more widely through increased sales. Innovation can be viewed as the application of better solutions that meet new requirements, unarticulated needs, or existing market needs. This is accomplished through more effective products, processes, services, technologies, or ideas that are readily available to markets, governments and society. The term innovation can be defined as something original and, as a consequence, new, that “breaks into” the market or society.

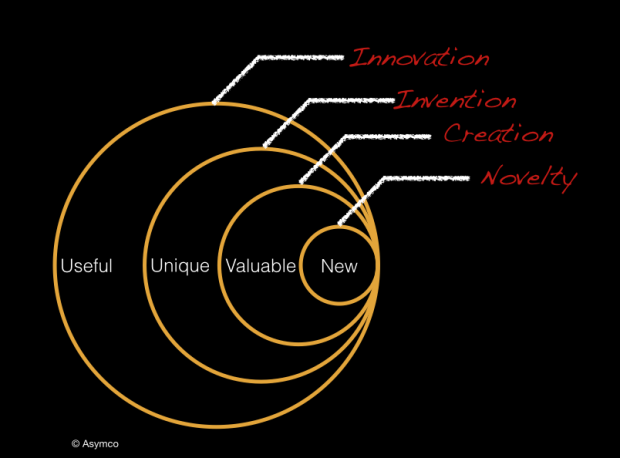

The article Innoveracy: Misunderstanding Innovation points out that there is a form of ignorance which seems to be universal: the inability to understand the concept and role of innovation. It shows up in the misuse of the term and in the inability to tell novelty, creation, invention and innovation apart. The result is a failure to understand the causes of success and failure in business, and hence the conditions that lead to economic growth. The definition of innovation is easy to find but seems to be hard to understand. Here is a simple taxonomy of related activities that puts innovation in context:

- Novelty: Something new

- Creation: Something new and valuable

- Invention: Something new, having potential value through utility

- Innovation: Something new and uniquely useful

The taxonomy is illustrated with the following diagram.

The differences are also evident in the mechanisms that exist to protect the works: Novelties are usually not protectable, Creations are protected by copyright or trademark, Inventions can be protected for a limited time through patents (or kept secret) and Innovations can be protected through market competition but are not defensible through legal means.

Innovation is talked about a lot nowadays as something essential for businesses to do. The article Is innovation essential for development work? says that innovation has become central to the way development organisations go about their work. In November 2011, Bill Gates told the G20 that innovation was the key to development. Donors increasingly stress innovation as a key condition for funding, and many civil society organisations emphasise that innovation is central to the work they do.

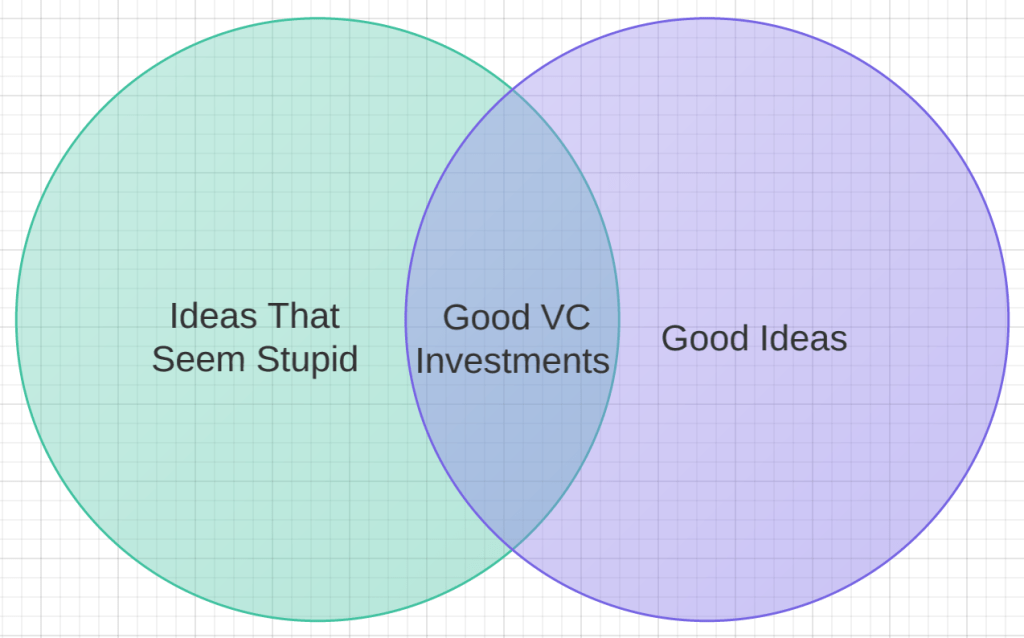

Some innovation ideas are pretty simple, and some are much more complicated and even sound crazy when first heard. There is a place for crazy-sounding ideas: venture capitalists are gravely concerned that the tech startups they’re investing in just aren’t crazy enough.

Not all development problems require new solutions. Sometimes you just need to use old things in a slightly new way. Development innovations may involve devising technology (such as a nanotech water treatment kit), creating a new approach (such as microfinance), finding a better way of delivering public services (such as one-stop e-government service centres), identifying ways of working with communities (such as participation), or generating a management technique (such as organisational learning).

Theorists of innovation identify innovation itself as a brief moment of creativity, to be followed by the main routine work of producing and selling the innovation. When it comes to development, things are more complicated. Innovation needs to be viewed as a tool, not a master. Innovation is a process, not a one-time event. Genuine innovation is valuable but rare.

There are many views on innovation and the innovation process. I have tried to collect here some views I have found online. Hopefully they help more than they confuse. The article Managing complexity and reducing risk has this drawing, which I think describes pretty well how innovation is done in product development:

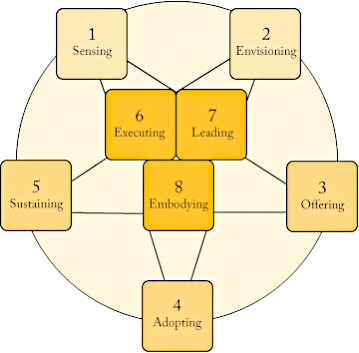

8 essential practices of successful innovation from The Innovator’s Way shows the essential practices in the innovation process. Those practices are all integrated into a non-sequential, coherent whole and style in the person of the innovator.

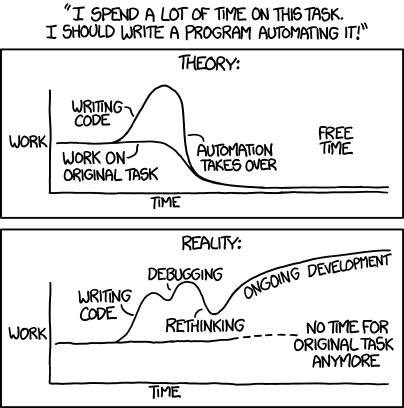

In IT work there are many tasks where a little thinking can be a source of innovation. Automating IT processes can be a huge time saver, or it can fail, depending on the situation. The XKCD comic strip Automation illustrates this.
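Whether automating a task pays off can be estimated with a little arithmetic. The following is a minimal Python sketch and is not taken from the comic or from any of the cited articles. The function name `automation_break_even` and all of its parameters are hypothetical, and the sketch assumes that the time saved and the upkeep effort stay constant from week to week, which real projects rarely manage.

```python
from typing import Optional


def automation_break_even(
    build_hours: float,
    manual_minutes_per_run: float,
    runs_per_week: float,
    upkeep_hours_per_week: float = 0.0,
) -> Optional[float]:
    """Return the number of weeks until automation has paid for itself.

    Returns None when weekly upkeep eats all of the weekly savings,
    i.e. the automation never pays off under these assumptions.
    """
    # Hours of manual work avoided each week.
    saved_hours_per_week = manual_minutes_per_run * runs_per_week / 60.0
    # Savings left over after maintaining the automation itself.
    net_hours_per_week = saved_hours_per_week - upkeep_hours_per_week
    if net_hours_per_week <= 0:
        return None
    return build_hours / net_hours_per_week


if __name__ == "__main__":
    # A 30-minute task done four times a week, automated in 10 hours.
    print(automation_break_even(10, 30, 4))
    # The same task when the script needs 2 hours of fixing every week.
    print(automation_break_even(10, 30, 4, upkeep_hours_per_week=2.0))
```

The second call is the failure case: when ongoing maintenance costs as much as the manual work it replaces, the break-even point is never reached.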

The article System integration is a critical element in project design has an interesting graphic on what influences project cost. The recommendation is to involve a system integrator early in project design to help ensure high-quality projects that satisfy project requirements. Of course this article tries to market system integration services, but it also has valid points to consider.

Core Contributor Loop (CTTDC), from the Art Journal blog posting Blog Is The New Black, tries to link inventing an idea to the theory of entrepreneurship. It is essential to tune the engine by making improvements in product, marketing, code, design and operations.

4,506 Comments

Tomi Engdahl says:

Samuli Bergström: User interface design is challenging

The user interface makes or breaks a product. Whether it is a mobile phone or a production test system, the interface defines what the user experience of the product will eventually be.

Why is user interface design so challenging? The most common reason for failure is that we, especially engineers, easily forget for whom we are designing the user interface.

The same is true of, for example, Internet sites. How often have I searched company and educational sites for something as simple as a business address. Plenty of other information is there, but the business address tends to be missing, or it is hidden somewhere where it is almost impossible to find.

Engineers very often design user interfaces for themselves and not necessarily for the production operators.

So what makes a good user interface? A good user interface is very simple, and its design is based on co-operation with the end customer.

You should also ask the operators which systems have the best user interfaces and which have the worst. Similarly, you should take into account what kind of user interfaces have been used in the past.

Internet sites, mobile phones and tablets have brought fresh winds to user interfaces. Ideas for new ways to make user interfaces pour in from every direction. Touch screens are already familiar to young children, and they become more

Fingers do not fully replace the mouse, especially in precision. The placement and size of push buttons must be thought through in order to avoid mishaps. Ready-made design rules, fortunately, can be found online for free for various platforms such as iOS and Android, and they can also be used in other application fields.

Also, the color scheme of Internet sites and mobile / tablet applications is much richer than the gray and boring windows of production test systems.

A good user interface is easy to use, visually pleasing, informative and forgiving of potential errors made by the user. Such development, however, requires close co-operation with the customer and the courage to try something new.

Source: http://www.etn.fi/index.php?option=com_content&view=article&id=2226:samuli-bergstrom-kayttoliittyman-suunnittelu-on-haastavaa&catid=9&Itemid=139

Tomi Engdahl says:

Connectivity a game changer for embedded design

http://www.edn.com/electronics-blogs/embedded-insights/4437822/Connectivity-a-game-changer-for-embedded-design

Whether wired or wireless, adding network connectivity to an embedded system is a potential game changer. Connectivity, especially to the Internet, brings a host of opportunities and challenges not seen in stand-alone designs. But you have to challenge your assumptions about what your embedded system can and must do as part of its normal operation.

Many embedded systems already have some form of connectivity to other devices, often to exchange data and commands. Remote user interfaces are a similar application of connectivity. Typically these occur over either a direct connection or a local network of some kind.

But connectivity, especially over a wide area, can bring much more to embedded systems. The key is envisioning the rest of the network as an extension of the embedded system and considering the operation of it all as a single device. With that in mind, a wealth of opportunities becomes available to an embedded design as certain constraints fall away.

Embedded Design Profession Under Siege

http://www.edn.com/electronics-blogs/embedded-insights/4438263/Embedded-Design-Profession-Under-Siege

In the early years, microprocessors were a generally unknown technology. Those of us working with them needed an intimate understanding of how they functioned. We worked in assembly language, often compiling by hand, and generally had to develop our own development tools. Most of our management had no clue how these systems functioned.

Embedded systems developers formed communities, and specialized educational channels such as Dr. Dobbs Journal and the Embedded Systems Conference arose. Still, the state of the art continued advancing rapidly and those who were practitioners of the art remained specialists who could command respect and could earn great rewards.

But we seem to have done our job too well. The computing element at the heart of our designs became so ubiquitous that its abilities are now taken for granted. Our children grew up with computers as a household item, and our grandchildren play with toys that outperform the supercomputers of those early days. Programming has become a widespread skill, making millionaires out of clever smartphone app developers, and grade-school children are engaged in robot competitions.

Development tools, software libraries, and off-the-shelf processor modules have also become commonplace, and incredibly inexpensive if not completely free. Even professional-level mechanical and system design tools are now freely available to students. Anyone can become an embedded developer and do so easily. Those of us who have been trained in the profession have only our years of experience to differentiate ourselves from this wider developer community. Meanwhile, the industries in which we work are shifting to embrace the innovation and creativity these new developers bring to the table.

So where does this leave those of us who have been professional embedded developers? It’s hard to say.

But processors are getting faster and memory cheaper, so efficiency in programming is becoming less important. They’re also getting cheaper, and product lifetimes becoming shorter. Cost optimization of design is thus becoming less critical, and the time required to optimize is becoming an impediment to market success.

Embedded systems development as a profession is under siege. Companies like Oracle want to turn the millions of Java web programmers into embedded developers for the Internet of Things. Low-cost development boards such as the Arduino are reducing the need for electronics expertise to the level that anyone with a hint of cleverness can turn an idea into a working prototype. Experienced embedded systems professionals may well find themselves relegated to specialty areas such as hard real-time system

Tomi Engdahl says:

Erik Meijer: AGILE must be destroyed, once and for all

Enough of the stand-up meetings please, just WRITE SOME CODE

http://www.theregister.co.uk/2015/01/08/erik_meijer_agile_is_a_cancer_we_have_to_eliminate_from_the_industry/

A couple of months back, Dutch computer scientist Erik Meijer gave an outspoken and distinctly anti-Agile talk at the Reaktor Dev Day in Finland.

“Agile is a cancer that we have to eliminate from the industry,” said Meijer; harsh words for a methodology that started in the nineties as a lightweight alternative to bureaucratic and inflexible approaches to software development.

The Agile Manifesto is a statement from a number of development gurus espousing four principles:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

What is wrong with Agile? Meijer’s thesis is that “we talk too much about code, we don’t write enough code.”

Stand-up meetings in Scrum (a common Agile approach) are an annoying interruption at best, or at worst one of the mechanisms of “subtle control”, where managers drive a team while giving the illusion of shared leadership. “We should end Scrum and Agile,” he says. “We are developers. We write code.”

Even test driven development, where developers write tests to subsequently verify the behaviour of their code, comes in for a beating. “This is so ridiculous. Do you think you can model the real failures that happen in production? No,” says Meijer.

He advocates instead a “move fast and break things” model, where software is deployed and errors fixed as they are discovered.

However, not all of Meijer’s arguments stand up.

“It’s not Agile that sucks, and dragging programmers down. It’s the inability of programmers and business people to understand each other,”

Like Meijer though, Ferrier is no fan of Scrum. “Sprints are utterly ridiculous ways to produce software,” he says, referring to the short-term targets which form part of Scrum methodology.

Meijer quotes “pragmatic programmer” Dave Thomas, another Manifesto signatory:

“The word ‘agile’ has been subverted to the point where it is effectively meaningless, and what passes for an agile community seems to be largely an arena for consultants and vendors to hawk services and products.”

Tomi Engdahl says:

Meet an engineer mentor, be an engineer mentor in 5 minutes

http://www.edn.com/electronics-blogs/engineering-the-next-generation/4438224/Meet-a-mentor–be-a-mentor-in-5-minutes?_mc=NL_EDN_EDT_EDN_funfriday_20150109&cid=NL_EDN_EDT_EDN_funfriday_20150109&elq=0fe235bd0f614dc29b2bc7fc1ba72ebb&elqCampaignId=21100

Mentoring seems to be almost a natural instinct for engineers. Engineers see someone in need of a helping hand and step in, just like they would if a design needed help.

But, still, it’s a time consuming act that, if done long term, can require significant commitment. And let’s be honest, not every person out there is looking to learn (or is worth your time).

Tomi Engdahl says:

Online learning, EE or otherwise

http://www.edn.com/electronics-blogs/benchtalk/4438087/Online-learning–EE-or-otherwise?_mc=NL_EDN_EDT_pcbdesigncenter_20150112&cid=NL_EDN_EDT_pcbdesigncenter_20150112&elq=9c484b91ec0b4f2c98e53346cb9afefb&elqCampaignId=21104

I wish I had a few dozen lifetimes to enjoy all the online learning opportunities now available. Do you need to brush up on some engineering or math concepts? There’s an app for that.

Most online learning is still passive and traditional. You listen to lectures and perhaps complete exercises and tests. But as momentum builds behind the concept, we’ll start to see more intelligence and personalization in our cybernetic tutors.

Tomi Engdahl says:

Disney, McLaren and National Rail walk into bar: Barkeep, make me a Wearable

You tryin’ to be a smartwatch, pal?

http://www.theregister.co.uk/2015/01/13/innovate_uk_disney_and_mclaren_jump_on_wearable_tech_bandwagon/

A £210,000 fund has been put up by Innovate UK to promote development of wearable tech and big name companies have jumped at the chance to be associated with the coolest thing since “apps” and “cloud” became passé.

Innovate UK is what used to be known as the Technology Strategy Board and is the governmental organisation that funds various projects to develop different aspects of technology.

The prize fund is divided into six categories, each of which gets £35,000.

Which all sounds like someone needs to build an NFC wristband with an associated app.

Tomi Engdahl says:

Computing Teachers Concerned That Pupils Know More Than Them

http://www.i-programmer.info/news/150-training-a-education/8169-computing-teachers-concerned-that-pupils-know-more-than-them.html

A survey of UK schools carried out by Microsoft and Computing at School reveals some worrying statistics that are probably more widely applicable.

The UK is working hard to introduce a new emphasis on computer science at school but, as always, the problem is getting the teachers up to speed. With computing there is the added difficulty that if a teacher is a good programmer or just a good sys admin then they can probably earn a lot more elsewhere.

The survey revealed that 68% of primary and secondary teachers are concerned that their pupils have a better understanding of computing than they do. Moreover, the pupils reinforced this finding, with 47% claiming that their teachers need more training. Again, to push the point home, 41% of pupils admitted to regularly helping their teachers with technology.

On the plus side, 69% of the teachers said that they enjoyed teaching the new computing curriculum and 73% felt confident in delivering it. However, 81% still thought that they needed more training, development and learning materials.

The real problem is that people who know about computing aren’t generally lured into teaching.

Tomi Engdahl says:

Do not outsource, at least in e-commerce

E-commerce development needs the right kind of resources. He stresses that expertise should be built so that it is not in the hands of consultants but in the company's own house.

“When I have talked with, for example, British or Dutch online merchants, all of them have told me that they have the hackers in-house. According to them it is, after all, the entire core business,” Malinen says.

In his opinion, there is staggering power in having coding skills, hard analytics knowledge and an understanding of psychology in your own house. And, in his opinion, these should stay strictly in-house.

Certain types of companies are building up more and more of their own e-commerce expertise and buying in fewer consultants.

“The fact that, in principle, everything can be outsourced does not always make it a smart thing to do. It may be better to get help in other ways,”

Multi-skilled people are needed

“Through testing we find the things that really affect sales, and we focus on doing them. The business, analytics and IT sides will be very close to each other. There are even such wonders as people who have all of them in the same package,”

This is going to be a difficult spot in the labor market: there is a need to find really good analysts with sufficient technical skills.

“Mathematicians and statisticians can be found. A big gap arises when they also need digital competence. The same gap arises when a coder should understand statistics. Math and economics people often lack the web development layer.”

A labor shortage is already visible in analytics.

“In Finland’s small labor market, such talent is not terribly easy to find,”

Source: http://summa.talentum.fi/article/tv/12-2014/124314

Tomi Engdahl says:

Bloopers Book Helps Improve GUI Development

http://www.eetimes.com/author.asp?section_id=31&doc_id=1325273&

With the rising popularity of touchscreen controls, the need for well-considered graphical user interfaces (GUIs) has become paramount. This book can help.

The book is GUI Bloopers 2.0 by Jeff Johnson of UI Wizards. I found it an interesting read, chock full of helpful suggestions, that should probably be in the library of any development team tasked with developing a graphical user interface (GUI). Given the prevalence of touch screens and graphical panels today, that group probably means most embedded developers.

One of the first things you notice about the book is that it avoids criticizing, embarrassing, or amusing with tales of GUIs gone wrong.

“My aim is not to provide a parade of UI howlers …. My purpose is to help GUI designers and developers learn to produce better GUIs.” To this end he provides concrete examples of both ill- and well-formed designs, articulating the nature of a blooper, the probable reasons for its occurrence, and steps needed to mitigate the situation.

As the primary means your customer has of interacting with your product, the user interface deserves extra care and attention. Implemented properly the GUI can build customer confidence and brand loyalty as well as your product’s reputation just as much as the product’s performance at its intended activity. Implemented badly, the GUI can drive customers away regardless of the product’s abilities.

Tomi Engdahl says:

Medical Innovation or Quackery?

http://www.quackometer.net/blog/2014/12/medical-innovation-or-quackery.html

One of the common marketing messages behind alternative medicine is that it represents true innovation in healthcare. Using one of Prince Charles’ favourite words, it tries to offer “integrative” services by combining the best of ‘Western’, or orthodox, medicine with ancient traditions, Eastern practices and New Age ways of knowing.

But at the heart of this debate then is what we mean by the words ‘medical innovation’. To one person, a treatment might be innovative; to another, straightforward quackery.

Accepted medical treatments ought to have an appropriate level of evidence to overcome any doubts regarding their plausibility. Extraordinary claims ought to have lots of evidence. Unambiguous treatments, where outcomes are binary and unquestionable, probably need a lower level of evidence.

So, innovative treatments are those that exist below this threshold but are not implausible.

Doctors are currently well able to use innovative treatments as long as they are backed by sound medical and scientific reasons as to why they would be in the best interests of patients. Current law protects such doctors and they do not fear litigation. Where fears of litigation exist is when a doctor would use a treatment that sits towards the lower left quadrant. The Saatchi Bill does nothing for the innovative treatments, but is allowing doctors to act with impunity in the quadrant of quackery.

Tomi Engdahl says:

Why the Future Will be Made by Creators, Not Consumers | WIRED

http://www.wired.com/2014/12/future-made-by-creators-not-consumers/

Tomi Engdahl says:

All Our Students Thinking

http://www.ascd.org/publications/educational-leadership/feb08/vol65/num05/All-Our-Students-Thinking.aspx

One stated aim of almost all schools today is to promote critical thinking. But how do we teach critical thinking? What do we mean by thinking?

In an earlier issue on the whole child (September 2005), Educational Leadership made it clear that education is rightly considered a multipurpose enterprise. Schools should encourage the development of all aspects of whole persons: their intellectual, moral, social, aesthetic, emotional, physical, and spiritual capacities.

How to teach all students to think critically

http://theconversation.com/how-to-teach-all-students-to-think-critically-35331

So what should any mandatory first year course in critical thinking look like? There is no single answer to that, but let me suggest a structure with four key areas:

argumentation

logic

psychology

the nature of science.

Tomi Engdahl says:

Let’s Teach Students to Think Critically, Not Test Mindlessly

http://www.huffingtonpost.com/eric-cooper/lets-teach-students-to-th_b_4556320.html

Tomi Engdahl says:

How To Teach All Students To Think Critically

http://www.iflscience.com/how-teach-all-students-think-critically

So what should any mandatory first year course in critical thinking look like? There is no single answer to that, but let me suggest a structure with four key areas:

argumentation

logic

psychology

the nature of science.

I will then explain that these four areas are bound together by a common language of thinking and a set of critical thinking values.

1. Argumentation

The most powerful framework for learning to think well in a manner that is transferable across contexts is argumentation.

Arguing, as opposed to simply disagreeing, is the process of intellectual engagement with an issue and an opponent with the intention of developing a position justified by rational analysis and inference.

2. Logic

Logic is fundamental to rationality. It is difficult to see how you could value critical thinking without also embracing logic.

People generally speak of formal logic – basically the logic of deduction – and informal logic – also called induction.

3. Psychology

The messy business of our psychology – how our minds actually work – is another necessary component of a solid critical thinking course.

4. The Nature Of Science

It is useful to equip students with some understanding of the general tools of evaluating information that have become ubiquitous in our society. Two that come to mind are the nature of science and statistics.

Learning about what the differences are between hypotheses, theories and laws, for example, can help people understand why science has credibility without having to teach them what a molecule is, or about Newton’s laws of motion.

The Language Of Thinking

Embedded within all of this is the language of our thinking. The cognitive skills – such as inferring, analysing, evaluating, justifying, categorising and decoding – are all the things that we do with knowledge.

Tomi Engdahl says:

While larger organizations will doubtless continue to have some specialized IT roles, more and more IT managers will need to become generalists. They’ll have to adapt to broader roles that reflect less focus on narrow technology stovepipes—like storage, networking, or virtualization.

“We need to be innovation leaders for the organization, offering new capabilities and showing how technology can be deployed to further business advantage.”

Source: http://www.forbes.com/sites/netapp/2014/01/14/why-it-went-hybrid/

Tomi Engdahl says:

Why the Future Will be Made by Creators, Not Consumers

http://www.wired.com/2014/12/future-made-by-creators-not-consumers/

The ability to code enables young people to become creators rather than consumers. Students with this creative capacity and technical literacy will hold the power in the future. They are the next generation of entrepreneurs

Tomi Engdahl says:

If you are unfamiliar with ‘the chasm’, this is a reference to an acclaimed technology marketing book by Geoffrey A. Moore in 1991.

The first, consumers described as innovators, are on the very bleeding edge. Innovators have a combination of unique interest in the subject area and abundant disposable income. There is a small chasm between this group and the next. This small chasm has killed many technologies and you could probably argue that 3D TVs died here.

Next, early adopters are more like typical AnandTech readers. These consumers are technologically savvy and often the technology go-to person for groups of family and friends. Early adopters are likely to have made investments into products that their friends have yet to invest in themselves.

This brings us to The Big Scary Chasm in Question. How does a technology explode from a “hobby”, as Apple famously described its Apple TV, to a staple like the iPhone? In the information age of today, crossing this chasm is primarily a focus of marketing. Sure, you need a good product, but without effective marketing there is little chance of wide adoption. There are plenty of examples of products that have been favorably reviewed by AnandTech and others but didn’t see widespread adoption.

Often, it’s a case of competing against the marketing budget of a much larger company, but that’s a topic for another day. In short, going from a cult hit to a market leader is difficult; hence, the Chasm.

Source: http://www.anandtech.com/show/8810/wearables-2014-and-beyond

Tomi Engdahl says:

Why Some Teams Are Smarter Than Others

http://science.slashdot.org/story/15/01/19/0249200/why-some-teams-are-smarter-than-others

Everyone who is part of an organization — a company, a nonprofit, a condo board — has experienced the pathologies that can occur when human beings try to work together in groups. Now the NYT reports on recent research on why some groups, like some people, are reliably smarter than others.

Why Some Teams Are Smarter Than Others

http://www.nytimes.com/2015/01/18/opinion/sunday/why-some-teams-are-smarter-than-others.html

ENDLESS meetings that do little but waste everyone’s time. Dysfunctional committees that take two steps back for every one forward. Project teams that engage in wishful groupthinking rather than honest analysis. Everyone who is part of an organization — a company, a nonprofit, a condo board — has experienced these and other pathologies that can occur when human beings try to work together in groups.

But does teamwork have to be a lost cause? Psychologists have been working on the problem for a long time. And for good reason: Nowadays, though we may still idolize the charismatic leader or creative genius, almost every decision of consequence is made by a group.

Psychologists have known for a century that individuals vary in their cognitive ability. But are some groups, like some people, reliably smarter than others?

Individual intelligence, as psychologists measure it, is defined by its generality: People with good vocabularies, for instance, also tend to have good math skills, even though we often think of those abilities as distinct. The results of our studies showed that this same kind of general intelligence also exists for teams. On average, the groups that did well on one task did well on the others, too. In other words, some teams were simply smarter than others.

Instead, the smartest teams were distinguished by three characteristics.

First, their members contributed more equally to the team’s discussions, rather than letting one or two people dominate the group.

Second, their members scored higher on a test called Reading the Mind in the Eyes, which measures how well people can read complex emotional states from images of faces with only the eyes visible.

Finally, teams with more women outperformed teams with more men. Indeed, it appeared that it was not “diversity” (having equal numbers of men and women) that mattered for a team’s intelligence, but simply having more women. This last effect, however, was partly explained by the fact that women, on average, were better at “mindreading” than men.

Emotion-reading mattered just as much for the online teams whose members could not see one another as for the teams that worked face to face. What makes teams smart must be not just the ability to read facial expressions, but a more general ability, known as “Theory of Mind,” to consider and keep track of what other people feel, know and believe.

Tomi Engdahl says:

Japanese Nobel Laureate Blasts His Country’s Treatment of Inventors

http://yro.slashdot.org/story/15/01/20/0059203/japanese-nobel-laureate-blasts-his-countrys-treatment-of-inventors

Shuji Nakamura won the 2014 Nobel Prize in Physics (along with two other scientists) for his work inventing blue LEDs. But long ago he abandoned Japan for the U.S. because his country’s culture and patent law did not favor him as an inventor. Nakamura has now blasted Japan for considering further legislation that would do more harm to inventors.

There is a similar problem with copyright law in the U.S., where changes to the law in the 1970s and 1990s have made it almost impossible for copyrights to ever expire. The changes favor the corporations rather than the individuals who might actually create the work.

Nobel laureate Shuji Nakamura is still angry at Japan

http://news.sciencemag.org/asiapacific/2015/01/nobel-laureate-shuji-nakamura-still-angry-japan

In the early 2000s, Nakamura had a falling out with his employer and, it seemed, all of Japan. Relying on a clause in Japan’s patent law, article 35, that assigns patents to individual inventors, he took the unprecedented step of suing his former employer for a share of the profits his invention was generating. He eventually agreed to a court-mediated $8 million settlement, moved to the University of California, Santa Barbara (UCSB), and became an American citizen. During this period he bitterly complained about Japan’s treatment of inventors, the country’s educational system, and its legal procedures.

During a visit to Japan last week, Nakamura, now 60 and still at UCSB, gave a press conference where he was asked about Japan’s research environment in light of his Nobel recognition. A summary of his comments, edited for brevity and clarity, follows.

On whether Japan has changed in terms of rewarding inventors: “Before my lawsuit, the typical compensation fee [to inventors for assigning patent rights] was a special bonus of about $10,000. But after my litigation, all companies changed [their approach]. The best companies pay a few percent of the royalties or licensing fee [to the inventors].”

On why Japanese companies have lost ground internationally: “Japan is very good at making products—semiconductors, cell phones, TVs, solar cells. But they could sell only in the domestic market. They couldn’t sell outside of Japan. Their globalization is very bad. The reason is probably the language problem. Japanese are the worst [in terms of] English performance.”

On Japanese and Asian education: “The Japanese entrance exam system is very bad. And China, Japan, Korea are all the same. For all high school students, their education target is to enter a famous university. I think the Asian educational system is a waste of time. Young people [should be able] to study different things.”

On Japan’s courts: “The Japanese legal system is the worst in the world. If there is a lawsuit in the U.S., first there is a discovery process. We have to give all the evidence to the lawyers. In Japan there is no discovery process.”

On economic and educational reform in Japan: “I think that until the Japanese economy collapses, no changes will happen at all.”

On the benefits of winning a Nobel Prize: “I don’t have to teach anymore, and I get a parking space. That’s all I got from the University of California.”

Tomi Engdahl says:

Green Screens & Ham (Apologies to Dr. Seuss)

http://www.edn.com/electronics-blogs/benchtalk/4438356/Green-Screens—Ham–Apologies-to-Dr–Seuss-?_mc=NL_EDN_EDT_EDN_weekly_20150122&cid=NL_EDN_EDT_EDN_weekly_20150122&elq=877157bf14d148eaa578e0df5b8cff85&elqCampaignId=21276

Is ham radio still a gateway to engineering?

I got the license mainly because I wanted to start messing with RF design, and it seemed like the thing to do.

What about you? Was ham a gateway to electronics? Are you still active?

Tomi Engdahl says:

Motion Control Comes to Masses with TI Launchpad

http://www.eetimes.com/document.asp?doc_id=1325405&

Since the advent of the Arduino, a flood of low-cost development boards have become available to help speed and simplify embedded systems development. Now, motion control of 3-phase DC motors has one of its own. Based on the Texas Instruments C2000 Piccolo MCU, the InstaSPIN-MOTION Launchpad comes pre-loaded with motion control software for just $25.

The F2806x is part of the TI Launchpad modular development board series.

The pre-loaded motor control libraries greatly simplify the development of motion control systems using brushless DC motors. “With brushed DC motors you just apply power and it turns,”

“but you have to deliberately commutate brushless motors, energizing the coils at the right times.”
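To make "energizing the coils at the right times" concrete, here is a minimal sketch of six-step (trapezoidal) commutation for a 3-phase brushless motor. It is an illustration only, not the InstaSPIN-MOTION library: the names `COMMUTATION_STEPS` and `phase_drive` are hypothetical, and the mapping from rotor angle to step is an assumption, since real motors and controllers define their own sensor-to-phase mapping.

```python
# Hypothetical six-step commutation table (assumed ordering, for illustration).
# In each step one phase is driven high (+1), one low (-1), one left floating (0).
COMMUTATION_STEPS = [
    {"A": +1, "B": -1, "C": 0},
    {"A": +1, "B": 0, "C": -1},
    {"A": 0, "B": +1, "C": -1},
    {"A": -1, "B": +1, "C": 0},
    {"A": -1, "B": 0, "C": +1},
    {"A": 0, "B": -1, "C": +1},
]


def phase_drive(electrical_angle_deg):
    """Return the drive pattern for a rotor electrical angle in degrees.

    Each step covers a 60-degree sector of one electrical revolution.
    """
    sector = int((electrical_angle_deg % 360) // 60)
    return COMMUTATION_STEPS[sector % 6]  # modulo guards against float rounding


# Walk through one electrical revolution and show which coils are energized.
for angle in range(0, 360, 60):
    print(angle, phase_drive(angle))
```

A brushed motor performs this switching mechanically through its brushes; in a brushless motor the controller has to step through such a table in sync with the rotor position.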

Development boards such as these are part of a larger trend toward simplifying and speeding development of complex mechatronic systems while minimizing the need for special expertise. “Professionals and Makers alike want to be able to rapidly prototype solutions at lower cost,”

Tomi Engdahl says:

VIDEO: Future Dr. Visits May Include Telemedicine, Sensors & Mobile Clinics — Kaiser Permanente and other companies are applying everything from telehealth to monitoring sensors to mobile clinics to reimagine the conventional doctor’s appointment.

VIDEO: Seeing the Doctor of the Future

https://ssl.www8.hp.com/hpmatter/issue-no-3-winter-2015/video-seeing-doctor-future

Tomi Engdahl says:

Davos 2015: Less Innovation, More Regulation, More Unrest. Run Away!

http://news.slashdot.org/story/15/01/27/0125221/davos-2015-less-innovation-more-regulation-more-unrest-run-away

Growing income inequality was one of the top four issues at the 2015 World Economic Forum meeting in Davos, Switzerland, ranking alongside European adoption of quantitative easing and geopolitical concerns. Felix Salmon, senior editor at Fusion, said there was a consensus that global inequality is getting worse, fueling overriding pessimism at the gathering. The result, he said, could be that the next big revolution will be in regulation rather than innovation.

Davos: Inequality Causes Concern, Few Expect Improvement

http://cfi.co/europe/2015/01/davos-inequality-causes-concern-few-expect-improvement/

A semblance of calm has returned to Davos. The World Economic Forum (WEF) has finished this year’s proceedings and most of the 2,633 participants have returned home. Crews are busy disassembling the vast quantities of hi-tech hardware that provided security, communications, and other conveniences for the event.

“On the eve of the Davos get-together, anti-poverty organisation Oxfam International released a study showing that by this time next year the wealthiest 1% of the world population will own assets equal in value to those held by the remaining 99%.”

“Governments would be well-advised to use more imaginative ways of rebalancing incomes with policies supporting the creation of more and better jobs at the lower level while simultaneously investing in education.”

According to Anne-Marie Slaughter of the New America Foundation, a US think-tank, government leaders the world over seem unable to ensure or deliver social cohesion.

Though its flagship meeting in Davos has now ended, the World Economic Forum is by no means done.

Tomi Engdahl says:

Why Coding Is Not the New Literacy

http://news.slashdot.org/story/15/01/27/0043257/why-coding-is-not-the-new-literacy

There has been a furious effort over the past few years to bring the teaching of programming into the core academic curricula. Enthusiasts have been quick to take up the motto: “Coding is the new literacy!” But long-time developer Chris Granger argues that this is not the case: “When we say that coding is the new literacy, we’re arguing that wielding a pencil and paper is the old one. Coding, like writing, is a mechanical act. All we’ve done is upgrade the storage medium. …”

He further suggests that if anything, the “new” literacy should be modeling — the ability to create a representation of a system that can be explored or used.

Coding is not the new literacy

http://www.chris-granger.com/2015/01/26/coding-is-not-the-new-literacy/

Despite the good intentions behind the movement to get people to code, both the basic premise and approach are flawed. The movement sits on the idea that “coding is the new literacy,” but that takes a narrow view of what literacy really is.

Coding is not the fundamental skill

Modeling is the new literacy

Modeling is creating a representation of a system (or process) that can be explored or used.

This definition encompasses a few skills, but the most important one is specification. In order to represent a system, we have to understand what it is exactly, but our understanding is mired in assumptions.

Defining a system or process requires breaking it down into pieces and defining those, which can then be broken down further. It is a process that helps acknowledge and remove ambiguity and it is the most important aspect of teaching people to model. In breaking parts down we can take something overwhelmingly complex and frame it in terms that we understand and actions we know how to do.

The parallel to programming here is very apparent: writing a program is breaking a system down until you arrive at ideas that a computer understands and actions it knows how to do. In either case, we have to specify our system and we do that through a process of iterative crafting.

Creation is exploration

We create models by crafting them out of material.

Sometimes our material is wood, metal, or plastic. Other times it’s data or information from our senses. Either way, we start our models with a medium that we mold.

The process of creating a model is an act of discovery – we find out what pieces we need as we shape our material. This means we needn’t fully specify a system to get started, we can simply craft new pieces as we go.

Exploration is understanding

Physical modeling teaches us the value of being able to take things apart, whether that’s removing every single screw and laying the whole engine block out on the garage floor or just removing the alternator and having a look. By pulling something apart we can directly explore what makes it up. This is why interfaces in movies like Iron Man are so compelling – they allow us to just dive in.

Imagine what we could learn if we had the ability to break anything down, to reach inside it, and see what that little bit there does. The more ways we find to represent systems such that we retain that ability, the more power we will have to understand complex things.

Digital freedom

By transposing our models to a computer, we can offload the work necessary to change, simulate, and verify.

There are a number of tools that already help us do this – from Matlab to Quartz Composer – but Excel is unquestionably the king.

A fundamental disconnect

Coding requires us to break our systems down into actions that the computer understands, which represents a fundamental disconnect in intent. Most programs are not trying to specify how things are distributed across cores or how objects should be laid out in memory. We are not trying to model how a computer does something. Instead, we are modeling human interaction, the weather, or spacecraft. From that angle, it’s like trying to paint using a welder’s torch. We are employing a set of tools designed to model how computers work, but we’re representing systems that are nothing like them.

How we teach children

We are natural-born modelers and we learn by creating representations that we validate against the world. Our models often start out too simplistic or even obviously wrong, but that’s perfectly acceptable (and arguably necessary), as we can continue to update them as we go. Any parent could give you examples of how this plays out in their children, though they may not have fully internalized that this is what’s happening.

As such, we have to teach children how modeling happens, which we can break down into four distinct processes:

Specification: How to break down parts until you get to ideas and actions you understand.

Validation: How to test the model against the real world or against the expectations inside our heads.

Debugging: How to break down bugs in a model. A very important lesson is that an invalid model is not failure, it just shows that some part of the system behaves differently than what we modeled.

Exploration: How to then play with the model to better understand possible outcomes and to see how it could be used to predict or automate some system.
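The four processes above can be sketched on a toy system. This is a minimal illustration, not something from Granger's article: the falling-object model, the function names, and the measurements are all made up for the example.

```python
# Specification: break the system into parts we understand.
# Assumed toy system: an object dropped from rest, ignoring air resistance.
G = 9.81  # gravitational acceleration in m/s^2


def fall_distance(seconds):
    """Distance fallen from rest after `seconds`, in metres."""
    return 0.5 * G * seconds ** 2


def validate(observations, tolerance=0.05):
    """Validation: list the observations the model misses by more than
    `tolerance` (relative to the measured value)."""
    mismatches = []
    for seconds, measured in observations:
        predicted = fall_distance(seconds)
        if abs(predicted - measured) > tolerance * measured:
            mismatches.append((seconds, measured, predicted))
    return mismatches


# Hypothetical measurements (time in s, distance in m), invented for illustration.
observations = [(1.0, 4.9), (2.0, 19.6), (3.0, 40.0)]

# Debugging: a mismatch is not failure; it shows where the real system
# behaves differently from the model (here, perhaps air resistance).
mismatches = validate(observations)
for seconds, measured, predicted in mismatches:
    print(f"t={seconds}s: measured {measured} m, model says {predicted:.2f} m")

# Exploration: play with the model to see outcomes we did not measure.
for seconds in (0.5, 1.5, 4.0):
    print(f"t={seconds}s -> {fall_distance(seconds):.2f} m")
```

In this made-up data set only the 3-second measurement falls outside the tolerance, which is the kind of signal that sends a modeler back to the specification step.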

How we should teach adults

Realistically, we should be teaching ourselves the same things, but unfortunately adult education rarely allows for undirected exploration (we’re just trying to get something done).

If we assume that at some point better tools for modeling come into existence, then being able to model some system or process may be all you need to automate it.

“The computer revolution hasn’t happened yet”

Tomi Engdahl says:

Professor: Young People Are “Lost Generation” Who Can No Longer Fix Gadgets

http://tech.slashdot.org/story/15/01/04/1837235/professor-young-people-are-lost-generation-who-can-no-longer-fix-gadgets

“Young people in Britain have become a lost generation who can no longer mend gadgets and appliances because they have grown up in a disposable world, the professor giving this year’s Royal Institution Christmas lectures has warned.”

“Prof George claims that many broken or outdated gadgets could be fixed or repurposed with only a brief knowledge of engineering and electronics.”

Young people are ‘lost generation’ who can no longer fix gadgets, warns professor

http://www.telegraph.co.uk/news/science/science-news/11298927/Young-people-are-lost-generation-who-can-no-longer-fix-gadgets-warns-professor.html

Danielle George, Professor of Radio Frequency Engineering at the University of Manchester, claims that the under 40s expect everything to ‘just work’ and have no idea what to do when things go wrong.

Unlike previous generations, who would ‘make do and mend’, young people now just chuck out their faulty appliances and buy new ones.

This year’s Royal Institution Christmas Lectures are entitled ‘Sparks will fly: How to hack your home’, and she hopes they will inspire people to think about what else they can do with common household objects.

“All of these things in our home do seem to work most of the time and because they don’t break we just get used to them. They have almost become like Black Boxes which never die. And when they do we throw them away and buy something new.

“But there is now a big maker community who are thinking hard about what we do with all of these gadgets. They are remaking and repurposing things.”

Hundreds of websites have emerged in the last few years where users post ideas about home hacks and electronics.

Professor George added: “I want young people to realise that they have the power to change the world right from their bedroom, kitchen table or garden shed.

“Today’s generation of young people are in a truly unique position. The technology we use and depend on every day is expanding and developing at a phenomenal rate and so our society has never been more equipped to be creative and innovative.

“It was the first time I realised how mathematics and physics could be used in a practical and useful way and I knew immediately that this kind of hands-on investigation was what I wanted to do in life.

“If we all take control of the technology and systems around us, and think creatively, then solving some of the world’s greatest challenges is only a small step away. I believe everyone has the potential to be an inventor.”

Tomi Engdahl says:

Tiffany Shlain wants us all to unplug our gadgets every now and again

http://www.engadget.com/2015/01/08/tiffany-shlian-interview/?utm_source=Feed_Classic_Full&utm_medium=feed&utm_campaign=Engadget&?ncid=rss_full

Tiffany Shlain, the Emmy-nominated filmmaker and host of AOL’s The Future Starts Here, is steeped in technology. That’s why it may surprise you to learn that she insists that her family, for one day each week, ditches their smartphones and tablets to indulge in a simpler life. These “technology shabbats” are one of the ways that she’s learned to unplug, relax and reconnect with her humanity. In her mind, technology’s power for good is enormous, but it’s also dangerous, shortening our attention spans and sending our amygdalae into overdrive.

Tomi Engdahl says:

Disturbed by Superhuman?

http://www.eetimes.com/author.asp?section_id=36&doc_id=1325426&

How much of this advancement toward “superhuman” abilities enabled by technological means will be deemed socially legitimate and psychologically healthy?

“Faster, Higher, Stronger” is a well-known Olympics motto. The noble goal speaks volumes of the eternal human desire for self-improvement. It’s not just athletes who share that goal. We all aspire to be better — smarter and more efficient — in the way we study, work and play.

The big question in my mind lately, though, is how much of this advancement toward “superhuman” abilities enabled by biological, chemical, electrical and mechanical means will be deemed socially legitimate and psychologically healthy.

On one hand, technologists foresee a future when humans and machines will converge into “technological singularity.” On the other hand, many of us worry that someday we will be controlled by machines, not the other way around.

But here’s the paradox. We celebrate when technology helps the disabled get better. I’d love to see my wheelchair-bound mother walk again. But some of us find it disquieting when the perfectly capable turn themselves superhuman via drugs, genetic alteration, electronics implants, or even via wearable devices.

Where do you draw the line?

But I also think that we should never underestimate consumers’ deep-seated distrust of machines. A centuries-old human instinct — machines vs. humans — rears its ugly head again at every turn of technological evolution. Arnold Schwarzenegger turned this fear into a veritable franchise in the “Terminator” films.

Why am I uncomfortable among people with AR glasses?

I’m wearing glasses, too

I sense that society is more ready than ever before to embrace new technologies that enable human efficiency. But that apparent willingness clashes with last year’s Google Glass backlash. I don’t think that even the smart marketeers at Google saw that coming.

Tomi Engdahl says:

This Entrepreneur Quit His Day Job To Make A Little Rubber Thing For Your Headphones

http://techcrunch.com/2015/01/24/this-entrepreneur-quit-his-day-job-to-make-a-little-rubber-thing-for-your-headphones/?ncid=rss&cps=gravity_1462_4576528484411117420

A cursory search under “headphone management” will bring up virtually hundreds of products. Spoolee’s novel contribution to the category is a twig adrift in an ocean of competition. This seems like a small and unusual product for us to cover but there are three things that make this product stand out and that are worth mentioning:

1. The industrial designer who created it—Ray Walker—quit a lucrative day job to go after this startup dream of his. That’s mostly what I want to talk about.

2. It is pretty clever…definitely filed under “holy crap, why didn’t I think of that.”

3. It mostly works as advertised.

But first the basics: what is it and how does it work. According to Ray, the idea for Spoolee came pretty quickly as he was solving a problem mentioned to him by his wife: her headphones kept getting knotted up and that was annoying. We’ve all been there.

So Ray created Spoolee, which recently completed its Kickstarter for funding, to solve this problem. Spoolee is a neoprene loop that fits on your finger, like a ring, and makes it super easy to roll your headphones around it until it’s fully wrapped. A Velcro loop keeps it from unwinding.

But what doesn’t make sense is what it is that drives a person to give up a good, stable job in order to make little neoprene rings. It seems like a big risk for something so small.

Part of it is probably the same force that made this guy become an industrial designer in the first place: a need to create. There has to be more to it than that, though…more than just a need to create and solve problems. What is the intangible force that caused this enterprise to become a startup? Where did that transition to entrepreneur happen? What pushes a person over the edge to burn the ships on the shore and commit?

Steve Siebold described the wealthy in his contentious and possibly mistitled story for Business Insider, by saying that “the wealthy are comfortable being uncomfortable” and “the wealthy dream about the future”.

Tomi Engdahl says:

Talent management

21st-Century Talent Spotting

https://hbr.org/2014/06/21st-century-talent-spotting

Why did the CEO of the electronics business, who seemed so right for the position, fail so miserably? And why did Algorta, so clearly unqualified, succeed so spectacularly? The answer is potential: the ability to adapt to and grow into increasingly complex roles and environments. Algorta had it; the first CEO did not.

Having spent 30 years evaluating and tracking executives and studying the factors in their performance, I now consider potential to be the most important predictor of success at all levels, from junior management to the C-suite and the board.

With this article, I share those lessons. As business becomes more volatile and complex, and the global market for top professionals gets tighter, I am convinced that organizations and their leaders must transition to what I think of as a new era of talent spotting—one in which our evaluations of one another are based not on brawn, brains, experience, or competencies, but on potential.

A New Era

The first era of talent spotting lasted millennia. For thousands of years, humans made choices about one another on the basis of physical attributes.

I was born and raised during the second era, which emphasized intelligence, experience, and past performance. Throughout much of the 20th century, IQ—verbal, analytical, mathematical, and logical cleverness—was justifiably seen as an important factor in hiring processes (particularly for white-collar roles), with educational pedigrees and tests used as proxies. Much work also became standardized and professionalized. Many kinds of workers could be certified with reliability and transparency, and since most roles were relatively similar across companies and industries, and from year to year, past performance was considered a fine indicator. If you were looking for an engineer, accountant, lawyer, designer, or CEO, you would scout out, interview, and hire the smartest, most experienced engineer, accountant, lawyer, designer, or CEO.

I joined the executive search profession in the 1980s, at the beginning of the third era of talent spotting, which was driven by the competency movement still prevalent today.

Now we’re at the dawn of a fourth era, in which the focus must shift to potential. In a volatile, uncertain, complex, and ambiguous environment (VUCA is the military-acronym-turned-corporate-buzzword), competency-based appraisals and appointments are increasingly insufficient. What makes someone successful in a particular role today might not tomorrow if the competitive environment shifts, the company’s strategy changes, or he or she must collaborate with or manage a different group of colleagues. So the question is not whether your company’s employees and leaders have the right skills; it’s whether they have the potential to learn new ones.

Unfortunately, potential is much harder to discern than competence (though not impossible, as I’ll describe later).

The recent noise about high unemployment rates in the United States and Europe hides important signals: Three forces—globalization, demographics, and pipelines—will make senior talent ever scarcer in the years to come.

The impact of demographics on hiring pools is also undeniable. The sweet spot for rising senior executives is the 35-to-44-year-old age bracket, but the percentage of people in that range is shrinking dramatically.

The third phenomenon is related and equally powerful, but much less well known: Companies are not properly developing their pipelines of future leaders.

In many companies, particularly those based in developed markets, I’ve found that half of senior leaders will be eligible for retirement within the next two years, and half of them don’t have a successor ready or able to take over. As Groysberg puts it, “Companies may not be feeling pain today, but in five or 10 years, as people retire or move on, where will the next generation of leaders come from?”

How can you tell if a candidate you’ve just met—or a current employee—has potential? By mining his or her personal and professional history, as I’ve just done with Algorta’s. Conduct in-depth interviews or career discussions, and do thorough reference checks to uncover stories that demonstrate whether the person has (or lacks) these qualities. For instance, to assess curiosity, don’t just ask, “Are you curious?” Instead, look for signs that the person believes in self-improvement, truly enjoys learning, and is able to recalibrate after missteps. Questions like the following can help:

How do you react when someone challenges you?

How do you invite input from others on your team?

What do you do to broaden your thinking, experience, or personal development?

How do you foster learning in your organization?

What steps do you take to seek out the unknown?

Always ask for concrete examples, and go just as deep in your exploration of motivation, insight, engagement, and determination. Your conversations with managers, colleagues, and direct reports who know the person well should be just as detailed.

Researchers have found that while the best interviewers’ assessments have a very high positive correlation with the candidates’ ultimate performance, some interviewers’ opinions are worse than flipping a coin.

By contrast, companies that emphasize the right kind of hiring vastly improve their odds.

“Pushing your high potentials up a straight ladder won’t accelerate their growth—uncomfortable assignments will.”

Your final job is to make sure your stars live up to the high potential you’ve spotted in them by offering development opportunities that push them out of their comfort zones.

Pushing your high potentials up a straight ladder toward bigger jobs, budgets, and staffs will continue their growth, but it won’t accelerate it. Diverse, complex, challenging, uncomfortable roles will. When we recently asked 823 international executives to look back at their careers and tell us what had helped them unleash their potential, the most popular answer, cited by 71%, was stretch assignments. Job rotations and personal mentors, each mentioned by 49% of respondents, tied for second.

Tomi Engdahl says:

Ask Slashdot: What Makes a Great Software Developer?

http://ask.slashdot.org/story/15/01/27/2340240/ask-slashdot-what-makes-a-great-software-developer

What does it take to become a great, or even just a good, software developer? According to developer Michael O. Church’s posting on Quora (later posted on LifeHacker), it’s a long list: great developers are unafraid to learn on the job, manage their careers aggressively, know the politics of software development (which he refers to as ‘CS666’), avoid long days when feasible, and can tell fads from technologies that actually endure… and those are just a few of his points.

What Makes a Great Software Developer?

http://news.dice.com/2015/01/27/makes-great-software-developer/?CMPID=AF_SD_UP_JS_AV_OG_DNA_

What does it take to become a great—or even just a good—software developer?

According to developer Michael O. Church’s posting on Quora (later posted on LifeHacker), developers who want to compete in a highly competitive industry should be unafraid to learn on the job; manage their careers aggressively; recognize under- and over-performance (and avoid both); know the politics of software development (which he refers to as “CS666”); avoid fighting other people’s battles; and physically exercise as often as possible.

That’s not all: Church feels that developers should also manage their hours (and avoid long days when feasible), learn as much as they can, and “never apologize for being autonomous or using your own time.”

Whether or not you subscribe to Church’s list, he makes another point that’s valuable to newbie and experienced developers alike: Recognize which technologies endure, and which will quickly fade from the scene. “Half of the ‘NoSQL’ databases and ‘big data’ technologies that are hot buzzwords won’t be around in 15 years,” he wrote. “On the other hand, a thorough working knowledge of linear algebra (and a lack of fear with respect to the topic!) will always suit you well.” While it’s good to know what’s popular, he added, “You shouldn’t spend too much time [on fads].” (Guessing which are fads, however, takes experience.)

His own conclusion: If you want to become a great programmer or developer, learn to slow down.

“If you create something with a solid foundation that is usable, maintainable and meets a real need, it will be as relevant when you finally bring it to market as it was when you came up with the idea, even if it took you much longer than you anticipated,” Gertner wrote. “In my experience, projects fail far more often because the software never really works properly than because they missed a tight market window.”

But that hasn’t stopped many developers from embracing the concept of speed, even if it means skipping over things like code reviews.

“We need to recognize that our job isn’t about producing more code in less time, it’s about creating software that is stable, performant [sic], maintainable and understandable.”

What I Wish I Knew When Starting Out as a Software Developer: Slow the Fuck Down

http://blog.salsitasoft.com/what-i-wish-i-knew-when-starting-out-as-a-software-developer-slow-the-fuck-down/

Most obviously, Michael urges young programmers deciding how much effort to put into their work to “ebb towards underperformance”. He goes on to impart a wealth of useful tips for navigating corporate politics and coming out on top.

More to the point, however, is that only one of Michael’s fourteen points (“Recognize core technological trends apart from fluff”) is specific to software development at all. The rest are good general career advice whatever your line of work. The implication of the article’s title (“…as a software developer”) is that it should be somehow specific to our industry, and that’s how I would approach the question.

When I was a teenager, I thought I was a truly great programmer. I could sit down and bang out a few hundred lines of C or Pascal and have them compile and run as expected on the first try. I associated greatness in software development with an ability to solve hard technical problems and a natural, almost instinctive sense of how to translate my solutions into code.

Even more than that, I associated it with speed.

It wasn’t until after university that cracks in my self-satisfaction started to show.

The most striking lesson I have learned is that timeframes that seem absurdly bloated beforehand tend to look reasonable, even respectable, at the end of a project. Countless times over the course of my career, my colleagues and I have agreed that a project couldn’t possibly take more than, say, three months. Then it ends up taking nine months or twelve months or more (sometimes much more). But looking back, with all the details and pitfalls and tangents in plain sight, you can see that the initial estimate was hopelessly unrealistic. If anything, racing to meet it only served to slow the project down.

In my experience, projects fail far more often because the software never really works reliably than because they missed a tight market window.

And yet the vast majority of developers still seem reluctant to spend time on unit tests, design documentation and code reviews (something I am particularly passionate about). There is a widespread feeling that our job is about writing code, and that anything else is a productivity-killing distraction.

We need to recognize that our job isn’t about producing more code in less time, it’s about creating software that is stable, performant, maintainable and understandable (to you or someone else, a few months or years down the road).

What I Wish I Knew When I Started My Career as a Software Developer

http://lifehacker.com/what-i-wish-i-knew-when-i-started-my-career-as-a-softwa-1681002791

1. Don’t be afraid to learn on the job.

2. Manage your career aggressively. Take responsibility for your own education and progress.

3. Recognize under-performance and over-performance and avoid them.

4. Never ask for permission unless it would be reckless not to. Want to spend a week investigating something on your own initiative? Don’t ask for permission. You won’t get it.

5. Never apologize for being autonomous or using your own time.

6. Learn CS666 (what I call the politics of software development) and you can usually forget about it. Refuse to learn it, and it’ll be with you forever.

7. Don’t be quixotic and try to prove your bosses wrong. When young engineers feel that their ideas are better than those of their superiors but find a lack of support, they often double down and throw in a lot of hours.

8. Don’t fight other people’s battles. As you’re young and inexperienced, you probably don’t have any real power in most cases. Your intelligence doesn’t automatically give you credibility.

9. Try to avoid thinking in terms of “good” versus “bad.” Be ready to play it either way. Young people, especially in technology, tend to fall into those traps—labeling something like a job or a company “good” or “bad” and thus reacting emotionally and sub-optimally.

10. Never step back on the salary scale except to be a founder. As a corollary, if you step back, expect to be treated as a founder. A 10% drop is permissible if you’re changing industries.

11. Exercise. It affects your health, your self-confidence, your sex life, your poise and your career. That hour of exercise pays itself off in increased productivity.

12. Long hours: sometimes okay, usually harmful. The difference between 12% growth and 6% growth is meaningful.

13. Recognize core technological trends apart from fluff. Half of the “NoSQL” databases and “big data” technologies that are hot buzzwords won’t be around in 15 years. On the other hand, a thorough working knowledge of linear algebra (and a lack of fear with respect to the topic!) will always suit you well. There’s a lot of nonsense in “data science” but there is some meat to it.

14. Finally, learn as much as you can. It’s hard. It takes work. This is probably redundant with some of the other points, but once you’ve learned enough politics to stay afloat, it’s important to level up technically.

Tomi Engdahl says:

Fried cable sparked EE profession

http://www.edn.com/electronics-blogs/designcon-central-/4438499/Fried-cable-sparked-EE-profession?_mc=NL_EDN_EDT_EDN_today_20150128&cid=NL_EDN_EDT_EDN_today_20150128&elq=7cb8d2404532459b8cb7dd6b1e39e3ba&elqCampaignId=21374

A fried transatlantic telegraph cable sparked the birth of the electrical engineering profession, a bizarre beginning described by a keynoter at DesignCon.

The work of paper baron Cyrus West Field on a cable connecting Newfoundland and Ireland in the mid-1800s inadvertently turned “electrical engineering from an amateur hobby to a full-on profession,” said Thomas H. Lee, a Stanford professor and entrepreneur. “EEs have been making trouble ever since,” he said.

It was Field’s failure with his first big effort that spawned the unexpected success. The 1857 cable was only an inch in diameter and performed horribly on its mission of delivering one to two words a minute for $10/word.

To improve the connection, the chief electrician on the project, E.O. Wildman Whitehouse, increased voltage across the 3,000 km line until at 1,500V it was fried. A scandal followed in which the public thought the cable and Field were frauds.

A British commission investigated the failure. It put inventor William Thomson (later Lord Kelvin) in charge of a second effort and called for standards in what had been known as the electric arts where there were no metrics or degree programs.

Thomson led the design of a better, thicker cable that ultimately carried eight to ten words a minute, still at the whopping price of $10/word.

As a result of the British investigation, the first standards of measure for electricity were created in the 1870s — the ampere, ohm, and volt.

“Today we produce 100 transistors for every ant and that is still growing exponentially. That’s amazing to me,” Lee said.

Tomi Engdahl says:

Moore’s Law extends to cover human progress

http://www.edn.com/electronics-blogs/eye-on-standards/4438486/Moore-s-Law-extends-to-cover-human-progress?_mc=NL_EDN_EDT_EDN_today_20150128&cid=NL_EDN_EDT_EDN_today_20150128&elq=7cb8d2404532459b8cb7dd6b1e39e3ba&elqCampaignId=21374

Moore’s Law, famous for predicting the exponential growth of computing power over 40 years, comes from a simple try-fail/succeed model of incremental improvement. The predictive success of Moore’s Law seems uncanny, so let’s take a closer look to get an idea of where it comes from.

Gordon Moore proposed that, as long as there is incentive, techniques will improve, components will shrink, prices will scale, the cycle will repeat and we’ll continue to see exponential growth. Van Buskirk has evidence that the driving incentive is not limited to economic supply and demand arguments but can also include far weaker incentives such as simply being aware that a quality is desirable for moral or aesthetic reasons.

Moore’s-like laws emerge from the simple fact that you and all of your colleagues keep on making improvements as long as you’re both motivated and improvements are possible. As far as the latter requirement is concerned, I don’t have to tell you how clever engineers can be. While no one is going to violate the laws of thermodynamics—no perpetual motion machines or perfect engines—engineers are pretty good at pushing right up to those constraints and sometimes finding loopholes.

Start with something—a system or a doodad—that works with some proficiency. Along with thousands of other engineers, you set out to improve your doodad. You try stuff. Most of the stuff you try doesn’t work, but now and then, you improve the performance of the doodad by some percentage, maybe 0.1%, maybe 10%, maybe you have a disrupting idea that improves it by 1000%. Mostly, we experience many small, 1%-ish improvements. Meanwhile, all of your colleagues around the world are pushing the same envelope but in different directions.
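The try-fail/succeed process described above can be sketched as a small simulation. This is only an illustration, not the model from the article: the success rate, the exponential distribution of gains, and the function names `simulate_improvements` and `doubling_attempts` are all assumptions chosen for the example. It shows how many small multiplicative improvements, most attempts failing, compound into roughly exponential growth with a steady doubling interval.

```python
import math
import random


def simulate_improvements(attempts, success_rate=0.05, mean_gain=0.01, seed=0):
    """Return the performance level after each attempt, starting at 1.0.

    Assumed toy parameters: each attempt succeeds with probability
    `success_rate`; a success multiplies performance by (1 + gain), where
    gain is exponentially distributed with mean `mean_gain` (mostly small
    1%-ish gains, occasionally a much larger one).
    """
    rng = random.Random(seed)
    performance = 1.0
    history = [performance]
    for _ in range(attempts):
        if rng.random() < success_rate:
            gain = rng.expovariate(1.0 / mean_gain)
            performance *= 1.0 + gain
        # Failed attempts leave performance unchanged.
        history.append(performance)
    return history


def doubling_attempts(history):
    """Estimate how many attempts it takes for performance to double."""
    growth_per_attempt = math.log(history[-1] / history[0]) / (len(history) - 1)
    return math.log(2) / growth_per_attempt


history = simulate_improvements(attempts=200_000)
print(f"final performance: {history[-1]:.3g}x the starting level")
print(f"approximate attempts per doubling: {doubling_attempts(history):.0f}")
```

Raising `success_rate` or the number of attempts per unit of time shortens the doubling interval, which is the sense in which trying more things faster speeds up the curve.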

The improvement time scale for Moore’s original law is about 18 months.

But how far we have to reach to pluck the proverbial fruit changes after each innovation. Figure 5 shows a model as above, but with randomly changing bell curves after each innovation.

I’m out of space here, but the point I want to make is that, as a problem solver, regardless of your field of endeavor, you throw those dice every day and Moore’s Law is the quantitative result of the interplay of your successes and failures. As a sentient dice thrower, you can affect the rate of improvement—maybe not across the whole development process, Moore’s 40 years, but within sets of a few years you can crank it up.

Try more ideas, as many different ways to improve your literal or figurative widget as you can think of.

Do the easy ones first.

Forget the distinction between success and failure, just throw the dice.

Don’t let your own prejudices trip you up, just try more things faster.

Tomi Engdahl says:

10 standards for effective tech standards

http://www.edn.com/electronics-blogs/designcon-central-/4438516/10-standards-for-effective-tech-standards?_mc=NL_EDN_EDT_EDN_today_20150129&cid=NL_EDN_EDT_EDN_today_20150129&elq=9fe2efc4f23841079a3d28d6b095ed98&elqCampaignId=21403

Bartleson’s 10 commandments for effective standards

1. Cooperate on standards; compete on products: Bartleson calls this “the golden rule” of the standards process. She describes it as being mature enough to cooperate to create a standard, yet savvy enough to later use the standard in competing products.

2. Use caution when mixing patents and standards: Patents and standards are contentious and powerful. Mix them with care.

3. Know when to stop: There are things that should not be standardized.

4. Be truly open: “‘Open’ can mean different things to different people,” Bartleson said. It does not necessarily mean free. But if a standard is truly a standard, participation in its standard process should be available to all and its technologies should be available to all.

5. Realize there is no neutral party: Recognize that “everyone who participates in a standard committee has a reason for being there,” she noted.

6. Leverage existing organizations and proven processes: Modeling a new standard’s process off of the successful procedures of an existing group can save time and effort.

7. Think relevance: “The biggest measure of a standard is its adoption,” Bartleson said.

8. Recognize there is more than one way to create a standard: Different groups have different needs. Processes must be adjusted.

9. Start with contributions, not from scratch: Do not create a situation that lends itself toward dominance by any contributor to the standard who may build up from a sole perspective.

10. Know that standards have technical and business aspects: This can be a big struggle, but don’t forget that there’s a business side to what happens in a standard process.

At minimum, she said, “Respect the standards that are around you and the people who created them.”

Tomi Engdahl says:

What Drove CES 2015 Innovation? IP and IP Subsystems

http://www.eetimes.com/author.asp?section_id=36&doc_id=1325454&

How do we manage all those blocks in an age of exploding block usage?

If you want to see what electronic design innovation is all about these days, come to the Consumer Electronics Show.

The array of technology development showcased here the first week of 2015 was breathtaking. The Sands was packed with almost countless wearables vendors, IOT systems houses, and 3-D printers.

But for these guys — from a market standpoint — there’s a shakeout ahead: There are too many vendors in the wearables and IOT space making too-similar products.

Rise of IP subsystems

What’s enabling these systems innovations is of course IP. You’ve no doubt seen the slideware showing that the number of IP blocks in an average SoC has crested 100 and is moving quickly north. That’s 10 times the number of blocks we designed in just a few short years ago.

This explosion in block usage is creating its own design complexity (how do we manage all those blocks?).

“Instead of dealing with SoC design at the lowest common denominator — the discrete IP block, SoC designers now look to move up a layer of abstraction to design with system level functionality to reduce the effort and cost associated with complex SoC designs today.”

“the start of a period in which large SIP providers will exert a concerted effort to create IP subsystems, combining many discrete IP blocks into larger, more converged IP products to offer better performance and to reduce the cost of IP integration into complex SoCs.”

Semico forecasts the IP subsystem market will more than triple from $108 million in 2012 to nearly $350 million in annual sales in 2017. IP providers clearly understand that delivering IP is just one piece of the puzzle and that to enable system development there needs to be a subsystems push as well.
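As a rough check on what those two figures imply, the snippet below computes the overall multiple and the compound annual growth rate. It is a back-of-the-envelope sketch: "nearly $350 million" is rounded to 350 as an assumption, and the helper name `cagr` is made up for the example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures from the forecast, in millions of dollars.
# Assumption: "nearly $350 million" is treated as exactly 350.
start_2012 = 108.0
end_2017 = 350.0

multiple = end_2017 / start_2012
rate = cagr(start_2012, end_2017, years=2017 - 2012)

print(f"overall multiple: {multiple:.2f}x")
print(f"implied annual growth: {rate:.1%}")
```

Under that rounding the market grows a little over threefold in five years, which works out to roughly a quarter more revenue each year.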

This trend — and the systems it enables — is going to drive much more rapid innovation in the months and years ahead.

Tomi Engdahl says:

This is normally the way consumer tech works – people have a vision a long time before the technology is really there to implement it (remember the Newton, or the Nokia 7650).

Today it sometimes seems like things are the other way around. Want to make a connected door lock? Camera collar for your dog? Intelligent scale? Eye tracker? The electronic components are all there, more or less off the shelf. The challenge is in the vision for what the product should be, what people would do with it and how you would take it to market. That is, hardware has lapped software, so to speak.

The challenges, I think, come from where the intelligence and the software will actually sit, and how much there needs to be. If much of the hardware tech is a commodity, then so is the hardware itself, unless you can find a way to create an additional layer of value with software and service and experience (and design, as with Ringly). It’s the intelligence and the software that makes the difference between a commodity widget and something that has some real value for an entrepreneur (from outside or inside China). Not all device types really have network effects, and not all devices can be the hub of your connected home, at least not all at once. Some categories are going to be commodity widgets.

One way to get at this problem is to work out what the SKU might look like at retail, and who will sell it. Is it the Apple Store, Home Depot, Best Buy or your alarm company? Is it a point solution, or an add-on for an industry-standard platform, or an accessory for your Nest or iPhone, or Apple Television? Different channels will produce different results.

Nest found a clear, easy-to-communicate use case with lots of scope to avoid commoditization through software, and also scope to be used as a hub – a trojan horse – to sell other things. Now your car will tell your Nest to turn on the heating when you’re almost home. Conversely things like Apple’s HomeKit and Quirky’s Wink (Quirky is an a16z investment) aim for a flatter structure – your phone itself is the hub, and you can buy whatever point solution you want.