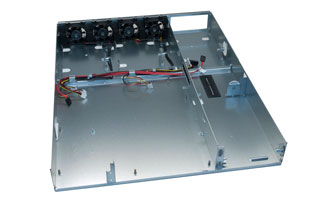

Facebook Open Sources Its Servers and Data Centers. Facebook has shared many details of its new server and data center design in the article Building Efficient Data Centers with the Open Compute Project and in the project itself. The Open Compute Project effort will bring this web-scale computing to the masses. The new data center is designed for AMD and Intel processors and the x86 architecture.

You might ask why Facebook open-sourced its data centers. The answer is that Facebook has opened up a whole new front in its war with Google over top technical talent and ad dollars. “By releasing Open Compute Project technologies as open hardware,” Facebook writes, “our goal is to develop servers and data centers following the model traditionally associated with open source software projects. Our first step is releasing the specifications and mechanical drawings. The second step is working with the community to improve them.”

By the way, this data center approach has some similarities to Google’s data center designs, at least to the details Google has published. Despite Google’s professed love for all things open, details of its massive data centers have always been a closely guarded secret. Google usually talks about its servers once they’re obsolete.

Open Compute Project is not the first open source server hardware project. The article How to build cheap cloud storage shows another interesting project.

107 Comments

Tomi Engdahl says:

Facebook expands Luleå, Sweden campus with new data center

http://www.lightwaveonline.com/articles/2018/05/facebook-expands-lule-sweden-campus-with-new-data-center.html?cmpid=enl_lightwave_lightwave_datacom_2018-05-08&pwhid=6b9badc08db25d04d04ee00b499089ffc280910702f8ef99951bdbdad3175f54dcae8b7ad9fa2c1f5697ffa19d05535df56b8dc1e6f75b7b6f6f8c7461ce0b24&eid=289644432&bid=2096346

Business Sweden said Facebook has announced plans to expand its Luleå, Sweden campus to over 100,000 m² (1 million square feet) with the addition of a third data center (news confirmed via the Luleå campus’s very own Facebook page). The nearly 50,000 m² data center will be operational in 2021, says Business Sweden.

In 2011, Facebook established the data center in Sweden. Facebook has invested U.S. $1.2 billion in the Luleå data center since then, says Business Sweden.

Tomi Engdahl says:

How Facebook configures its millions of servers every day

https://techcrunch.com/2018/07/19/how-facebook-configures-its-millions-of-servers-every-day/?sr_share=facebook&utm_source=tcfbpage

When you’re a company the size of Facebook, with more than two billion users on millions of servers, running thousands of configuration changes every day involving trillions of configuration checks, configuration is, as you can imagine, kind of a big deal. As with most things at Facebook, the company faces scale problems few companies have to deal with, and it often reaches the limits of mere mortal tools.

To solve its unique issues, the company developed a new configuration delivery process called Location Aware Delivery, or LAD for short. Before developing LAD, the company had been using an open source tool called ZooKeeper to distribute configuration data.

memphis whole house generators says:

I have to thank you for the efforts you’ve put in penning this website. I’m hoping to view the same high-grade blog posts by you in the future as well. In fact, your creative writing abilities have inspired me to get my own website now.

Tomi Engdahl says:

Facebook Open Switching System (“FBOSS”) and Wedge in the open

https://engineering.fb.com/data-center-engineering/facebook-open-switching-system-fboss-and-wedge-in-the-open/

Today, we are happy to announce the initial release of our Facebook open switching system (code-named “FBOSS”) project on GitHub and our proposed contribution of the specification for our top-of-rack switch (code-named “Wedge”) to the OCP networking project.

The initial FBOSS release consists primarily of the FBOSS agent, a daemon that programs and controls the ASIC. This process runs on each switch and manages the hardware forwarding ASIC. It receives information via configuration files and thrift APIs and then programs the correct forwarding and routing entries into the chip. It also processes packets from the ASIC that are destined to the switch itself, such as control plane protocol traffic, and other packets that cannot be processed solely in hardware.
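The division of labor described above can be illustrated with a small software-only sketch. This is not FBOSS code and does not use its Thrift API: the `ForwardingTable` and `Agent` classes, the `CPU_PORT` name, and the config layout are all hypothetical, and the table is a plain dictionary standing in for the ASIC. The sketch also assumes that packets with no matching route are handed to the agent, as one example of traffic that cannot be handled in hardware alone.

```python
import ipaddress
from dataclasses import dataclass, field

CPU_PORT = "cpu"  # hypothetical name for the path that hands packets to the agent


@dataclass
class ForwardingTable:
    """Software stand-in for the ASIC routing table (hypothetical)."""
    routes: dict = field(default_factory=dict)  # ip_network -> egress port

    def program(self, prefix: str, port: str) -> None:
        self.routes[ipaddress.ip_network(prefix)] = port

    def lookup(self, address: str):
        """Longest-prefix match; returns None if no route covers the address."""
        addr = ipaddress.ip_address(address)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        return self.routes[max(matches, key=lambda net: net.prefixlen)]


class Agent:
    """Toy agent: turns configuration into table entries and handles punted packets."""

    def __init__(self, local_addresses):
        self.table = ForwardingTable()
        self.punted = []  # packets the agent itself had to process
        # Traffic addressed to the switch itself goes to the CPU.
        for address in local_addresses:
            self.table.program(f"{address}/32", CPU_PORT)

    def apply_config(self, config: dict) -> None:
        # Assumed config layout: {"routes": {prefix: egress_port}}
        for prefix, port in config["routes"].items():
            self.table.program(prefix, port)

    def handle_packet(self, dst: str) -> str:
        port = self.table.lookup(dst)
        if port is None or port == CPU_PORT:
            self.punted.append(dst)  # assumption: unmatched packets are punted too
            return CPU_PORT
        return port


agent = Agent(local_addresses=["10.0.0.1"])
agent.apply_config({"routes": {"10.1.0.0/16": "port1", "10.1.2.0/24": "port2"}})
print(agent.handle_packet("10.1.2.7"))  # port2 (the /24 is more specific than the /16)
print(agent.handle_packet("10.1.9.9"))  # port1
print(agent.handle_packet("10.0.0.1"))  # cpu (destined to the switch itself)
```

In a real switch the lookup happens in the forwarding ASIC at line rate; the agent only installs the entries and sees the small share of packets that are punted to it.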

Our Wedge top-of-rack switch follows this basic design and uses a single Broadcom Trident II ASIC for high-speed forwarding.

Tomi Engdahl says:

Inspur contributes new data center rack management spec to OCP

https://www.cablinginstall.com/data-center/article/14196503/inspur-contributes-new-data-center-rack-management-spec-to-ocp

The company contends that rack-scale servers offer 100% higher deployment density and 10 times higher delivery efficiency than traditional servers.

Tomi Engdahl says:

Microsoft reveals its MASSIVE data center (Full Tour)

https://www.youtube.com/watch?v=80aK2_iwMOs&feature=share

Tomi Engdahl says:

Stephen Nellis / Reuters:

AMD touts Meta as a data center chip customer, as it targets Nvidia with new chips like MI200, a family of server accelerators to boost ML and other workloads — Advanced Micro Devices Inc (AMD.O) on Monday said it has won Meta Platforms Inc (FB.O) as a data center chip customer …

AMD lands Meta as customer and takes on Nvidia, sending shares up 11%

https://www.reuters.com/technology/amd-lands-meta-customer-takes-aim-nvidia-with-new-supercomputing-chips-2021-11-08/

Nov 8 (Reuters) – Advanced Micro Devices Inc (AMD.O) on Monday said it has won Meta Platforms Inc (FB.O) as a data center chip customer, sending AMD shares up more than 11% as it cemented some of its gains against Intel Corp (INTC.O).

It also announced a range of new chips aimed at taking on larger rivals such as Nvidia Corp (NVDA.O) in supercomputing markets, as well as smaller competitors, including Ampere Computing in the cloud computing market.

After years of trailing the much larger Intel in the market for x86 processor chips, AMD has steadily gained market share since 2017, when a comeback plan spearheaded by Chief Executive Lisa Su put the company on a course to its present position of having faster chips than Intel’s.

AMD now has nearly a quarter of the market for x86 chips, according to Mercury Research.